13- Implementing a neural network for music genre classification

Показать описание

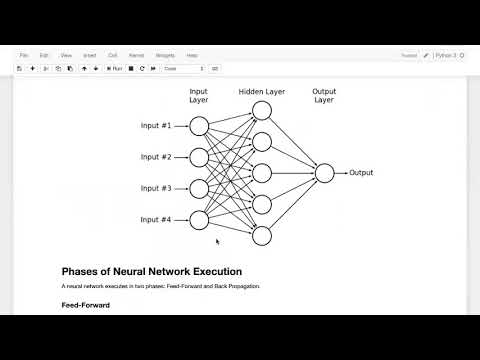

In this video, I implement a music genre classifier using TensorFlow. The classifier is trained on MFCC features extracted from the Marsyas music dataset. While building the network, I also introduce a few fundamental deep learning concepts such as binary/multiclass classification, rectified linear units, batching, and overfitting.
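
The description names several building blocks without showing them. The following is a minimal pure-Python sketch, not the TensorFlow code from the video. It assumes a fully connected network that maps one 13-coefficient MFCC vector to probabilities over 10 genres. Both sizes are assumptions, and the helpers `dense`, `random_layer`, `make_batches` and `predict` are hypothetical names. The sketch shows ReLU hidden units, a sigmoid output for the binary case next to a softmax output for the multiclass case, and shuffling the data into mini-batches. The random inputs only stand in for real MFCC features.

```python
import math
import random

def relu(z):
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)

def sigmoid(z):
    """Squashes one score into a probability (binary classification)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    """Turns one score per class into a probability distribution (multiclass)."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases, activation=None):
    """Fully connected layer; `weights` holds one row of input weights per unit."""
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

def random_layer(n_in, n_out, rng):
    """Hypothetical helper: small random weights and zero biases."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def make_batches(samples, batch_size, rng):
    """Shuffles the samples and splits them into mini-batches."""
    order = list(range(len(samples)))
    rng.shuffle(order)
    return [[samples[i] for i in order[start:start + batch_size]]
            for start in range(0, len(order), batch_size)]

# Assumed (hypothetical) sizes: 13 MFCC values per input vector, 10 genres.
N_MFCC, N_HIDDEN, N_GENRES = 13, 32, 10
rng = random.Random(42)
w1, b1 = random_layer(N_MFCC, N_HIDDEN, rng)
w2, b2 = random_layer(N_HIDDEN, N_GENRES, rng)

def predict(mfcc_vector):
    """Forward pass: ReLU hidden layer, then softmax over the genres."""
    hidden = dense(mfcc_vector, w1, b1, activation=relu)
    return softmax(dense(hidden, w2, b2))

# Fake data standing in for extracted MFCC features (not real audio).
samples = [[rng.gauss(0.0, 1.0) for _ in range(N_MFCC)] for _ in range(100)]
batches = make_batches(samples, batch_size=32, rng=rng)
probs = predict(batches[0][0])
print(len(batches), [len(b) for b in batches])  # 4 [32, 32, 32, 4]
print(round(sum(probs), 6))  # 1.0
```

Only the forward pass and batching are shown. The weights stay random because training (backpropagation) is omitted. During training, overfitting would show up as accuracy on the training data climbing while accuracy on a held-out validation set stalls or drops.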

Video slides:

Code:

Interested in hiring me as a consultant/freelancer?

Join The Sound Of AI Slack community:

Follow Valerio on Facebook:

Valerio's LinkedIn:

Valerio's Twitter: