JavaScript performance is weird... Write scientifically faster code with benchmarking

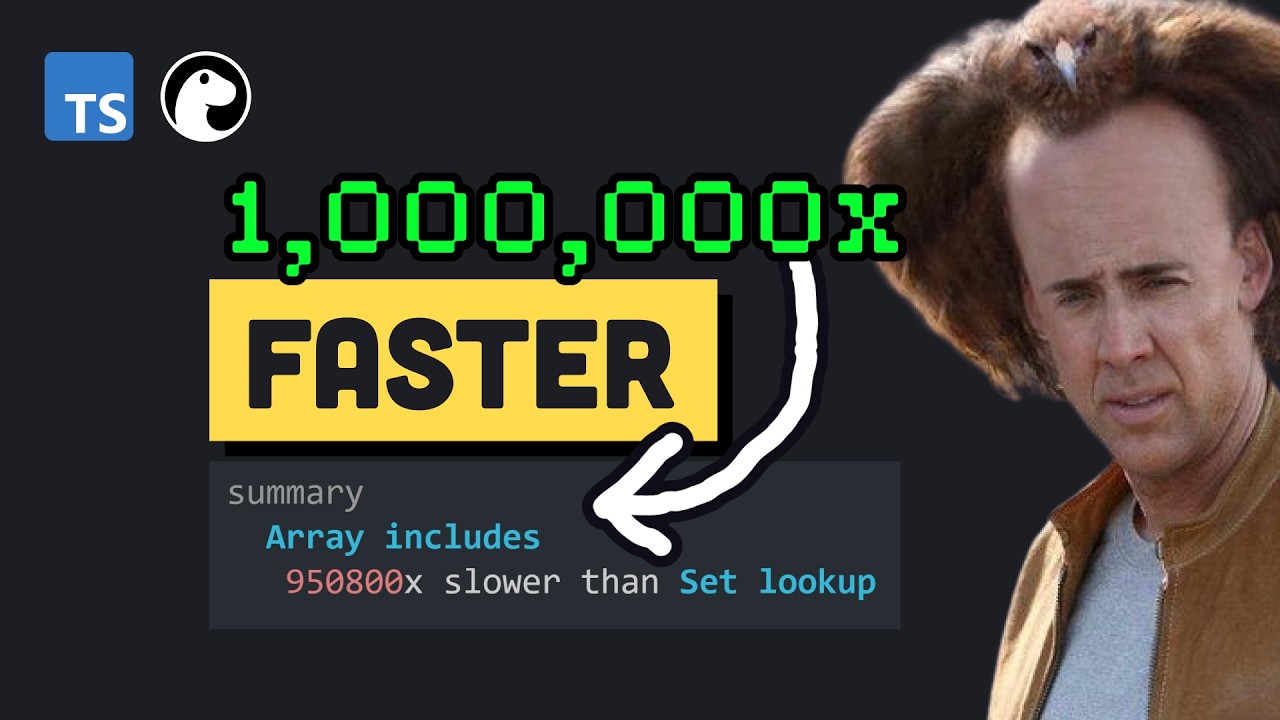

Learn how to benchmark your JavaScript code in Deno and find out how the way you write code affects performance. Why is a traditional for loop faster than forEach? And is premature optimization the root of all evil?
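To make the for-loop-versus-`forEach` question concrete, here is a minimal hand-rolled micro-benchmark sketch in TypeScript that should run under Deno or Node. The helper names `sumFor`, `sumForEach` and `bench` are hypothetical, and the warm-up and run counts are arbitrary assumptions. Which loop wins, and by how much, depends on the engine version and the data, so treat the printed numbers as a local measurement rather than a general rule. For real work, Deno's built-in `deno bench` command (driven by `Deno.bench`) handles warm-up and statistics for you.

```typescript
// Hand-rolled micro-benchmark: classic for loop vs Array.prototype.forEach.
// Helper names (sumFor, sumForEach, bench) are illustrative, not a library API.

function sumFor(values: number[]): number {
  let total = 0;
  for (let i = 0; i < values.length; i++) {
    total += values[i];
  }
  return total;
}

function sumForEach(values: number[]): number {
  let total = 0;
  values.forEach((v) => {
    total += v;
  });
  return total;
}

interface BenchResult {
  name: string;
  medianMs: number;
  result: number;
}

// Runs fn a few times as warm-up so the JIT can optimize it, then times
// `runs` further calls and reports the median. An odd run count keeps the
// median a single measured value.
function bench(
  name: string,
  fn: () => number,
  warmup = 5,
  runs = 21,
): BenchResult {
  let result = 0;
  for (let i = 0; i < warmup; i++) result = fn();
  const times: number[] = [];
  for (let i = 0; i < runs; i++) {
    const start = performance.now();
    result = fn(); // keep the result so the work is not dead code
    times.push(performance.now() - start);
  }
  times.sort((a, b) => a - b);
  const medianMs = times[Math.floor(times.length / 2)];
  return { name, medianMs, result };
}

// One million small integers (0..99 repeating) as shared input.
const data = Array.from({ length: 1_000_000 }, (_, i) => i % 100);

const results: BenchResult[] = [
  bench("for loop", () => sumFor(data)),
  bench("forEach", () => sumForEach(data)),
];

for (const r of results) {
  console.log(`${r.name}: ${r.medianMs.toFixed(3)} ms (sum = ${r.result})`);
}
```

Returning the sum from each benchmarked function keeps the engine from discarding the loop as unused work, and taking the median rather than the mean dampens outliers such as garbage-collection pauses.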
