
Understanding Hadoop EcoSystem (2018)

What is Hadoop EcoSystem | HDFS | MapReduce | HBase | Pig | Hive | Sqoop | Flume | ZooKeeper

In this session, let us explore the Hadoop ecosystem.

The success of the Hadoop framework has led to the development of an array of related software tools.

Hadoop, together with this set of related tools, makes up the Hadoop ecosystem.

The main purpose of these tools is to extend the functionality of the Hadoop framework and to make working with it more efficient.

The Hadoop ecosystem comprises Apache Pig, Apache HBase, Apache Hive, Apache Sqoop, Apache Flume and Apache ZooKeeper, along with the Hadoop Distributed File System (HDFS) and MapReduce.

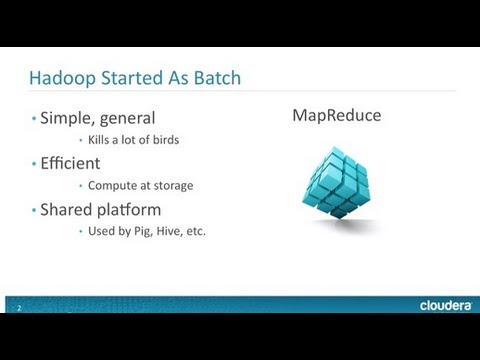

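We are already familiar with the functionality provided by the Hadoop Distributed File System, which stores large datasets across the machines of the cluster, and the MapReduce framework, which processes that data in parallel.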
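As a quick refresher, the MapReduce model splits a job into a map phase that emits key/value pairs, a shuffle that groups the pairs by key, and a reduce phase that combines each group. The sketch below is a single-process Python simulation of the classic word-count job; it is not Hadoop's Java API, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle/sort: group all intermediate values by key, sorted by key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # On a real cluster, mappers and reducers run in parallel on many
    # machines; here the three phases simply run one after another.
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(k, vs) for k, vs in grouped.items())


if __name__ == "__main__":
    print(word_count(["Hadoop stores data", "MapReduce processes data"]))
    # → {'data': 2, 'hadoop': 1, 'mapreduce': 1, 'processes': 1, 'stores': 1}
```

Because the mapper and reducer only ever see one line or one key at a time, the same logic can be spread across many machines without changes.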

Now let us explore the functionality offered by the rest of these tools.

Let us begin with Apache Pig.

Apache Pig is a high-level platform for writing data analysis programs for large datasets stored in a Hadoop cluster. Pig translates these programs into MapReduce jobs that run on the cluster.

Its scripting language is called Pig Latin.
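A Pig Latin script is a sequence of dataflow steps, each producing a new relation from the previous one. The sketch below shows an illustrative four-line script in the comments (the input path `access_log` and its schema are assumptions) and mirrors each statement with a small in-memory Python function; it only imitates the semantics and does not run Pig.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Illustrative Pig Latin script (hypothetical input path and schema):
#   logs   = LOAD 'access_log' USING PigStorage(',') AS (user:chararray, bytes:int);
#   big    = FILTER logs BY bytes > 100;
#   grp    = GROUP big BY user;
#   totals = FOREACH grp GENERATE group, SUM(big.bytes);

Record = Tuple[str, int]


def load(lines: List[str]) -> List[Record]:
    """LOAD ... USING PigStorage(','): split comma-delimited lines into typed fields."""
    records = []
    for line in lines:
        user, nbytes = line.split(",")
        records.append((user, int(nbytes)))
    return records


def filter_by_bytes(records: List[Record], threshold: int) -> List[Record]:
    """FILTER logs BY bytes > threshold."""
    return [r for r in records if r[1] > threshold]


def group_by_user(records: List[Record]) -> Dict[str, List[Record]]:
    """GROUP big BY user: collect every record that shares the same user."""
    groups: Dict[str, List[Record]] = defaultdict(list)
    for r in records:
        groups[r[0]].append(r)
    return dict(groups)


def sum_bytes(groups: Dict[str, List[Record]]) -> Dict[str, int]:
    """FOREACH grp GENERATE group, SUM(big.bytes)."""
    return {user: sum(b for _, b in recs) for user, recs in groups.items()}


if __name__ == "__main__":
    raw = ["alice,120", "bob,80", "alice,300", "carol,150"]
    print(sum_bytes(group_by_user(filter_by_bytes(load(raw), 100))))
    # → {'alice': 420, 'carol': 150}
```

The point of Pig is that the analyst writes only the four declarative lines; Pig plans how to execute them across the cluster.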

Apache HBase is a column-oriented database that runs on top of HDFS and supports random, real-time reads and writes of data.
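HBase addresses each value by a row key, a column (written `family:qualifier`) and a timestamp, and keeps rows sorted by row key. The toy model below illustrates that data model in plain Python; the class `ToyHTable` and its `max_versions` parameter are hypothetical and are not the HBase client API.

```python
import time
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


class ToyHTable:
    """In-memory sketch of the HBase data model (not the real client API)."""

    def __init__(self, families: List[str], max_versions: int = 3) -> None:
        self.families = set(families)      # column families are fixed up front
        self.max_versions = max_versions   # hypothetical per-cell version limit
        # row key -> "family:qualifier" -> [(timestamp, value)], newest first
        self.rows: Dict[str, Dict[str, List[Tuple[int, bytes]]]] = defaultdict(dict)

    def put(self, row: str, column: str, value: bytes, ts: Optional[int] = None) -> None:
        family = column.split(":", 1)[0]
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        ts = ts if ts is not None else time.time_ns()
        versions = self.rows[row].setdefault(column, [])
        versions.append((ts, value))
        versions.sort(key=lambda v: v[0], reverse=True)  # newest version first
        del versions[self.max_versions:]                  # drop the oldest extras

    def get(self, row: str, column: str) -> Optional[bytes]:
        """Return the newest version of a cell, or None if it does not exist."""
        versions = self.rows.get(row, {}).get(column)
        return versions[0][1] if versions else None

    def scan(self, start: str, stop: str) -> List[str]:
        """Row keys in [start, stop); rows are ordered by key, as in HBase."""
        return [k for k in sorted(self.rows) if start <= k < stop]


if __name__ == "__main__":
    t = ToyHTable(["info"])
    t.put("user#001", "info:name", b"Alice", ts=1)
    t.put("user#001", "info:name", b"Alicia", ts=2)
    print(t.get("user#001", "info:name"))  # → b'Alicia'
```

Keeping rows sorted by key is what makes range scans cheap, which is why row-key design matters so much in HBase.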

Apache Hive is a data warehouse system that lets you query data stored in HDFS using a SQL-like language. This query language is called HiveQL.
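To show what "SQL-like" means in practice, the sketch below uses a simple aggregation query whose text is valid both as HiveQL and as SQLite SQL. Python's built-in `sqlite3` module is used purely as a local stand-in to show what the query returns; the table `page_views` and its columns are hypothetical, and in Hive the table would instead be backed by files in HDFS and the query compiled into distributed jobs.

```python
import sqlite3
from typing import List, Tuple

# Query text that reads the same in HiveQL and in SQLite.
QUERY = """
SELECT page, COUNT(*) AS view_count
FROM page_views
GROUP BY page
ORDER BY view_count DESC, page
"""


def top_pages(rows: List[Tuple[str, str]]) -> List[Tuple[str, int]]:
    """Run QUERY over (user_id, page) rows using an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    try:
        # SQLite DDL; a Hive CREATE TABLE would also describe the file format.
        conn.execute("CREATE TABLE page_views (user_id TEXT, page TEXT)")
        conn.executemany("INSERT INTO page_views VALUES (?, ?)", rows)
        return conn.execute(QUERY).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    views = [("u1", "/home"), ("u2", "/home"), ("u1", "/docs")]
    print(top_pages(views))  # → [('/home', 2), ('/docs', 1)]
```

The appeal of Hive is exactly this: analysts who already know SQL can query data in HDFS without writing MapReduce code by hand.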

Apache Sqoop is a tool for transferring bulk data between Hadoop and relational database management systems (RDBMS), in either direction.
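Conceptually, a Sqoop import reads every row of a relational table and writes it out as delimited text files in HDFS. The sketch below imitates only the import direction: `sqlite3` stands in for the RDBMS, a returned string stands in for the HDFS file, and the table name `customers` is hypothetical. Writing NULLs as the text `null` is an assumption of this sketch.

```python
import sqlite3

# Roughly comparable real command (placeholders left unfilled):
#   sqoop import --connect jdbc:mysql://<host>/<db> --table customers --target-dir /data/customers


def import_table(conn: sqlite3.Connection, table: str) -> str:
    """Return every row of `table` as comma-delimited text, one line per row."""
    lines = []
    # Identifiers cannot be bound as SQL parameters, so `table` is assumed to
    # be a trusted name here. ORDER BY rowid keeps the output order stable.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY rowid"):
        # NULL values are written as the text "null" in this sketch.
        lines.append(",".join("null" if v is None else str(v) for v in row))
    return "".join(line + "\n" for line in lines)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Asha"), (2, None)])
    print(import_table(conn, "customers"), end="")
    # → 1,Asha
    # → 2,null
    conn.close()
```

A real Sqoop import also splits the table across several parallel map tasks, so the output usually lands in multiple files rather than one.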

Apache Flume is a service for collecting streaming data and moving it into a Hadoop cluster. A good example of streaming data is the data continuously being written to log files.
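A Flume agent is built from three parts: a source that turns incoming data into events, a channel that buffers those events, and a sink that drains the channel into storage such as HDFS. The toy model below illustrates that flow with Python's `queue.Queue` as the channel and a list standing in for HDFS; the function names and the `batch_size` parameter are hypothetical, not Flume's configuration keys.

```python
import queue
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class Event:
    """A Flume-style event: a byte payload plus optional string headers."""
    body: bytes
    headers: Dict[str, str] = field(default_factory=dict)


def log_source(lines: Iterable[str], channel: "queue.Queue[Event]", host: str) -> None:
    """Source: wrap each new log line in an event and put it on the channel."""
    for line in lines:
        channel.put(Event(body=line.encode(), headers={"host": host}))


def hdfs_sink(channel: "queue.Queue[Event]", storage: List[bytes], batch_size: int = 100) -> int:
    """Sink: move up to batch_size events from the channel into storage."""
    written = 0
    while written < batch_size:
        try:
            event = channel.get_nowait()
        except queue.Empty:
            break  # channel drained for now
        storage.append(event.body)
        written += 1
    return written


if __name__ == "__main__":
    channel: "queue.Queue[Event]" = queue.Queue(maxsize=1000)
    hdfs: List[bytes] = []
    log_source(["GET /index.html 200", "GET /missing 404"], channel, host="web01")
    print(hdfs_sink(channel, hdfs))  # → 2
    print(hdfs)  # → [b'GET /index.html 200', b'GET /missing 404']
```

The channel is what decouples the two ends: the source can keep accepting log lines even when the sink is temporarily slower.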

Finally, Apache ZooKeeper provides the coordination these distributed components need in order to function properly, such as shared configuration, synchronization and leader election.
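One common ZooKeeper coordination recipe is leader election: each candidate creates an ephemeral, sequential znode under an election path, and the candidate owning the lowest sequence number is the leader. Ephemeral znodes vanish when their session ends, so leadership passes to the next candidate automatically. The single-process toy below illustrates that recipe; `ToyZooKeeper` and its methods are hypothetical and are not the ZooKeeper client API.

```python
import itertools
from typing import Dict, Optional


class ToyZooKeeper:
    """In-memory sketch of ephemeral sequential znodes (not the real API)."""

    def __init__(self) -> None:
        # One counter for every path here; real ZooKeeper keeps one per parent.
        self._seq = itertools.count()
        self.znodes: Dict[str, str] = {}  # znode path -> owning session id

    def create_ephemeral_sequential(self, prefix: str, session: str) -> str:
        # Sequential znodes get a zero-padded 10-digit suffix, so sorting the
        # paths as strings also sorts them by sequence number.
        path = f"{prefix}{next(self._seq):010d}"
        self.znodes[path] = session
        return path

    def close_session(self, session: str) -> None:
        # Ephemeral znodes are removed when the session that created them ends.
        self.znodes = {p: s for p, s in self.znodes.items() if s != session}

    def leader(self, prefix: str) -> Optional[str]:
        """The session owning the lowest-numbered candidate znode leads."""
        candidates = sorted(p for p in self.znodes if p.startswith(prefix))
        return self.znodes[candidates[0]] if candidates else None


if __name__ == "__main__":
    zk = ToyZooKeeper()
    for session in ["node-a", "node-b"]:
        zk.create_ephemeral_sequential("/election/n_", session)
    print(zk.leader("/election/n_"))  # → node-a
    zk.close_session("node-a")         # node-a crashes or disconnects
    print(zk.leader("/election/n_"))  # → node-b
```

In a real deployment each candidate would also watch the znode just ahead of its own, so only one client is notified when a leader disappears.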

This concludes our discussion on the topic "Hadoop EcoSystem".


