Data Automation (CI/CD) with a Real Life Example


Get my Modern Data Essentials training (for free) & start building more reliable data architectures

-----

One of the most fun aspects of being a data engineer is creating different automations.

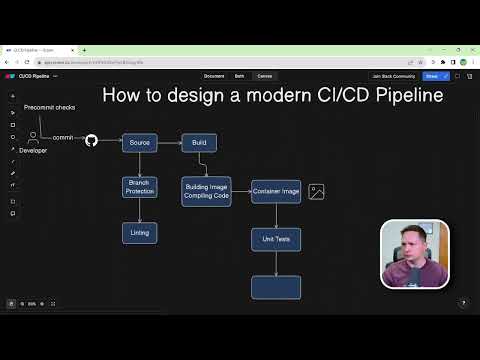

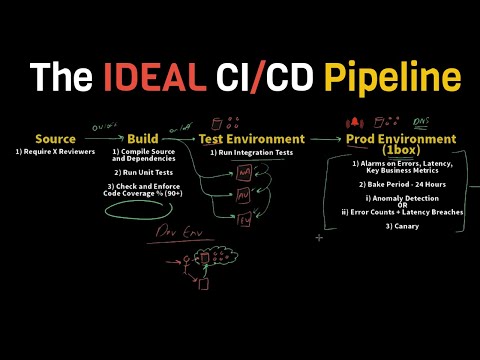

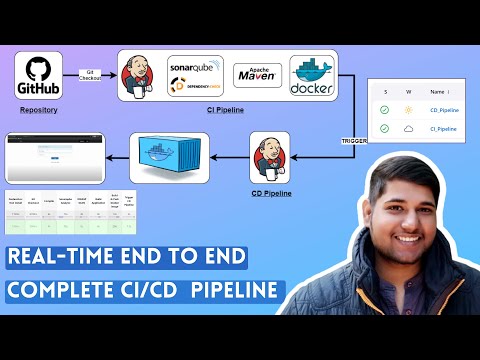

And in particular, one area that's really important is CI/CD, which stands for Continuous Integration and Continuous Deployment.

This is where you can automate your testing and release strategy.

But I also understand that this concept can be a little vague or unclear if you haven't seen it in action.

So in today's video I'll show you a real-life example of how to use GitHub to make this happen.

This is just one reason people really like code-based tools: they make it possible to automate tasks like this.

This applies not only to your deployments but also, as we'll mostly cover in this video, to automating your data quality checks.
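To make the idea of an automated data quality check a bit more concrete, here is a minimal Python sketch of the kind of script a CI workflow could run on each push. It is not the code from the video; the function names (`find_quality_issues`, `exit_code`) and the column names (`id`, `amount`) are made up for illustration. The only real convention it relies on is that a CI step is typically marked as failed when the command it runs exits with a non-zero code.

```python
from typing import Any, Dict, Iterable, List, Sequence


def find_quality_issues(
    rows: Iterable[Dict[str, Any]],
    key: str = "id",                                 # hypothetical unique-key column
    required: Sequence[str] = ("id", "amount"),      # hypothetical required columns
) -> List[str]:
    """Return a human-readable message for every problem found in rows."""
    issues: List[str] = []
    seen = set()
    for index, row in enumerate(rows):
        # Check 1: required columns must not be missing or null.
        for column in required:
            if row.get(column) is None:
                issues.append(f"row {index}: missing value in '{column}'")
        # Check 2: the key column must be unique across rows.
        value = row.get(key)
        if value is not None:
            if value in seen:
                issues.append(f"row {index}: duplicate {key} {value!r}")
            seen.add(value)
    return issues


def exit_code(issues: List[str]) -> int:
    """Map the check result to a process exit code (0 = pass, 1 = fail)."""
    return 1 if issues else 0


# In a real script you would load rows from your warehouse or a file and
# finish with something like: sys.exit(exit_code(find_quality_issues(rows)))
```

A workflow step that runs a script like this would go red whenever `exit_code` returns 1, which is what lets the check block a merge or release automatically.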

Thank you for watching!

Timestamps:

0:00 - Intro

0:43 - Create Workflow File

1:18 - Review File Layout

2:55 - Use Pre-built Actions

4:06 - Trigger Workflow

Title & Tags:

Data Automation (CI/CD) with a Real Life Example

#kahandatasolutions #dataengineering #automation
