
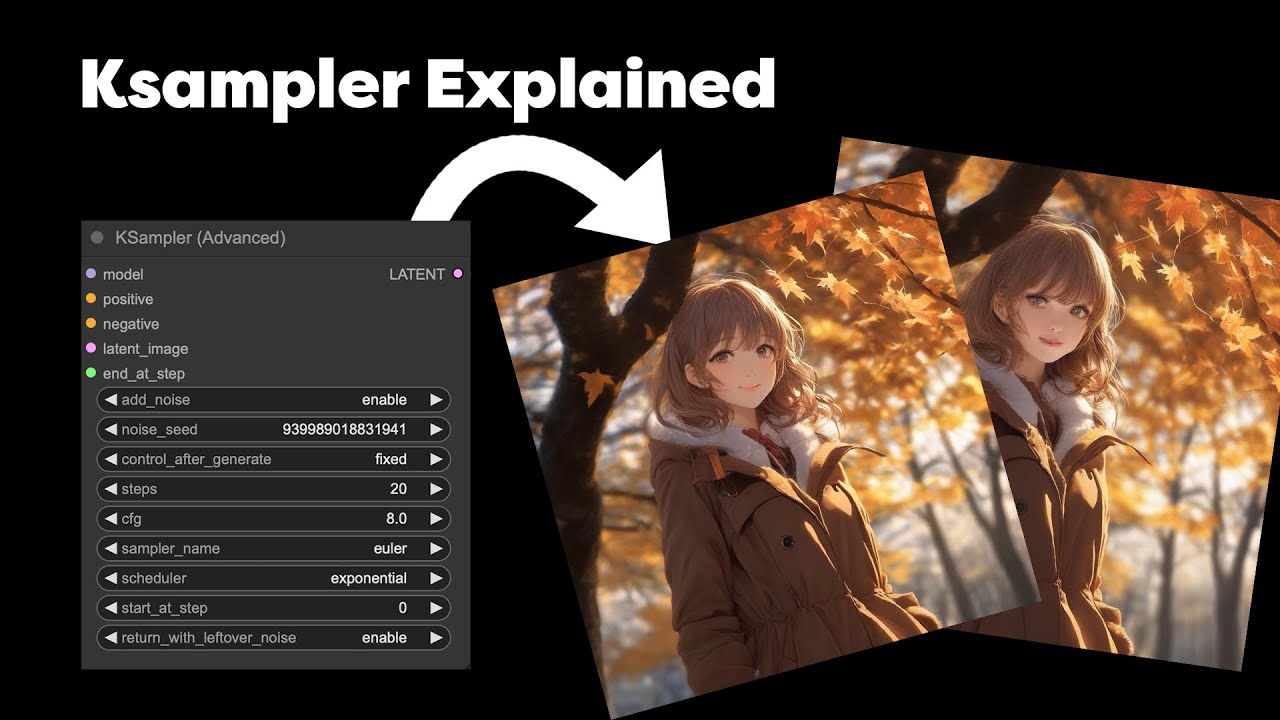

Comfy UI K sampler Explained | How AI Image generation works | Simple explanation


This is my attempt to explain how KSamplers in ComfyUI work, along with a VERY simplified explanation of how Stable Diffusion and image generation work.

If you want to support the channel, please do so at



L1: Using ComfyUI, EASY basics - Comfy Academy

ComfyUI EP02: AI Image Generation Process (Details of K-Sampler Node) [Stable Diffusion]

Stable Diffusion 09 How to Choose a Sampler

A look at the NEW ComfyUI Samplers & Schedulers!

ComfyUI: Advanced Understanding (Part 1)

ComfyUI - Getting Started : Episode 1 - Better than AUTO1111 for Stable Diffusion AI Art generation

Stable Diffusion Samplers - Which samplers are the best and all settings explained!

ComfyUI SDXL Advanced Setup Part 5 - Adv dual sampler

Complete Comfy UI Guide Part 1 | Beginner to Pro Series

ComfyUI Tutorial Series: Ep02 - Nodes and Workflow Basics

ComfyUI - Getting Started : Episode 2 - Custom Nodes Everyone Should Have

LATENT Tricks - Amazing ways to use ComfyUI

ComfyUI Impact Pack - Tutorial #7: Advanced IMG2IMG using Regional Sampler

Understanding ComfyUI Nodes: A Comprehensive Guide

ComfyUI Impact Pack: Tutorial #5 - Preventing Prompt Bleeding based on Regional Samplers

ComfyUI: Area Composition, Multi Prompt Workflow Tutorial

How to install and use ComfyUI - Stable Diffusion.

ComfyUI for Beginner - Part - 1 - Stable Diffusion

ComfyUI Impact Pack: Tutorial #6 - Regional LoRA based on Regional Samplers

Why ComfyUI is The BEST UI for Stable Diffusion!

ComfyUI - Prompt Engineering with CFG, Sampler Steps and Clip Skipping - for Stable Diffusion Users

1000% FASTER Stable Diffusion in ONE STEP!

ComfyUI Tutorial Series: Ep04 - IMG2IMG and LoRA Basics
