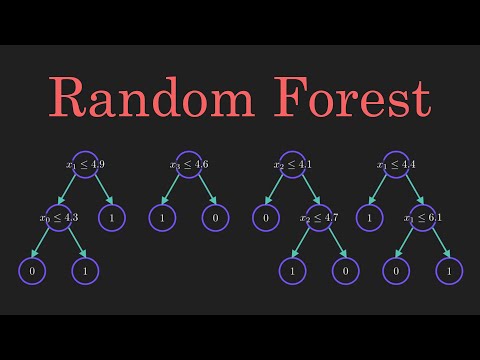

Random Forest Regression Introduction and Intuition


Welcome to "The AI University".

About this video:

This video, titled "Random Forest Regression Introduction and Intuition", explains ensemble learning and two of its main techniques, boosting and bagging. It then explains which of these approaches the random forest algorithm takes to build a random forest regression model, and closes with the advantages and disadvantages of random forest regression.
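To make the bagging idea concrete, here is a minimal sketch in plain Python. It is not taken from the video: it assumes a toy one-dimensional dataset and uses one-split regression trees ("stumps") as base learners, and every function name in it is hypothetical. A real random forest grows deeper trees and also samples a random subset of features at each split, which this sketch leaves out because there is only one feature.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Fit a one-split regression tree on 1-D data by minimizing squared error."""
    best = None
    # Candidate thresholds are the observed x values, excluding the largest
    # so that the right-hand side of the split is never empty.
    for t in sorted(set(xs))[:-1]:
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    if best is None:
        # The bootstrap sample contained a single distinct x: predict a constant.
        m = mean(ys)
        return (xs[0], m, m)
    return best[1:]

def predict_stump(stump, x):
    """Return the left or right leaf mean depending on the threshold."""
    t, left_mean, right_mean = stump
    return left_mean if x <= t else right_mean

def fit_bagged(xs, ys, n_estimators=25, seed=0):
    """Bagging: train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def predict_bagged(models, x):
    """For regression, the ensemble prediction is the average over all models."""
    return mean(predict_stump(m, x) for m in models)

# Toy data (an assumption for illustration): a step from 1.0 to 5.0 at x = 5.
xs = list(range(1, 11))
ys = [1.0] * 5 + [5.0] * 5

models = fit_bagged(xs, ys)
p_low = predict_bagged(models, 2)
p_high = predict_bagged(models, 9)
print(p_low, p_high)
```

Averaging many learners, each trained on a different resample of the data, is what reduces the variance of the final prediction compared with any single tree.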

Subtitles available in: English


About this Channel:

The AI University is a channel on a mission to democratize education in artificial intelligence, big data (Hadoop), and cloud computing for the entire world. It aims to teach aspiring data scientists, data analysts, data engineers, and cloud architects, and to offer advanced material to those who already have some of this knowledge.

Please share, comment, like, and subscribe if you liked this video. If you have any specific questions, post them in the comment section and I'll try to get back to you.


DISCLAIMER: This video and description may contain affiliate links, which means that if you click on one of the product links, I’ll receive a small commission.

#RandomForestRegression #EnsembleLearning #MachineLearning

