Understanding Implicit Neural Representations with Itzik Ben-Shabat

In this episode of Computer Vision Decoded, we are going to dive into implicit neural representations.

We are joined by Itzik Ben-Shabat, a Visiting Research Fellow at the Australian National University (ANU) and Technion – Israel Institute of Technology, as well as the host of the Talking Paper Podcast.

You will gain a core understanding of implicit neural representations, their key concepts and terminology, how they are being used in applications today, and Itzik's research into improving output with limited input data.

Episode timeline:

00:00 Intro

01:23 Overview of what implicit neural representations are

04:08 How INR compares and contrasts with a NeRF

08:17 Why Itzik pursued this line of research

10:56 What is normalization and what are normals

13:13 Past research people should read to learn about the basics of INR

16:10 What is an implicit representation (without the neural network)

24:27 What is DiGS and what problem with INR does it solve?

35:54 What is OG-INR and what problem with INR does it solve?

40:43 What software can researchers use to understand INR?

49:15 What information should non-scientists focus on to learn about INR?

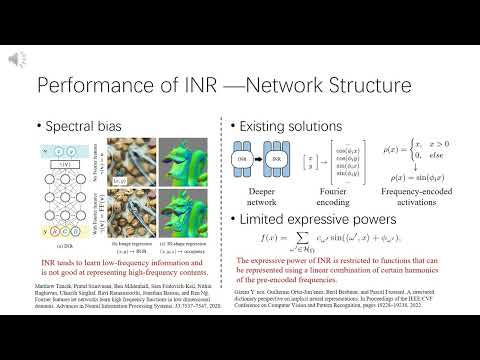
