Neural Networks From Scratch - Lec 16 - Summary of all Activation functions in 10 mins


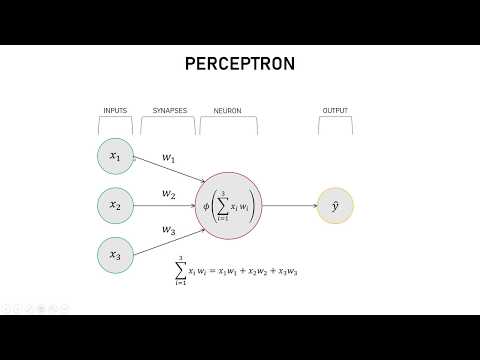

Building neural networks from scratch in Python.

This is the sixteenth video of the course "Neural Networks From Scratch". It summarizes all the activation functions covered so far. For each function, we review the motivation, definition, properties, and performance comparison.

Neural Networks From Scratch Playlist:

Activation Functions Playlist:

Github Repo:

Step Activation:

Sigmoid Activation:

Tanh Activation:

Softmax Activation:

ReLU Activation:

Variants of ReLU:

Maxout Activation:

Softplus Activation:

Swish Activation:

Mish Activation:

GeLU Activation:

Please like and subscribe to the channel for more videos. This will help me in assessing your interests and creating more content. Thank you!

Chapters:

0:00 Introduction

0:50 Step Activation

1:54 Sigmoid Activation

3:27 Tanh Activation

4:12 ReLU Activation

5:58 Softplus Activation

6:54 Maxout Activation

8:16 GeLU Activation

9:11 Swish Activation

10:07 Mish Activation

11:07 Softmax Activation
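For readers who want the definitions at a glance, the functions listed in the chapters above can be sketched in plain Python as follows. This is a minimal sketch based on the commonly used textbook definitions, not the code from the video or its repository. The function names, the step threshold at 0, the `beta` parameter of Swish, and the `weights`/`biases` arguments of Maxout are assumptions made for illustration.

```python
import math

def step(x):
    # Binary step; placing the threshold at 0 is an assumed convention.
    return 1.0 if x >= 0 else 0.0

def sigmoid(x):
    # Logistic function 1 / (1 + e^-x), written to avoid overflow for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def tanh(x):
    # Hyperbolic tangent, output in (-1, 1).
    return math.tanh(x)

def relu(x):
    # Rectified linear unit: max(0, x).
    return max(0.0, x)

def softplus(x):
    # log(1 + e^x), rewritten as max(x, 0) + log1p(e^-|x|) for stability.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def maxout(x, weights, biases):
    # Maximum over k affine pieces w_j . x + b_j (hypothetical argument layout:
    # `weights` is a list of k weight vectors, `biases` a list of k scalars).
    return max(
        sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
        for w, b in zip(weights, biases)
    )

def gelu(x):
    # Exact GELU: x * Phi(x), with Phi the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def swish(x, beta=1.0):
    # Self-gated activation x * sigmoid(beta * x); beta=1 is assumed here.
    return x * sigmoid(beta * x)

def mish(x):
    # x * tanh(softplus(x)).
    return x * math.tanh(softplus(x))

def softmax(xs):
    # Normalized exponentials over a list; subtracting the max avoids overflow.
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]
```

All functions except `maxout` and `softmax` act on a single scalar; applying them to a layer's output means mapping them over each element. `softmax` takes the whole vector because its outputs must sum to 1.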

#stepactivationfunction, #sigmoidactivationfunction, #reluactivationfunction, #softmaxactivationfunction, #tanhactivationfunction, #geluactivationfunction, #swishactivationfunction, #mishactivationfunction, #softplusactivationfunction, #activationfunctioninneuralnetwork, #vanishinggradient, #selfgatedactivationfunction, #dropout

