Machine Learning Basics: Confusion Matrix & Precision/Recall Simplified | By Dr. Ry @Stemplicity

This tutorial covers the basics of the confusion matrix, which is used to describe the performance of classification models.

The tutorial also covers the difference between true positives, true negatives, false positives, and false negatives, using a disease-screening example in which TRUE means the patient has the disease:

• True positives (TP): the classifier predicted TRUE (patient has the disease), and the correct class was TRUE (patient has the disease).

• True negatives (TN): the classifier predicted FALSE (no disease), and the correct class was FALSE (patient does not have the disease).

• False positives (FP) (Type I error): the classifier predicted TRUE, but the correct class was FALSE (patient does not have the disease).

• False negatives (FN) (Type II error): the classifier predicted FALSE (patient does not have the disease), but the patient actually does have the disease.

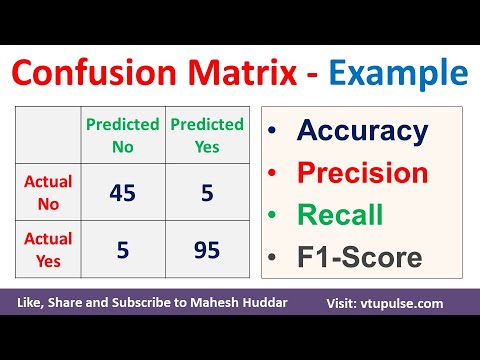
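The four outcomes above can be counted directly from a list of actual labels and a list of predictions. The following is a minimal sketch, not the tutorial's own code: the function name `confusion_counts` and the sample labels are hypothetical, chosen only to illustrate the definitions.

```python
def confusion_counts(actual, predicted):
    """Return (TP, TN, FP, FN) for two equal-length sequences of booleans.

    True means "has the disease"; False means "no disease".
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")

    tp = tn = fp = fn = 0
    for a, p in zip(actual, predicted):
        if p and a:
            tp += 1   # predicted TRUE, actually TRUE
        elif not p and not a:
            tn += 1   # predicted FALSE, actually FALSE
        elif p and not a:
            fp += 1   # predicted TRUE, actually FALSE (Type I error)
        else:
            fn += 1   # predicted FALSE, actually TRUE (Type II error)
    return tp, tn, fp, fn


# Hypothetical labels for eight patients (illustrative data only).
actual    = [True, True,  True, False, False, False, False, True]
predicted = [True, False, True, False, True,  False, False, True]

tp, tn, fp, fn = confusion_counts(actual, predicted)
print(f"TP={tp} TN={tn} FP={fp} FN={fn}")  # prints: TP=3 TN=3 FP=1 FN=1
```

The four counts always sum to the number of samples, which is a quick sanity check when filling in a confusion matrix by hand.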

The tutorial also covers the difference between classification accuracy, error rate, precision, and recall. These metrics are defined as follows:

• Classification Accuracy = (TP + TN) / (TP + TN + FP + FN)

• Misclassification Rate (Error Rate) = (FP + FN) / (TP + TN + FP + FN)

• Precision = TP / Total TRUE Predictions = TP / (TP + FP) (when the model predicted the TRUE class, how often was it right?)

• Recall = TP / Actual TRUE = TP / (TP + FN) (when the class was actually TRUE, how often did the classifier get it right?)
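As a rough illustration of the four formulas above, the sketch below computes them from raw counts. The function name `classification_metrics` and the example counts are assumptions made for this example, not values from the tutorial. Returning `None` when a denominator is zero is also a design choice of this sketch; other conventions exist.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, error rate, precision, and recall from the four counts."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("at least one sample is required")

    return {
        "accuracy": (tp + tn) / total,
        "error_rate": (fp + fn) / total,
        # Precision is undefined if the model never predicted TRUE.
        "precision": tp / (tp + fp) if (tp + fp) else None,
        # Recall is undefined if no sample was actually TRUE.
        "recall": tp / (tp + fn) if (tp + fn) else None,
    }


# Hypothetical counts for 100 patients (illustrative only).
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts the accuracy is 85/100 = 0.85 and the error rate is 15/100 = 0.15, so the two sum to 1, as the formulas imply. Precision is 40/45 and recall is 40/50.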

If you want to learn more, here’s a link to my new machine learning Classification course on Udemy:

Here’s a link to my new machine learning regression course on Udemy:

Subscribe to my channel to get the latest updates; we will be releasing new videos on a weekly basis:
