Gaussian Mixture Model (GMM): Introduction [E12]

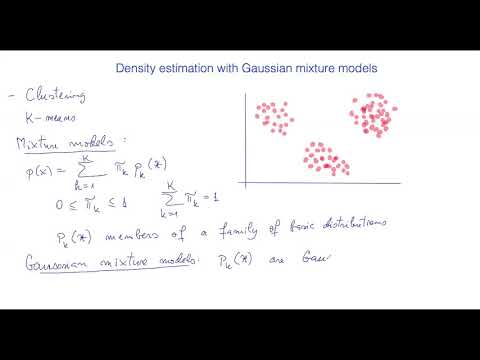

In this video, I introduce the Gaussian Mixture Model (GMM) and discuss maximum likelihood, which the next lecture covers in more detail. There we will find the parameters: the means, the covariance matrices, and the mixing coefficients π_k.
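As a rough illustration of the quantity that maximum likelihood works with, here is a minimal sketch of the GMM log-likelihood, sum over n of log(sum over k of π_k N(x_n | μ_k, σ_k²)). It is not taken from the video: it assumes one-dimensional data, so each covariance matrix reduces to a single variance, and the function names `gaussian_pdf` and `gmm_log_likelihood` and the sample data are made up for the example.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a univariate normal N(mean, var) evaluated at x."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def gmm_log_likelihood(data, weights, means, variances):
    """Return sum_n log( sum_k pi_k * N(x_n | mu_k, var_k) ) for 1D data."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("mixing weights (the pi_k) must sum to 1")
    total = 0.0
    for x in data:
        # Mixture density at x: weighted sum of the component densities.
        mixture_density = sum(
            w * gaussian_pdf(x, m, v)
            for w, m, v in zip(weights, means, variances)
        )
        total += math.log(mixture_density)
    return total


# Illustrative (made-up) data with two visible clusters near -2 and 3.
data = [-2.1, -1.9, -2.0, 3.0, 3.2, 2.8]

# Parameters that match the clusters versus parameters that do not.
good = gmm_log_likelihood(data, [0.5, 0.5], [-2.0, 3.0], [0.05, 0.05])
poor = gmm_log_likelihood(data, [0.5, 0.5], [0.0, 1.0], [1.0, 1.0])
print(good > poor)
```

Parameters that sit on the clusters give a higher log-likelihood than parameters that do not, which is the sense in which maximum likelihood picks the means, variances, and π_k.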

Notes link:

Gaussian Mixture Models (GMM) Explained

Gaussian Mixture Model

What are Gaussian Mixture Models? | Soft clustering | Unsupervised Machine Learning | Data Science

Gaussian Mixture Model | Gaussian Mixture Model in Machine Learning | GMM Explained | Simplilearn

Gaussian Mixture Models (GMM) Explained | Gaussian Mixture Model in Machine Learning | Simplilearn

Gaussian Mixture Models Explained | Basics of ML

Gaussian Mixture Models

Gaussian Mixture Models (GMMs) #datascience #normaldistribution #machinelearning #statistics

Intro to mixture models and GMM

Gaussian Mixture Model (GMM): Introduction [E12]

Gaussian Mixture Model | Intuition & Introduction | TensorFlow Probability

52b - Understanding Gaussian Mixture Model (GMM) using 1D, 2D, and 3D examples

Tutorial 72 - What is Gaussian Mixture Model (GMM) and how to use it for image segmentation?

What is Gaussian Mixture Model (GMM) in Machine Learning? Decoding Gaussian Mixture Models

R Tutorial: Gaussian mixture models (GMM)

Clustering (4): Gaussian Mixture Models and EM

CLUG1: Gaussian Mixture Modeling Introduction

Gaussian mixture models: introduction

Lecture 19: Gaussian Mixture Model (GMM)

MATLAB skills, machine learning, sect 4: Gaussian Mixture Models, What are Gaussian Mixture Models?

ML Math Review: Gaussian Mixture Models

R tutorial -- Gaussian Mixture Model

RO-1.0X212: Gaussian Mixture Models - Introduction

Fitting a GMM using Maximum Likelihood
