Handling Missing Data Easily Explained | Machine Learning

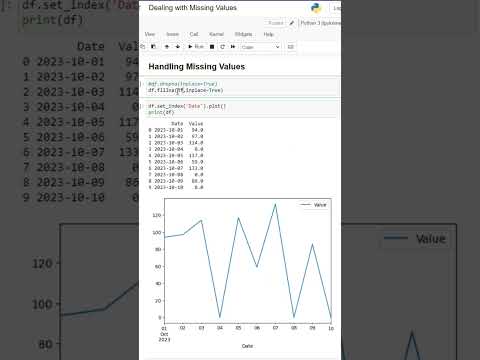

Data can have missing values for a number of reasons, such as observations that were not recorded or data corruption.

Handling missing data is important because many machine learning algorithms do not support data with missing values.

In this tutorial, you will discover how to handle missing data for machine learning with Python.

Specifically, after completing this tutorial you will know:

How to mark invalid or corrupt values as missing in your dataset.

How to remove rows with missing data from your dataset.

How to impute missing values with mean values in your dataset.
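The three steps above can be sketched with pandas. This is a minimal illustration, not the code from the video: the DataFrame and its column names are hypothetical, and the sketch assumes that a value of 0 in the `glucose` and `blood_pressure` columns means "not recorded" (a physically impossible reading), while 0 would be a legitimate value elsewhere.

```python
import numpy as np
import pandas as pd

# Hypothetical toy dataset (assumption): a 0 in glucose or blood_pressure
# is treated as an unrecorded/corrupt measurement, not a real reading.
df = pd.DataFrame({
    "glucose": [148, 85, 0, 89, 137],
    "blood_pressure": [72, 66, 64, 0, 40],
    "age": [50, 31, 32, 21, 33],
})

# Step 1: mark invalid values as missing by replacing 0 with NaN,
# but only in the columns where 0 is known to be invalid.
cols_with_invalid_zeros = ["glucose", "blood_pressure"]
df[cols_with_invalid_zeros] = df[cols_with_invalid_zeros].replace(0, np.nan)
print(df.isna().sum())  # number of missing values per column

# Step 2: remove every row that contains at least one missing value.
df_dropped = df.dropna()
print(df_dropped.shape)

# Step 3: impute missing values with each column's mean;
# df.mean() skips NaN by default, so the mean uses only recorded values.
df_imputed = df.fillna(df.mean())
print(df_imputed)
```

Dropping rows is the simplest option but discards data (here 2 of the 5 rows). Mean imputation keeps every row; when training a model, compute the means on the training split only and reuse them for the test split, so that no test-set information leaks into training.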

You can buy my book, which gives a detailed explanation of how to use machine learning and deep learning in finance with Python.

Don't Replace Missing Values In Your Dataset.

#06 - Handling Missing Data Part 1 | Handling Missing Data Easily Explained | Machine Learning 2022

How To Handle Missing Values in Categorical Features

Dealing With Missing Data Part I

Handling Missing Values in Pandas Dataframe | GeeksforGeeks

Dealing with Missing Values in Machine Learning: Easy Explanation for Data Science Interviews

Understanding missing data and missing values. 5 ways to deal with missing data using R programming

Day-2 Application of SPSS for Data Analysis (Quantitative Data Analysis)

Handling Missing Values | Machine Learning | GeeksforGeeks

Dealing With Missing Data - Multiple Imputation

Python Pandas Tutorial 5: Handle Missing Data: fillna, dropna, interpolate

Handling Missing Values | Python for Data Analysts

Handling Missing Data | Part 1 | Complete Case Analysis

Impute missing values using KNNImputer or IterativeImputer

Handling NA in R | is.na, na.omit & na.rm Functions for Missing Values

R Tutorial: Handling missing data

Python Tutorial: Handling missing values

Handling Missing Data in Stata

How to Handle Missing Values in R Using RStudio

Data Pre-processing in R: Handling Missing Data

4.3. Handling Missing Values in Machine Learning | Imputation | Dropping

Dealing With Missing Values Explained for Beginners | Dropping / Imputing Data

Python Pandas Tutorial (Part 9): Cleaning Data - Casting Datatypes and Handling Missing Values
