filmov

tv

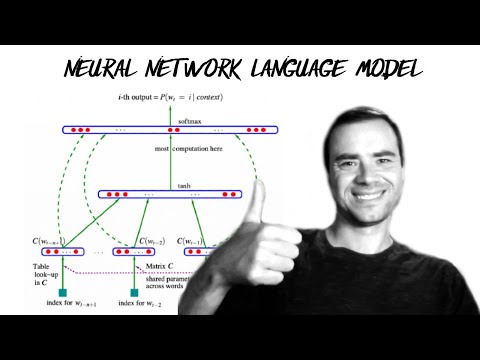

Building makemore Part 3: Activations & Gradients, BatchNorm

Показать описание

We dive into some of the internals of MLPs with multiple layers and scrutinize the statistics of the forward pass activations, backward pass gradients, and some of the pitfalls when they are improperly scaled. We also look at the typical diagnostic tools and visualizations you'd want to use to understand the health of your deep network. We learn why training deep neural nets can be fragile and introduce the first modern innovation that made doing so much easier: Batch Normalization. Residual connections and the Adam optimizer remain notable todos for later video.

Links:

Useful links:

Exercises:

- E01: I did not get around to seeing what happens when you initialize all weights and biases to zero. Try this and train the neural net. You might think either that 1) the network trains just fine or 2) the network doesn't train at all, but actually it is 3) the network trains but only partially, and achieves a pretty bad final performance. Inspect the gradients and activations to figure out what is happening and why the network is only partially training, and what part is being trained exactly.

- E02: BatchNorm, unlike other normalization layers like LayerNorm/GroupNorm etc. has the big advantage that after training, the batchnorm gamma/beta can be "folded into" the weights of the preceeding Linear layers, effectively erasing the need to forward it at test time. Set up a small 3-layer MLP with batchnorms, train the network, then "fold" the batchnorm gamma/beta into the preceeding Linear layer's W,b by creating a new W2, b2 and erasing the batch norm. Verify that this gives the same forward pass during inference. i.e. we see that the batchnorm is there just for stabilizing the training, and can be thrown out after training is done! pretty cool.

Chapters:

00:00:00 intro

00:01:22 starter code

00:04:19 fixing the initial loss

00:12:59 fixing the saturated tanh

00:27:53 calculating the init scale: “Kaiming init”

00:40:40 batch normalization

01:03:07 batch normalization: summary

01:04:50 real example: resnet50 walkthrough

01:14:10 summary of the lecture

01:18:35 just kidding: part2: PyTorch-ifying the code

01:26:51 viz #1: forward pass activations statistics

01:30:54 viz #2: backward pass gradient statistics

01:32:07 the fully linear case of no non-linearities

01:36:15 viz #3: parameter activation and gradient statistics

01:39:55 viz #4: update:data ratio over time

01:46:04 bringing back batchnorm, looking at the visualizations

01:51:34 summary of the lecture for real this time

Links:

Useful links:

Exercises:

- E01: I did not get around to seeing what happens when you initialize all weights and biases to zero. Try this and train the neural net. You might think either that 1) the network trains just fine or 2) the network doesn't train at all, but actually it is 3) the network trains but only partially, and achieves a pretty bad final performance. Inspect the gradients and activations to figure out what is happening and why the network is only partially training, and what part is being trained exactly.

- E02: BatchNorm, unlike other normalization layers like LayerNorm/GroupNorm etc. has the big advantage that after training, the batchnorm gamma/beta can be "folded into" the weights of the preceeding Linear layers, effectively erasing the need to forward it at test time. Set up a small 3-layer MLP with batchnorms, train the network, then "fold" the batchnorm gamma/beta into the preceeding Linear layer's W,b by creating a new W2, b2 and erasing the batch norm. Verify that this gives the same forward pass during inference. i.e. we see that the batchnorm is there just for stabilizing the training, and can be thrown out after training is done! pretty cool.

Chapters:

00:00:00 intro

00:01:22 starter code

00:04:19 fixing the initial loss

00:12:59 fixing the saturated tanh

00:27:53 calculating the init scale: “Kaiming init”

00:40:40 batch normalization

01:03:07 batch normalization: summary

01:04:50 real example: resnet50 walkthrough

01:14:10 summary of the lecture

01:18:35 just kidding: part2: PyTorch-ifying the code

01:26:51 viz #1: forward pass activations statistics

01:30:54 viz #2: backward pass gradient statistics

01:32:07 the fully linear case of no non-linearities

01:36:15 viz #3: parameter activation and gradient statistics

01:39:55 viz #4: update:data ratio over time

01:46:04 bringing back batchnorm, looking at the visualizations

01:51:34 summary of the lecture for real this time

Комментарии

1:55:58

1:55:58

1:42:32

1:42:32

1:55:24

1:55:24

0:56:22

0:56:22

1:15:40

1:15:40

1:57:45

1:57:45

0:00:32

0:00:32

0:00:19

0:00:19

0:00:42

0:00:42

0:00:27

0:00:27

0:00:33

0:00:33

0:00:39

0:00:39

0:00:26

0:00:26

0:00:35

0:00:35

0:00:48

0:00:48

0:00:29

0:00:29

0:00:57

0:00:57

0:00:33

0:00:33

0:00:31

0:00:31

0:00:20

0:00:20

0:00:57

0:00:57

0:00:24

0:00:24

0:01:00

0:01:00

0:00:12

0:00:12