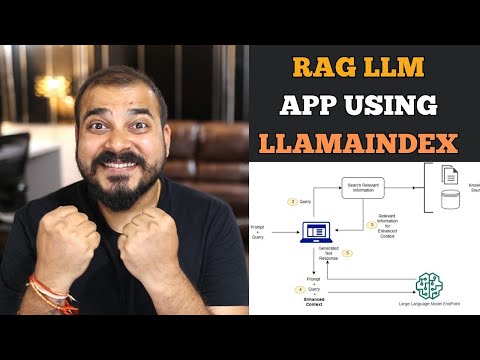

LlamaIndex 22: Llama 3.1 Local RAG using Ollama | Python | LlamaIndex

About this video: In this video, you will learn how to create RAG from scratch in LlamaIndex.
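To make the idea of "RAG from scratch" concrete, here is a minimal sketch of the retrieve-then-prompt loop. It is not the code from the video: it uses only the Python standard library, scores chunks with a toy bag-of-words cosine similarity instead of real embeddings, and stops at building the prompt rather than calling a model. The names `tokenize`, `cosine`, `retrieve`, `build_prompt`, and `CHUNKS` are all hypothetical. In a LlamaIndex and Ollama setup, the scoring step would presumably be handled by an embedding model and vector index, and the final prompt would be sent to a locally served Llama 3.1.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, chunks, top_k=2):
    """Return up to top_k chunks that share vocabulary with the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(c))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop chunks with no overlap at all (toy stand-in for a similarity cutoff).
    return [c for score, c in scored[:top_k] if score > 0]


def build_prompt(query, context_chunks):
    """Assemble the augmented prompt that would be sent to the LLM."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical document chunks, for illustration only.
CHUNKS = [
    "Ollama runs large language models such as Llama 3.1 on a local machine.",
    "LlamaIndex is a framework for connecting documents to language models.",
    "PySpark is the Python API for Apache Spark.",
]

if __name__ == "__main__":
    question = "What does Ollama run?"
    print(build_prompt(question, retrieve(question, CHUNKS, top_k=1)))
```

The three stages (chunk, retrieve, augment the prompt) are the same ones a full pipeline performs; only the quality of the similarity measure and the presence of an actual generation step differ.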

Large Language Model (LLM) - LangChain

Large Language Model (LLM) - LlamaIndex

Machine Learning Model Deployment

Spark with Python (PySpark)

Data Preprocessing (scikit-learn)

Social Media Links

#llamaindex #openai #llm #ai #huggingface #api #genai #generativeai #statswire

Llama 3 8B: BIG Step for Local AI Agents! - Full Tutorial (Build Your Own Tools)

'I want Llama3 to perform 10x with my private knowledge' - Local Agentic RAG w/ llama3

EASILY Train Llama 3 and Upload to Ollama.com (Must Know)

Step-by-Step Guide to Building a RAG LLM App with LLamA2 and LLaMAindex

LLMs for Advanced Question-Answering over Tabular/CSV/SQL Data (Building Advanced RAG, Part 2)

'I want Llama3.1 to perform 10x with my private knowledge' - Self learning Local Llama3.1 ...

Llama Index + Node.js App READY! 🤯 How to integrate RAG? QUICK & EASY! 🚀 (Step-by-Step Tutorial...

Building Multimodal AI RAG with LlamaIndex, NVIDIA NIM, and Milvus | LLM App Development

'okay, but I want Llama 3 for my specific use case' - Here's how

End to end RAG LLM App Using Llamaindex and OpenAI- Indexing and Querying Multiple pdf's

Python RAG Tutorial (with Local LLMs): AI For Your PDFs

Step-by-Step Guide to Build RAG App using LlamaIndex | Ollama | Llama-2 | Python

How To Use Meta Llama3 With Huggingface And Ollama

Local RAG with Llama 3.1 for PDFs | Private Chat with Your Documents using LangChain & Streamlit

What is Retrieval-Augmented Generation (RAG)?

How to chat with your PDFs using local Large Language Models [Ollama RAG]

Chat with Docs using LLAMA3 & Ollama| FULLY LOCAL| Ollama RAG|Chainlit #ai #llm #localllms

How to Download Llama 3 Models (8 Easy Ways to access Llama-3)!!!!

Everything You Need to Know About LlamaIndex

Llama 3 RAG: Create Chat with PDF App using PhiData, Here is how..

Vector databases are so hot right now. WTF are they?

LlamaIndex Webinar: Build an Open-Source Coding Assistant with OpenDevin

Fine-Tuning Llama 3 on a Custom Dataset: Training LLM for a RAG Q&A Use Case on a Single GPU
