Build an Ecosystem, Not a Monolith

Colin Raffel (University of North Carolina & Hugging Face)

Large Language Models and Transformers

Currently, the preeminent paradigm for building artificial intelligence is to develop large, general-purpose models that aim to perform every task at a (super)human level. In this talk, I will argue that an ecosystem of specialist models would likely be dramatically more efficient and could be significantly more effective. Such an ecosystem could be built collaboratively by a distributed community and continually expanded and improved. I will also outline some of the technical challenges involved in creating model ecosystems, including automatically selecting which models to use for a particular task, merging models to combine their capabilities, and efficiently communicating changes to a model.

One Year Ago I Built an Ecosystem, This Happened

You Have Never Seen An Ecosystem Like THIS - The Miniature Factory // Ep.1

The Shallow Ecosystem on My Desk (150 Day Evolution)

I Built a Tiny Ecosystem

NO WATER CHANGES for a YEAR!! Ecosystem Aquarium How To

$10 vs $1,000 Terrarium Ecosystem!

I Turned my Bedroom into a Giant Ecosystem...

Creating a flourishing ecosystem is puzzling...

673 Days Ago I Built a Boa Paludarium Ecosystem, What Happened?

Creating A Low Maintenance, Balanced Ecosystem in Any Style of Aquarium. No Water Changes or Filter

I Simulated Freshwater Ecosystem For 180 Days, No Co2, No Water Change, No Filter

I simulated a LEGO ECOSYSTEM...

600 Days old ecosystem 🌱🐸

DIY Budget Ecosystem Pond - Solo Build in 5 Days by Hand

the creatures living in my no water change ecosystem fish tank

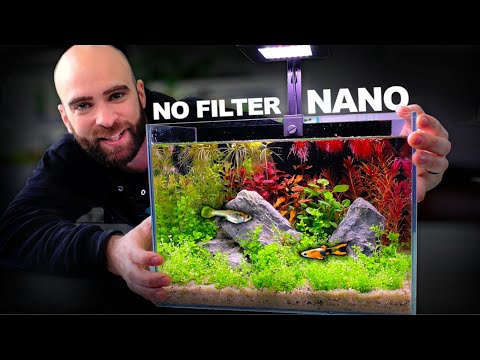

Nano Ecosystem Fish Tank You Can Put Anywhere!

My Aquaponic Ecosystem Aquarium

Building an ecosystem of ecosystems with IOG’s Sidechains Toolkit

Creating a SIMPLE But BEAUTIFUL Ecosystem: Step-by-Step Terrarium Guide

Games that Make You Part of the Ecosystem

No-Filter Guppy Sanctuary Ecosystem Fish Tank

Simulating a Beach for 50 Days

How To Fix A Broken Ecosystem.

Making my Prison Frogs a Real Ecosystem (1 year experiment)
