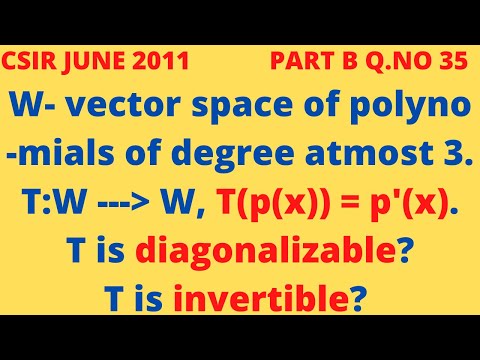

Derivative diagonalizable ?

Can you turn the derivative into a diagonal matrix? Watch this video and find out!

Derivative diagonalizable ? (0:14:52)

10.3 Solving a linear system of differential equation using diagonalization (0:08:45)

Drinking and Deriving: Diagonalization Derivation (0:11:49)

DERIVATIVE LINEAR TRANSFORMATION IS NOT DIAGONALIZABLE (0:09:40)

Diagonalizing a Matrix (0:11:37)

Eigenvectors and eigenvalues | Chapter 14, Essence of linear algebra (0:17:16)

Derivative in a box (0:08:15)

Systems of DEs by Diagonalization (0:06:26)

Advanced Linear Algebra 8: The Half Derivative (0:42:51)

non-diagonalizable systems -- differential equations 22 (0:28:07)

Non-diagonalizable Systems Part 1 (0:08:40)

15. Matrices A(t) Depending on t, Derivative = dA/dt (0:50:52)

Linear Algebra: Eigenvalues, Eigenvectors, and Diagonalization (MAT223 Final Test) (0:04:02)

Diagonalize a 2 by 2 Matrix (Full Process) (0:06:35)

5.3 and 5.4 part 1 - Similarity and Diagonalization (0:09:26)

Commuting diagonalizable matrices are simultaneously diagonalizable (0:18:26)

The Unbelievable Transformation: Finding the Factorial of the Derivative! (0:15:31)

The Matrix Exponential (0:15:32)

Homogeneous Linear Systems of ODE: The Jordan case of non-diagonalizable matrices (0:38:01)

Diagonalizable Systems Part 1 (0:14:06)

Linear Algebra: check if a 2x2 matrix is diagonalizable (0:03:07)

Diagonalizable matrix | Diagonalizable matrix property | youtube shorts # (0:01:00)

5.3 - Diagonalization (0:23:05)

How to Check a Linear Transformation is Diagonalizable or Not (0:06:27)