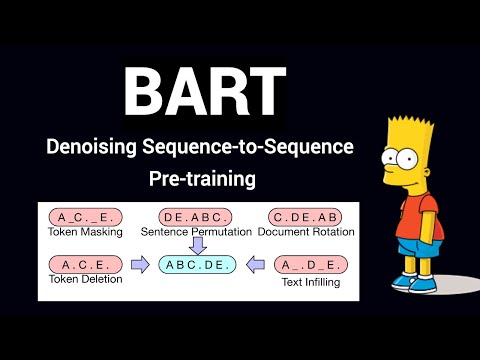

BART: Denoising Sequence-to-Sequence Pre-training for NLG & Translation (Explained)

BART is a sequence-to-sequence model that can be used for many text generation tasks, including summarization, machine translation, and abstractive question answering. It can also be used for text classification and token classification. This video explains BART's architecture and how it leverages 6 different pre-training objectives to perform well across these tasks.
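To make the "denoising" idea concrete, here is a toy sketch of the noising transformations named in the BART paper (token masking, token deletion, text infilling, sentence permutation, document rotation). During pre-training the model sees the corrupted text and learns to reconstruct the original. The function names, the `<mask>` symbol, the whitespace tokenization, and the probability `p=0.3` are all assumptions made for illustration, not the Facebook or Hugging Face implementation.

```python
import random

MASK = "<mask>"  # placeholder symbol; the name is an assumption for this sketch


def token_masking(tokens, rng, p=0.3):
    """Replace each token with MASK independently with probability p (illustrative value)."""
    return [MASK if rng.random() < p else tok for tok in tokens]


def token_deletion(tokens, rng, p=0.3):
    """Drop each token independently with probability p; no placeholder is left behind."""
    return [tok for tok in tokens if rng.random() >= p]


def text_infilling(tokens, start, length):
    """Replace the span tokens[start:start + length] with a single MASK.

    A zero-length span inserts a MASK without removing any token.
    """
    return tokens[:start] + [MASK] + tokens[start + length:]


def sentence_permutation(sentences, rng):
    """Return a copy of the sentences in a random order."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled


def document_rotation(tokens, start):
    """Rotate the token list so that it begins at index start."""
    return tokens[start:] + tokens[:start]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs give the same corruption
    tokens = "the cat sat on the mat".split()
    print(token_masking(tokens, rng))
    print(token_deletion(tokens, rng))
    print(text_infilling(tokens, start=1, length=2))
    print(sentence_permutation(["First.", "Second.", "Third."], rng))
    print(document_rotation(tokens, start=3))
```

In this sketch the span position and length for text infilling are passed in explicitly to keep the example deterministic; a real pre-training pipeline would sample them.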

BERT explained

Transformer Architecture Explained

BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension

Code (Facebook)

Code (Hugging Face)

Connect

BART: Denoising Sequence-to-Sequence Pre-training for NLG (Research Paper Walkthrough)

BART Explained: Denoising Sequence-to-Sequence Pre-training

BART: Denoising Sequence-to-Sequence Pre-training for NLG & Translation (Explained)

BART: Denoising Sequence-to-Sequence Pre-training for NLP Generation, Translation, and Comprehension

60sec papers - BART: Denoising S2S Pre-Training for NLG, Translation, and Comprehension

CMU Neural Nets for NLP 2021 (15): Sequence-to-sequence Pre-training

BART | Lecture 56 (Part 4) | Applied Deep Learning (Supplementary)

BART And Other Pre-Training (Natural Language Processing at UT Austin)

BART (Natural Language Processing at UT Austin)

BART Model Explained #machinelearning #datascience #bart #transformer #attentionmechanism

Multilingual Denoising Pre-training for Neural Machine Translation (Reading Papers)

L19.5.2.6 BART: Combining Bidirectional and Auto-Regressive Transformers

'BART' | UCLA CS 263 NLP Presentation

Unlocking BART: The Game Changer for Language Models

L19.5.2.1 Some Popular Transformer Models: BERT, GPT, and BART -- Overview

Multilingual Denoising Pre-training for Neural Machine Translation

BART: Bridging Comprehension and Generation in Natural Language Processing

Saramsh - Patent Document Summarization using BART | Workshop Capstone

Don’t Stop Pretraining | Lecture 55 (Part 3) | Applied Deep Learning (Supplementary)

Blockwise Parallel Decoding for Deep Autoregressive Models

Neural and Pretrained Machine Translation (Natural Language Processing at UT Austin)

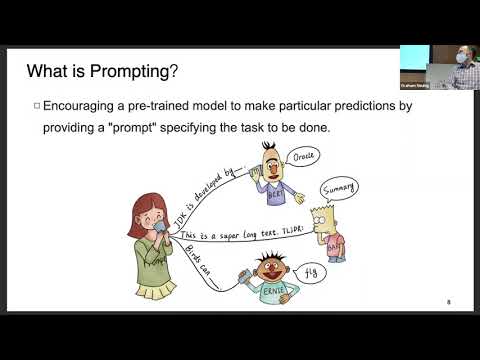

CMU Advanced NLP 2021 (10): Prompting + Sequence-to-sequence Pre-training

Bert versus Bart

Hands-On Workshop on Training and Using Transformers 3 -- Model Pretraining

Комментарии

0:12:47

0:12:47

0:03:36

0:03:36

0:18:17

0:18:17

0:13:24

0:13:24

0:00:59

0:00:59

0:27:23

0:27:23

0:04:36

0:04:36

0:06:01

0:06:01

0:05:40

0:05:40

0:00:44

0:00:44

0:19:43

0:19:43

0:10:15

0:10:15

0:20:01

0:20:01

0:03:57

0:03:57

0:08:41

0:08:41

0:17:33

0:17:33

0:04:52

0:04:52

0:08:05

0:08:05

0:09:36

0:09:36

0:23:52

0:23:52

0:07:52

0:07:52

1:17:21

1:17:21

0:01:21

0:01:21

1:17:40

1:17:40