filmov

tv

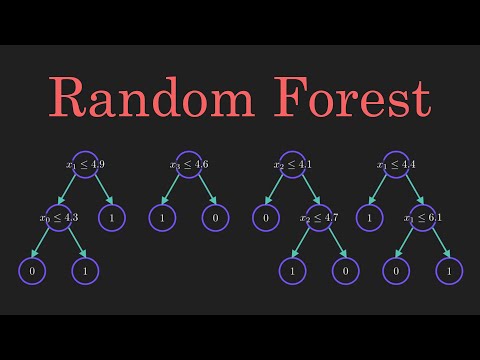

Binary classification using ensemble model

Machine learning models:

Data Set:

Ensemble Model Code:

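from sklearn.metrics import (accuracy_score, precision_score, recall_score,
                             roc_auc_score, classification_report)

accuracy_ensemble, precision_ensemble, recall_ensemble = {}, {}, {}
models_ensemble = {}

def evaluate(model, X_train, X_val, Y_train, y_val, key):
    # Prediction (the fit/predict lines are missing from the listing; this is the assumed form)
    model.fit(X_train, Y_train)
    predictions = model.predict(X_val)
    # Scores for AUC (assumption: class-1 probabilities where available, else hard labels,
    # since hard voting and a LinearSVC final estimator expose no predict_proba)
    Y_predict = model.predict_proba(X_val)[:, 1] if hasattr(model, 'predict_proba') else predictions
    # Calculate Accuracy, Precision and Recall Metrics (scikit-learn expects y_true first)
    accuracy_ensemble[key] = accuracy_score(y_val, predictions)
    precision_ensemble[key] = precision_score(y_val, predictions)
    recall_ensemble[key] = recall_score(y_val, predictions)
    auc = roc_auc_score(y_val, Y_predict)
    print('AUC: %.4f' % auc)
    print('Classification Report:')
    print(classification_report(y_val, predictions, digits=4))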
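The metric calls in `evaluate` are sensitive to argument order: scikit-learn's `precision_score` and `recall_score` take the true labels first, and swapping the two arguments swaps the two metrics (accuracy is unaffected). A minimal pure-Python sketch of why, using made-up labels and hypothetical helper names rather than the library's own implementation:

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives and false negatives."""
    tp = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
    return tp, fp, fn


def precision(y_true, y_pred):
    """Share of predicted positives that are truly positive."""
    tp, fp, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fp) if tp + fp else 0.0


def recall(y_true, y_pred):
    """Share of true positives that were predicted positive."""
    tp, _, fn = confusion_counts(y_true, y_pred)
    return tp / (tp + fn) if tp + fn else 0.0


# Toy labels, invented for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 1, 1, 0, 0]

print(precision(y_true, y_pred))  # correct order: 2 TP out of 5 predicted positives
print(recall(y_true, y_pred))     # correct order: 2 TP out of 3 actual positives
print(precision(y_pred, y_true))  # swapped order: equals the recall above
```

With the arguments swapped, false positives and false negatives trade places, so the value stored under "precision" is really the recall and vice versa.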

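from sklearn.ensemble import (AdaBoostClassifier, BaggingClassifier, ExtraTreesClassifier,
                              GradientBoostingClassifier, RandomForestClassifier,
                              StackingClassifier, VotingClassifier)
from sklearn.svm import LinearSVC
from sklearn.tree import DecisionTreeClassifier
from xgboost import XGBClassifier

tree = XGBClassifier()

# scikit-learn 1.2 renamed base_estimator to estimator; on older versions keep base_estimator=tree
models_ensemble['Bagging'] = BaggingClassifier(estimator=tree, n_estimators=40, random_state=0)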

evaluate(models_ensemble['Bagging'], X_train, X_val, Y_train, Y_val,'Bagging')
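To make the bagging step less of a black box, here is a rough pure-Python sketch of the idea: train each base learner on a bootstrap sample (drawn with replacement) and combine them by majority vote. The threshold "stump" learner, the toy 1-D data, and the function names are all assumptions chosen for illustration; `BaggingClassifier` itself is more general than this.

```python
import random


def fit_stump(xs, ys):
    """Fit a 1-D threshold rule: predict 1 when x > threshold."""
    values = sorted(set(xs))
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    if len(set(ys)) == 1 or not candidates:
        # Degenerate sample: fall back to a constant majority-label predictor.
        majority = max(set(ys), key=ys.count)
        return lambda x: majority

    def errors(threshold):
        return sum(int(x > threshold) != y for x, y in zip(xs, ys))

    best = min(candidates, key=errors)
    return lambda x: int(x > best)


def bagging_fit(xs, ys, n_estimators=40, seed=0):
    """Train n_estimators stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Majority vote over the base learners (ties go to class 0)."""
    votes = sum(m(x) for m in models)
    return int(votes * 2 > len(models))


# Toy data, invented for illustration: label is 1 when x >= 5.
xs = list(range(10))
ys = [0] * 5 + [1] * 5

models = bagging_fit(xs, ys)
print(bagging_predict(models, 1), bagging_predict(models, 9))
```

Here `n_estimators=40` and the fixed seed mirror the `n_estimators=40, random_state=0` arguments in the listing; everything else is a simplification.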

models_ensemble['AdaBoostClassifier'] = AdaBoostClassifier(n_estimators=10)

evaluate(models_ensemble['AdaBoostClassifier'], X_train, X_val, Y_train, Y_val,'AdaBoostClassifier')

models_ensemble['Gradient Boost'] = GradientBoostingClassifier(n_estimators=100, random_state=42)

evaluate(models_ensemble['Gradient Boost'], X_train, X_val, Y_train, Y_val,'Gradient Boost')

clf1 = ExtraTreesClassifier()

clf2 = RandomForestClassifier()

clf3 = XGBClassifier()

clf4 = DecisionTreeClassifier()

models_ensemble['Soft Voting'] = VotingClassifier(estimators=[('ExTrees', clf1), ('Random Forest', clf2), ('XGB', clf3),('Decision Tree',clf4)], voting='soft')

evaluate(models_ensemble['Soft Voting'],X_train, X_val, Y_train, Y_val,'Soft Voting')

clf1 = ExtraTreesClassifier()

clf2 = RandomForestClassifier()

clf3 = XGBClassifier()

clf4 = DecisionTreeClassifier()

models_ensemble['Hard Voting'] = VotingClassifier(estimators=[('ExTrees', clf1), ('Random Forest', clf2), ('XGB', clf3),('Decision Tree',clf4)], voting='hard')

evaluate(models_ensemble['Hard Voting'],X_train, X_val, Y_train, Y_val,'Hard Voting')
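The two `VotingClassifier` variants differ only in how the base models are combined: hard voting counts predicted labels, while soft voting averages predicted probabilities. A small pure-Python sketch for the binary case, with invented probabilities for four hypothetical classifiers (this is a simplification, not scikit-learn's code):

```python
def hard_vote(probas, threshold=0.5):
    """Each model casts one label vote; the majority label wins (ties go to 0)."""
    votes = [int(p > threshold) for p in probas]
    return int(sum(votes) * 2 > len(votes))


def soft_vote(probas, threshold=0.5):
    """Average the class-1 probabilities, then threshold the mean."""
    return int(sum(probas) / len(probas) > threshold)


# Made-up class-1 probabilities from four base models for a single sample:
# three are mildly negative, one is very confident in the positive class.
probas = [0.45, 0.45, 0.45, 0.95]

print(hard_vote(probas))  # three of four models vote for class 0
print(soft_vote(probas))  # the mean probability is above 0.5
```

The example is built so the two rules disagree: one confident model can flip a soft vote but counts as only a single ballot in a hard vote. Soft voting therefore relies on the base models producing reasonably calibrated probabilities.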

# models is not defined in this listing; it is assumed to be a dict of base classifiers built earlier
models_ensemble['Stacked'] = StackingClassifier(
    estimators=[('m1', models['Xgboost']), ('m2', models['Extra Tree Classifier']),
                ('m3', models['Random Forest']), ('m4', models['Decision Trees'])],
    final_estimator=LinearSVC())

evaluate(models_ensemble['Stacked'], X_train, X_val, Y_train, Y_val,'Stacked')

import pandas as pd
