Differential Privacy + Federated Learning Explained (+ Tutorial) | #AI101

Thank you to Kasia, Jeff, Gerald, Milan, Ian, Becky, Jino, Daniel, Narskogr, Jason, and Mariano for being $5+/month Patrons!

Sources:

Differential Privacy in Federated Learning - Owndata

Brendan McMahan - Guarding user Privacy with Federated Learning and Differential Privacy

Federated Learning with Formal User-Level Differential Privacy Guarantees

Federated Learning And Differential Privacy

PETER KAIROUZ: Federated Learning and Differential Privacy – Part 1

Differential Privacy in Federated Learning

Tackling Data Privacy With Federated Learning: Real-World Use Cases

Privacy Preserving AI (Andrew Trask) | MIT Deep Learning Series

NDSS 2022 Local and Central Differential Privacy for Robustness and Privacy in Federated Learning

Tech Talks - PKC - Securing Federated Learning with Differential Privacy & Cryptography

Machine learning, Distributed learning, Differential Privacy, Federated learning

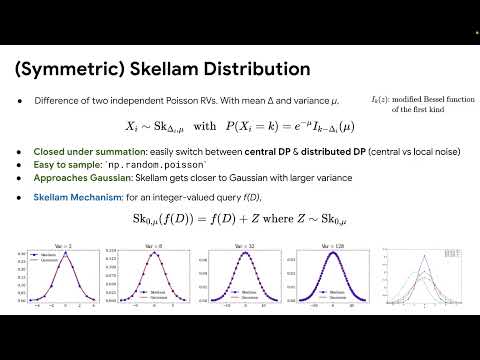

The Skellam Mechanism for Differentially Private Federated Learning

OM PriCon2020: Private Deep Learning for Hospitals using Federated Learning + Differential Privacy

Federated Learning and Differential Privacy - CanDIG Mini-Conference

FLOW Seminar #86: Chuan Guo (Meta) Privacy-Aware Compression for Federated Data Analysis

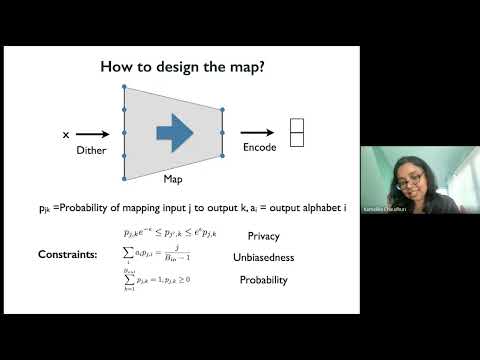

Privacy-Aware Compression for Federated Learning

Differential Privacy For Machine Learning In Action (Sensitive Data)

Abhradeep Guha Thakurta: Federated Learning with Formal User-Level Differential Privacy Guarantees

From Research to Real World Bringing Differential Privacy and Federated Learning to Life

Federated Heavy Hitters Discovery with Differential Privacy

Federated Learning for Cancer Prediction Models with Differential Privacy - iDash 2020

USENIX Security '23 - PrivateFL: Accurate, Differentially Private Federated Learning via...

Enhancing IoT Security A Novel Approach with Federated Learning and Differential Privacy Integration
