filmov

tv

PAIR AI Explorables | Is the problem in the data? Examples on Fairness, Diversity, and Bias.

Показать описание

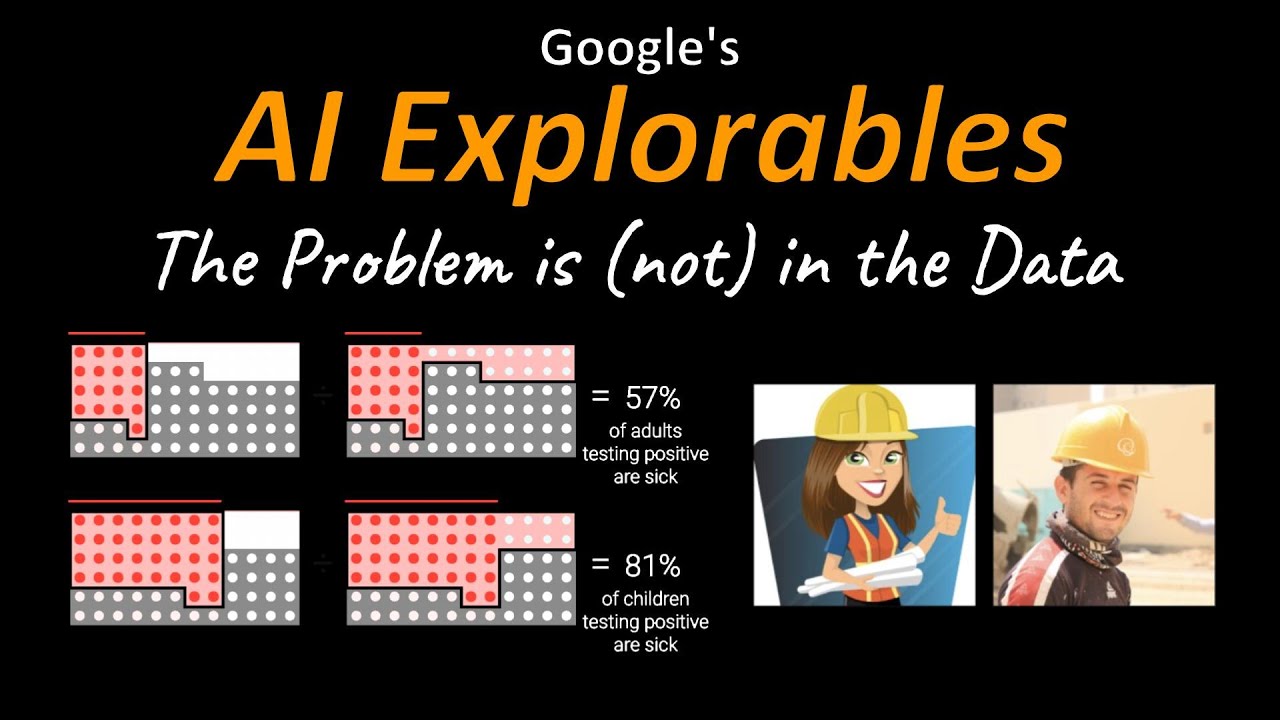

In the recurring debate about bias in Machine Learning models, there is a growing argument saying that "the problem is not in the data", often citing the influence of various choices like loss functions or network architecture. In this video, we take a look at PAIR's AI Explorables through the lens of whether or not the bias problem is a data problem.

OUTLINE:

0:00 - Intro & Overview

1:45 - Recap: Bias in ML

4:25 - AI Explorables

5:40 - Measuring Fairness Explorable

11:00 - Hidden Bias Explorable

16:10 - Measuring Diversity Explorable

23:00 - Conclusion & Comments

Links:

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

OUTLINE:

0:00 - Intro & Overview

1:45 - Recap: Bias in ML

4:25 - AI Explorables

5:40 - Measuring Fairness Explorable

11:00 - Hidden Bias Explorable

16:10 - Measuring Diversity Explorable

23:00 - Conclusion & Comments

Links:

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

Комментарии

0:23:33

0:23:33

0:08:09

0:08:09

0:02:55

0:02:55

0:03:17

0:03:17

0:12:21

0:12:21

1:16:33

1:16:33

0:15:19

0:15:19

0:00:39

0:00:39

0:26:56

0:26:56

0:21:27

0:21:27

0:12:32

0:12:32

1:35:02

1:35:02

0:47:19

0:47:19

0:02:41

0:02:41

0:30:50

0:30:50

0:09:23

0:09:23

0:13:27

0:13:27

0:59:51

0:59:51

1:05:46

1:05:46

2:33:21

2:33:21

0:13:49

0:13:49

1:58:32

1:58:32

0:16:51

0:16:51

0:05:57

0:05:57