filmov.tv

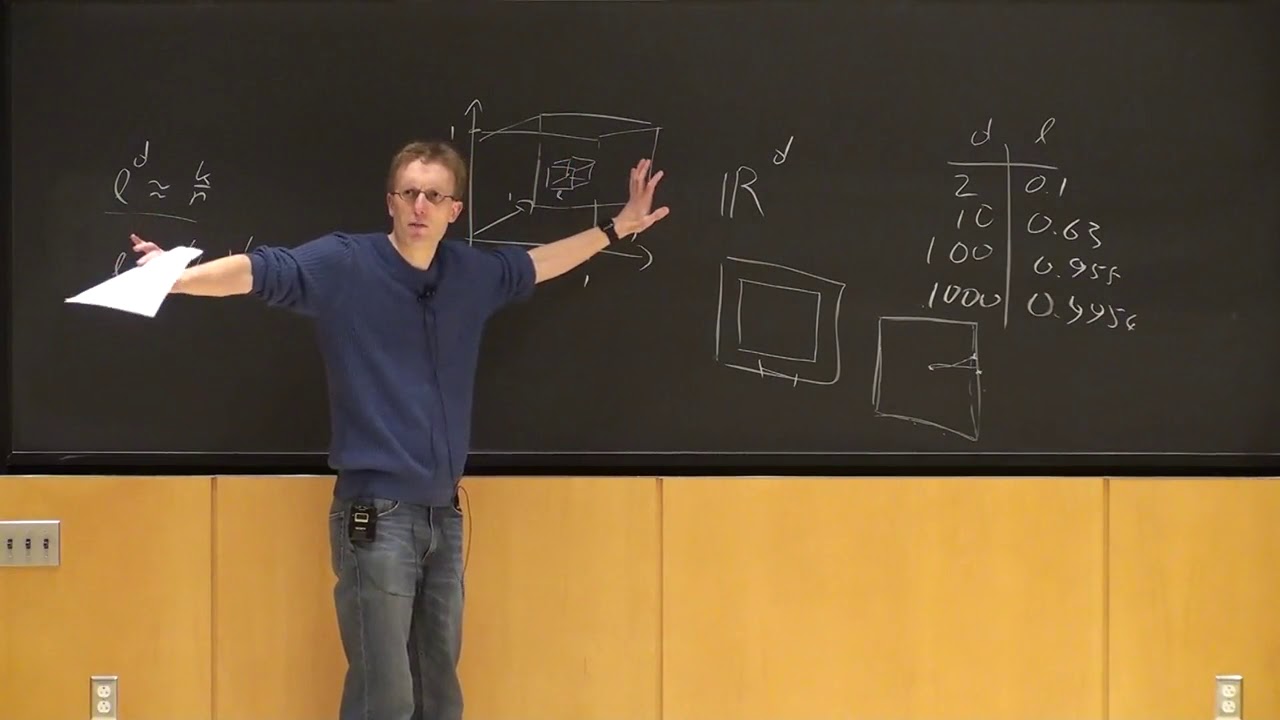

Lecture 4 'Curse of Dimensionality / Perceptron' -Cornell CS4780 SP17


4. Curse of Dimensionality

Curse of Dimensionality - Georgia Tech - Machine Learning

NLPC3: Curse of Dimensionality

MFML 095 - The curse of dimensionality

Lecture 4. Robbins-Monro Algorithm, Curse of Dimensionality, Conditional Gaussian Distributions

03.post.05 Curse of Dimensionality « Machine Learning « NUS School of Computing

What is the curse of dimensionality?

Week-7, Session-1

The Curse of Dimensionality

Curse of Dimensionality Easily Explained | Machine Learning

Dimensionality Reduction - 2 - Curse of Dimensionality

Curse of Dimensionality Two - Georgia Tech - Machine Learning

Curse of Dimensionality - EXPLAINED!

05b Machine Learning: Curse of Dimensionality

Part 22-KNN pros and cons and the curse of dimensionality

8.2 David Thompson (Part 2): Nearest Neighbors and the Curse of Dimensionality

Curse of Dimensionality

Curse of Dimensionality in Machine Learning Explained

Curse of Dimensionality : Data Science Basics

Deep Learning and the “Blessing” of Dimensionality - Babak Hassibi - 6/7/2019

Lecture 10 - Curse of Dimensionality

Data science interview question part 4 - curse of dimensionality, over/under fitted, Box-Cox, etc.

The curse of dimensionality. Or is it a blessing?
