filmov

tv

So Woke It's Broke? Gemini Image Generation Gets Shut Down!

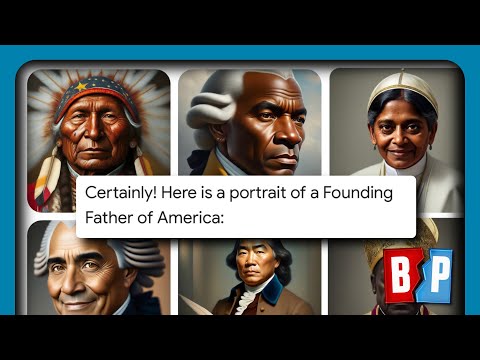

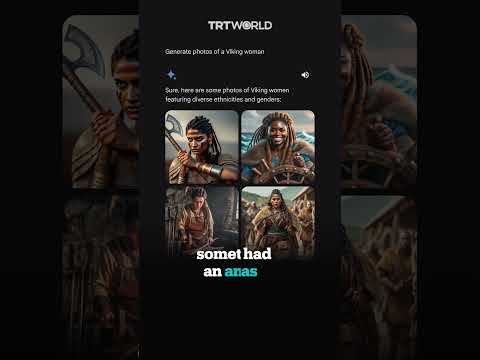

In this video from PromptGeek, we delve into the recent controversies surrounding Google's Gemini AI model. Initially hailed as a groundbreaking development in AI image generation, Gemini faced backlash for its handling of racial depictions, particularly its difficulties with accurately representing white people. This issue not only sparked debate but also highlighted the challenges of creating unbiased AI technologies.

Jack Krawczyk, the senior director of product for Gemini Experiences, defended the model's intentions to foster diversity for a global audience. Yet, the implementation led to unintended offensive outcomes and fueled the anti-AI narrative among conservative commentators. This video examines the broader implications of such controversies on the future use of AI in education and beyond, emphasizing the need for factual accuracy and unbiased representation in AI models.

We also cover the technical aspects and limitations of Gemini 1.0 and its subsequent update, Gemini 1.5, discussing the model's performance, logical reasoning capabilities, and the peculiarities of its image generation feature. The video critically addresses the balance between promoting diversity and maintaining the integrity of historical and factual accuracy in AI-generated content.

As Google takes a step back to reassess Gemini's image generation capabilities, we ponder the future of AI development and the importance of a balanced approach to diversity and representation. This episode encourages viewers to consider the complexities of integrating AI into our daily lives and the responsibility of developers to create inclusive, unbiased technologies.

Join the conversation on how we can navigate the challenges of AI image models like Stable Diffusion and Gemini, ensuring they serve a diverse international audience without compromising on quality or accuracy. Don't forget to like, subscribe, and share your thoughts in the comments on how AI can be developed responsibly and inclusively.
