Video2Video in ComfyUI [AnimateDiff] - IV

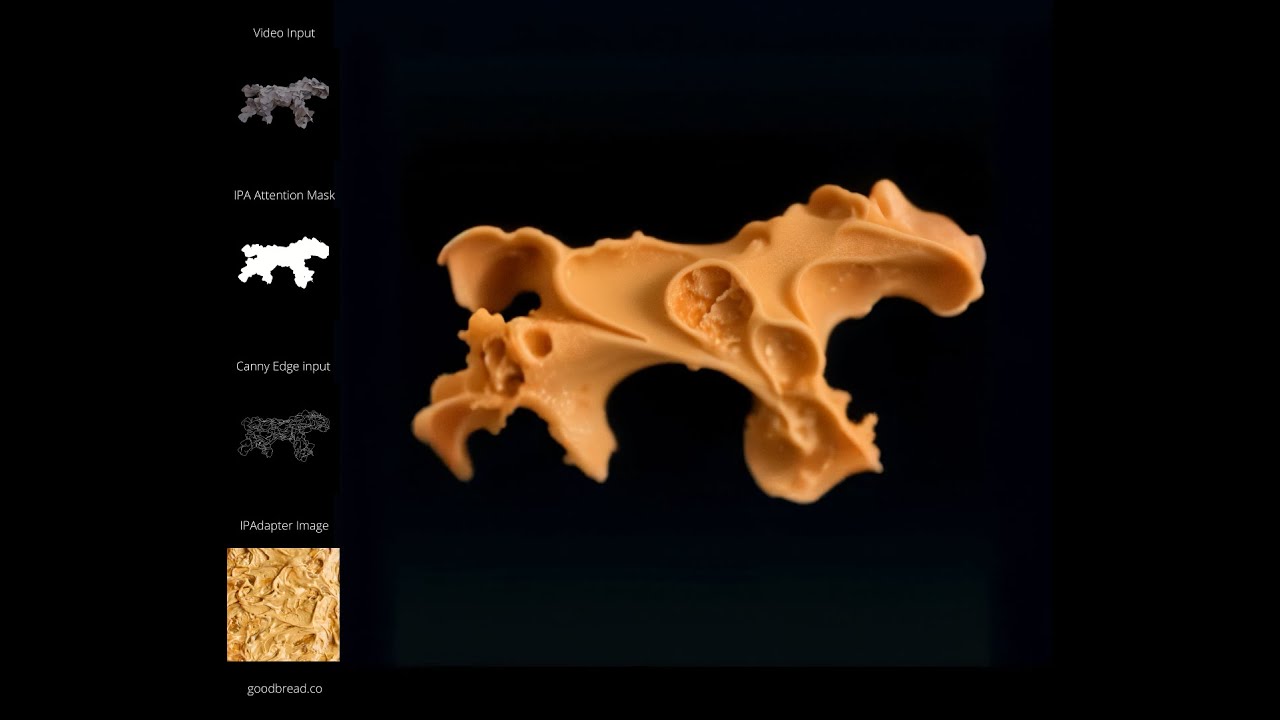

A Cinema4D clone animation of a galloping horse, fed into ComfyUI + SD 1.5.

Using the Unsampling/Resampling technique, IPAdapter, and ControlNet (driven by a Sketch&Toon line render), I experimented with a fairly wide range of output styles from a single input video loop.

The Unsampling/Resampling technique creates frame-by-frame latent noise from the input video itself, which yields fairly consistent results considering that every output is based on the same generic input video.
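
The Unsampling/Resampling idea can be illustrated with a toy sketch. It assumes that unsampling means deterministic DDIM inversion (running the sampler from the clean latent towards noise), a DDPM-style linear beta schedule, and one scalar "latent" per frame whose clean distribution is Gaussian, so the ideal noise predictor has a closed form and stands in for the SD 1.5 U-Net plus its conditioning. All names here (`unsample`, `resample`, `gaussian_eps`, the `(mu, s)` "style" parameters) are hypothetical and are not ComfyUI node APIs.

```python
import math


def linear_alpha_bars(T=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t of a DDPM-style linear beta schedule."""
    alpha_bars, prod = [], 1.0
    for t in range(T):
        beta = beta_start + (beta_end - beta_start) * t / (T - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def gaussian_eps(mu, s):
    """Ideal noise predictor E[eps | x_t] for a clean latent drawn from N(mu, s^2).

    Toy stand-in for the U-Net: (mu, s) plays the role of the conditioning.
    """
    def eps(x, a):
        return math.sqrt(1.0 - a) * (x - math.sqrt(a) * mu) / (a * s * s + 1.0 - a)
    return eps


def ddim_step(x, a_cur, a_next, eps_model):
    """Deterministic DDIM update (eta = 0) from noise level a_cur to a_next."""
    eps = eps_model(x, a_cur)
    x0_pred = (x - math.sqrt(1.0 - a_cur) * eps) / math.sqrt(a_cur)
    return math.sqrt(a_next) * x0_pred + math.sqrt(1.0 - a_next) * eps


def unsample(x0, levels, eps_model):
    """DDIM inversion: walk from the clean frame (levels[0] == 1.0) up to max noise."""
    x = x0
    for k in range(len(levels) - 1):
        x = ddim_step(x, levels[k], levels[k + 1], eps_model)
    return x


def resample(x_T, levels, eps_model):
    """Ordinary DDIM sampling back down from the recovered noise to a clean latent."""
    x = x_T
    for k in range(len(levels) - 1, 0, -1):
        x = ddim_step(x, levels[k], levels[k - 1], eps_model)
    return x


# 100 DDIM steps over a 1000-step schedule; 1.0 marks the clean (noise-free) latent.
levels = [1.0] + linear_alpha_bars()[::10]

# Hypothetical input loop: one scalar "latent" per frame instead of a full latent tensor.
frames = [0.2, 0.5, 0.9, 1.4, 1.1, 0.6]

source = gaussian_eps(mu=0.0, s=1.0)  # conditioning used while unsampling
style = gaussian_eps(mu=3.0, s=0.5)   # different conditioning used while resampling

noises = [unsample(f, levels, source) for f in frames]  # frame-by-frame noise
recon = [resample(n, levels, source) for n in noises]   # same conditioning
styled = [resample(n, levels, style) for n in noises]   # new "style"

for f, r, y in zip(frames, recon, styled):
    print(f"frame {f:.2f} -> recon {r:.3f}, styled {y:.3f}")
```

Because each frame's noise is recovered from that frame rather than drawn at random, resampling under the same conditioning returns each frame close to its input (DDIM inversion is only approximate), and resampling under a different conditioning still keeps the frames in the same order. That is one way to read the consistency described above; a real workflow would run the same loop on full latent tensors with the actual U-Net.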

Related videos:

AnimateDiff Tutorial: Turn Videos to A.I Animation | IPAdapter x ComfyUI

Video2Video in ComfyUI [AnimateDiff] - V

Video2Video - Stable Diffusion in ComfyUI : AnimateDiff + IPAdapter + ControlNet.

Video2VIdeo - ComfyUI + ControlNet + IPAdapter + AnimateDIff

How to AI Animate. AnimateDiff in ComfyUI Tutorial.

Animatediff w Multi ControlNet | StableDiffusion

10 Insane New ComfyUI Workflows To Use in 2025

Video2Video in ComfyUI [AnimateDiff] - V [REUPLOAD]

Complete Workflow Breakdown in ComfyUI Animatediff video to video

Create Morphing AI Animations | AnimateDiff: IPIV’s Morph img2vid Tutorial

AI Animation: Make Anything Dance | AnimateDiff + ComfyUI

ComfyUI Video2Video Workflow - AI Animation Using Segment And Unsampling

AnimateDiff SDXL Unsampling - Effective stylzed video to video workflow in ComfyUI

AnimateDiff ControlNet Animation v2.1 [ComfyUI]

ComfyUI Video to Video Animation with Animatediff LCM Lora & LCM Sampler

Easy Image to Video with AnimateDiff (in ComfyUI) #stablediffusion #comfyui #animatediff

Crystal AI #ai #comfyui #animatediff

How to Generate REALISTIC AI Animations | AnimateDiff & ComfyUI Tutorial

CONSISTENT VID2VID WITH ANIMATEDIFF AND COMFYUI

ComfyUI - AnimateDiff - Video2Video - Halloween Liquid

AnimateDiff ControlNet Animation v1.0 [ComfyUI]

Generate AI Videos With AnimateDiff & ComfyUI | Text to Animation Tutorial

ComfyUI - MimicMotion | Human Motion Video Generation (Img2Vid)