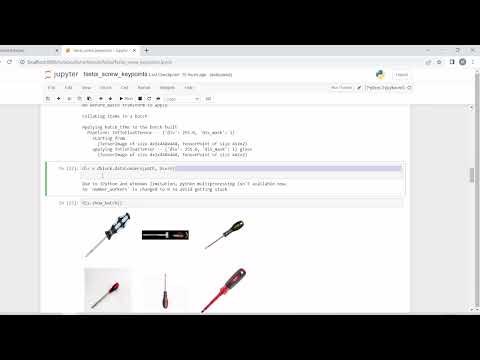

Key point Detection On Custom Dataset Using YOLOv7 | YOLOv7-Pose on Custom Dataset

In this tutorial, I will show you how to use YOLOv7-Pose for keypoint detection on a custom dataset.

Keypoint detection is a computer vision task that involves identifying and localizing specific points of interest, or keypoints, in an image.

Pose models from Detectron2, YOLOv7, and YOLOv8 are pretrained to predict a fixed number of keypoints (e.g., 17 keypoints for a person).

If your custom dataset has a different set or a different number of keypoints, the model must be modified accordingly so that it can recognize and predict them.
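To make the "different number of keypoints" point concrete, here is a minimal sketch in plain Python. It assumes the label layout commonly used by YOLO-style pose repositories (class id, normalized box center and size, then `x y visibility` for each keypoint) and a detection head that predicts 4 box values, 1 objectness score, the class scores, and 3 values per keypoint. The function names `head_width` and `to_pose_label` are hypothetical and are not taken from the video or from any library, so check the exact format against the repository you train with.

```python
from typing import Sequence, Tuple

Keypoint = Tuple[float, float, int]  # (x_pixels, y_pixels, visibility flag)


def head_width(num_classes: int, num_keypoints: int, values_per_keypoint: int = 3) -> int:
    """Values predicted per anchor: 4 box + 1 objectness + classes + keypoint values."""
    return 5 + num_classes + values_per_keypoint * num_keypoints


def to_pose_label(
    class_id: int,
    box_xyxy: Tuple[float, float, float, float],
    keypoints: Sequence[Keypoint],
    img_w: int,
    img_h: int,
) -> str:
    """Build one label line: class cx cy w h, then x y v per keypoint (coordinates normalized to 0..1)."""
    x1, y1, x2, y2 = box_xyxy
    fields = [
        str(class_id),
        f"{(x1 + x2) / 2 / img_w:.6f}",  # box center x
        f"{(y1 + y2) / 2 / img_h:.6f}",  # box center y
        f"{(x2 - x1) / img_w:.6f}",      # box width
        f"{(y2 - y1) / img_h:.6f}",      # box height
    ]
    for x, y, v in keypoints:
        fields += [f"{x / img_w:.6f}", f"{y / img_h:.6f}", str(int(v))]
    return " ".join(fields)


# Hypothetical custom object with 4 keypoints (its corners) in a 200x100 image.
corners = [(50, 20, 2), (150, 20, 2), (150, 80, 1), (50, 80, 0)]
line = to_pose_label(0, (50, 20, 150, 80), corners, img_w=200, img_h=100)

person_width = head_width(num_classes=1, num_keypoints=17)  # 17-keypoint person setup
custom_width = head_width(num_classes=1, num_keypoints=4)   # 4-keypoint custom setup
```

Under these assumptions, moving from 17 to 4 keypoints changes both the length of every label line and the width of the prediction head, which is why the keypoint count has to be updated consistently in the dataset and in the model configuration.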

Key point Detection On Custom Dataset Using YOLOv7 | YOLOv7-Pose on Custom Dataset (0:16:12)

Keypoints Detection on Custom Dataset Using Detectron2 | Detectron2 Keypoint Detection (0:26:32)

Train pose detection Yolov8 on custom data | Keypoint detection | Computer vision tutorial (0:52:26)

fastai keypoints training with custom data (0:05:37)

YOLOv8 - Keypoint Detection | YOLOv8-Pose | YOLOv8 pose estimation (0:06:35)

Yolo Custom Pose Keypoint Detection Tutorial|Medium Blog Review|Link in Description.... (0:01:20)

Create key point annotations in CVAT - Getting Started With CVAT (0:03:12)

Keypoint Detection Algorithms Comparison in AR Image Tracking (0:00:47)

Keypoint detection and description. Discussing motivations and methods (0:21:55)

Pose Estimation with Ultralytics YOLOv8 | Episode 6 (0:03:50)

How to train a YOLO-NAS Pose Estimation Model on a custom dataset step-by-step (0:31:58)

Build an AI/ML Tennis Analysis system with YOLO, PyTorch, and Key Point Extraction (4:41:25)

Neeraj Chopra key point detection with YOLOv7 (0:00:36)

Tennis Shots Identification using YOLOv7 Pose Estimation and LSTM Model (0:00:35)

Unlocking Animal Pose Estimation with YOLOv8: Fine-tuning for Dogs (0:08:11)

Keypoint Detection for Measuring Body Size of Giraffes: Enhancing Accuracy and Precision (0:56:17)

YOLOv8 - Keypoint Detection | YOLOv8-Pose | YOLOv8 pose estimation Urdu (0:06:01)

KeyPoint Detection and Tracking (0:00:20)

Tree detection and keypoint detection (0:00:13)

YOLOV8 Key point Detection Provided by Ultralytics. #yolov8 #yolov5 #ipl #myers #cpl #pose (0:00:22)

An Affordance Keypoint Detection Network for Robot Manipulation (0:01:55)

Smoke keypoint detection - UE4-1 (0:00:24)

Activity recognition using keypoint detection and LSTM model #computervision #activityrecognition (0:01:23)

Keypoint Detection and Tracking (0:01:20)