filmov.tv

How to Do Incremental Data Loading and Data Validation with PySpark and Spark! Spark Basics!

In this video, I'll show you how to run an incremental data loading job with PySpark, and then validate that the uploaded data has the correct shape and size!
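
The description doesn't say which approach the video takes, so here is only a minimal sketch of one common pattern: a high-water-mark incremental load followed by a size and shape check. It uses plain Python lists of dicts as a stand-in for DataFrames so it runs without a Spark cluster. The function names `incremental_load` and `validate_load` and the `updated_at` column are assumptions made for illustration. In PySpark the same steps would typically be a `filter` on the watermark column followed by an append write, with `count()` and `columns` used for the checks.

```python
from datetime import datetime


def incremental_load(source_rows, target_rows, watermark_col="updated_at"):
    """Append only the source rows newer than the newest row already in the target."""
    # High-water mark: latest timestamp already loaded (None on the very first run).
    watermark = max((r[watermark_col] for r in target_rows), default=None)
    new_rows = [
        r for r in source_rows
        if watermark is None or r[watermark_col] > watermark
    ]
    return target_rows + new_rows, new_rows


def validate_load(before, after, new_rows, expected_columns):
    """Check the size (row count) and shape (column set) of the loaded data."""
    size_ok = len(after) == len(before) + len(new_rows)
    shape_ok = all(set(r) == set(expected_columns) for r in after)
    return size_ok and shape_ok


# Hypothetical sample data: three source rows, one of them already loaded.
source = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 2)},
    {"id": 3, "updated_at": datetime(2024, 1, 3)},
]
target = source[:1]  # pretend row 1 was loaded by an earlier run

loaded, delta = incremental_load(source, target)
is_valid = validate_load(target, loaded, delta, ["id", "updated_at"])
```

Running the load a second time against `loaded` picks up nothing new, which is the point of tracking a watermark instead of reloading the full source on every run.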

30 How to do incremental load in SQL server

How to use INCREMENTAL REFRESH for Datasets (PRO) and Dataflows (PREMIUM) in Power BI

Data Load Strategies - Full vs Incremental Load

Azure data engineering | learn incremental load pipeline in adf

Loading Incremental data in Informatica

PySpark | Tutorial-9 | Incremental Data Load | Realtime Use Case | Bigdata Interview Questions

Azure data Engineer project | Incremental data load in Azure Data Factory

Excel Incremental Data Load with Power Query

Databricks Certified Data Engineer Associate Exam Questions Dumps 2024 (48 Real Questions)

Incremental data load in Azure Data Factory

#60.Azure Data Factory - Incremental Data Load using Lookup\Conditional Split

How to Make Incremental Load and Save Time with QVD in Qlik Sense

Avoid the full refresh with Incremental Refresh in Power BI (Premium)

08 Incremental data load in SSIS based on lastupdated or modifieddate | SSIS real time scenarios

Oracle SQL Query for Full Load and Incremental Load

148 How to do incremental load to mysql from sql server using ssis

Microsoft Fabric - Incremental ETL

How to Perform Incremental Data Load using SCD in SSIS

Azure Data Factory - Incremental Data Copy with Parameters

What happens to the incremental data???

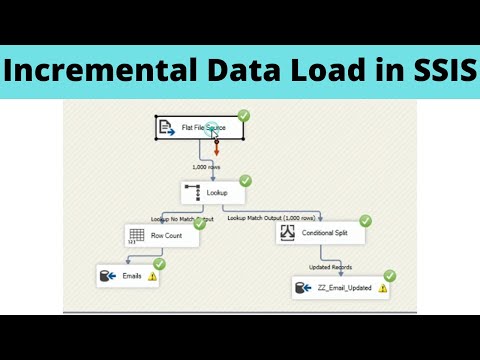

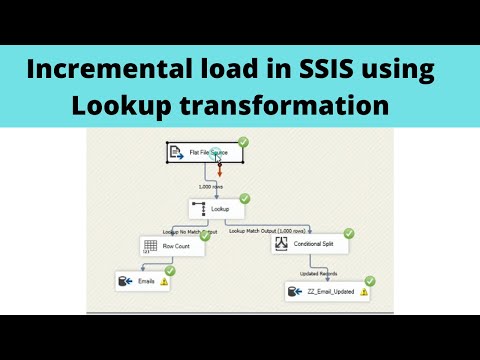

01 Incremental Data Load in SSIS

01 Incremental load in SSIS using Lookup transformation | SSIS real time scenarios

IICS | Incremental data load - simplified version | #informatica

Incremental Load WITHOUT control table In Talend 👉 Delta Load in talend tutorial etl
