filmov.tv

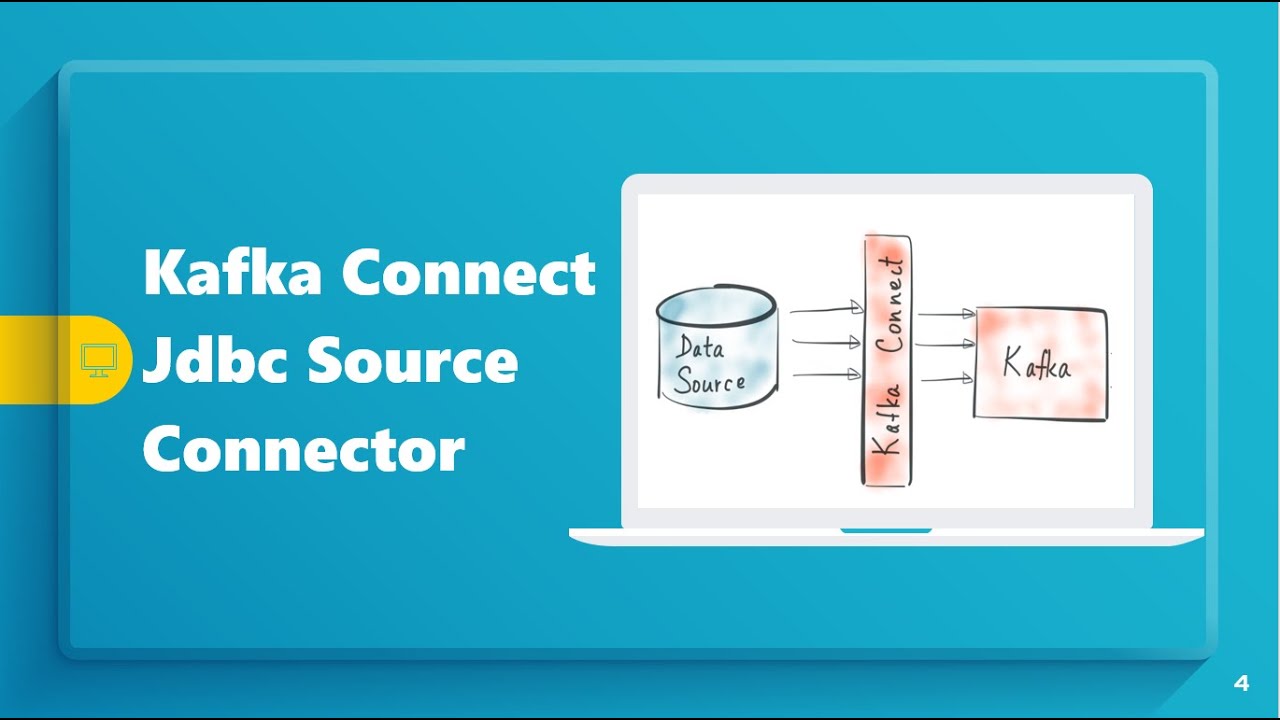

Source MySQL table data to Kafka | Build JDBC Source Connector | Confluent Connector | Kafka Connect

The Kafka Connect JDBC Source connector allows you to import data from any relational database with a JDBC driver into an Apache Kafka® topic.

This video builds a JDBC Source connector that streams MySQL table data into a Kafka topic in near real time. It explains how to create the source connector configuration and then deploy it to Confluent Platform using the Control Center GUI.
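The video deploys the connector through the Control Center GUI. As a rough illustration of what such a configuration contains, here is a minimal Python sketch that builds a JDBC Source connector config and prepares (without sending) a request to the Kafka Connect REST API, which is an alternative to the GUI. It assumes the MySQL JDBC driver is already installed on the Connect worker, that the worker listens on the default REST port 8083, and that the table has an auto-incrementing `id` column. The database URL, credentials, table name, connector name and topic prefix are hypothetical placeholders, not values from the video.

```python
import json
import urllib.request

# Assumption: Kafka Connect worker REST endpoint on its default port.
CONNECT_URL = "http://localhost:8083"


def build_jdbc_source_config(
    mysql_url: str,
    user: str,
    password: str,
    table: str,
    id_column: str,
    topic_prefix: str,
) -> dict:
    """Return a JDBC Source connector config that polls one MySQL table.

    With mode=incrementing the connector remembers the largest value seen
    in `id_column` and on each poll fetches only rows with a larger value,
    so new inserts are picked up (updates to existing rows are not).
    """
    return {
        "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
        "tasks.max": "1",
        "connection.url": mysql_url,
        "connection.user": user,
        "connection.password": password,
        "table.whitelist": table,
        "mode": "incrementing",
        "incrementing.column.name": id_column,
        # Rows from table T are written to the topic named topic_prefix + T.
        "topic.prefix": topic_prefix,
        # How often the table is polled for new rows, in milliseconds.
        "poll.interval.ms": "5000",
    }


def make_create_request(
    connect_url: str, name: str, config: dict
) -> urllib.request.Request:
    """Build (but do not send) a POST /connectors request for Kafka Connect."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        f"{connect_url}/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    cfg = build_jdbc_source_config(
        "jdbc:mysql://localhost:3306/demo",
        "connect_user",
        "secret",
        "customers",
        "id",
        "mysql-",
    )
    req = make_create_request(CONNECT_URL, "mysql-customers-source", cfg)
    print(req.get_method(), req.full_url)
    # To actually create the connector: urllib.request.urlopen(req)
```

With these placeholder values, new rows in `customers` would land in a topic named `mysql-customers`. The same key/value pairs are what you would enter in Control Center's connector form; `incrementing` mode is only one option, and a `timestamp` or `timestamp+incrementing` mode is needed if updated rows must also be captured.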

PHP & MySQL Tutorial: Displaying Database Data in HTML Tables

MySQL - The Basics // Learn SQL in 23 Easy Steps

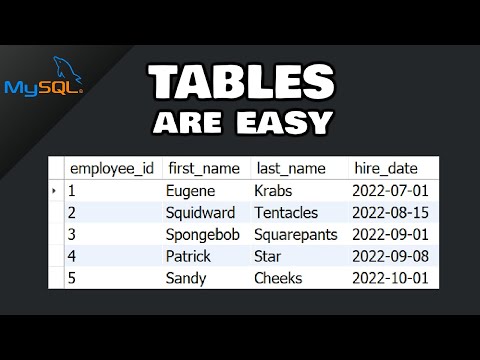

MySQL: How to create a TABLE

Join Different tables of a Database with SQL Join statement on MySQL (2020)

MySQL Workbench 8.0 CE | Import and Export Database

Fill HTML Table From MySQL Database Using PHP | Display MySQL Data in HTML Table

Create Free MySQL Database Online

How To Install PostgreSQL on Ubuntu 24.04 LTS (Linux) (2024)

Connect to MySQL from Excel (Complete Guide)

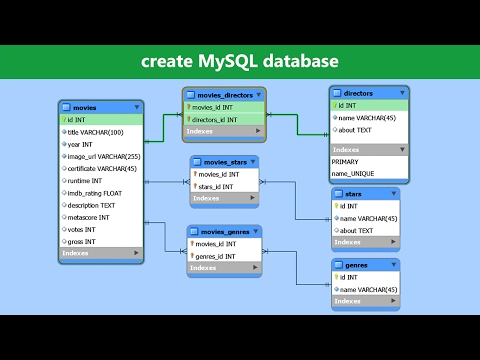

Create MySQL Database - MySQL Workbench Tutorial

How to connect MySQL Database in Eclipse IDE?

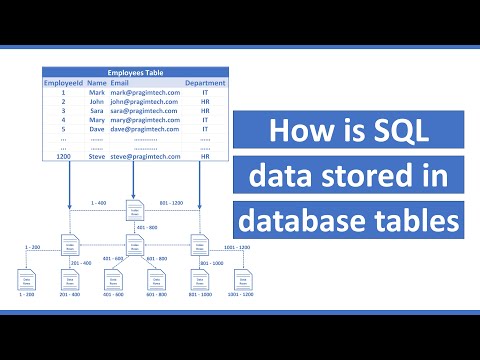

How is data stored in sql database

How to save SQL code in MySQL Workbench

Store HTML Form Data to MySQL Database

Export MySQL Table Data to Excel with PHP - PHP Project

Installing MySQL and Creating Databases | MySQL for Beginners

How to IMPORT Excel file (CSV) to MySQL Workbench.

Data Cleaning in MySQL | Full Project

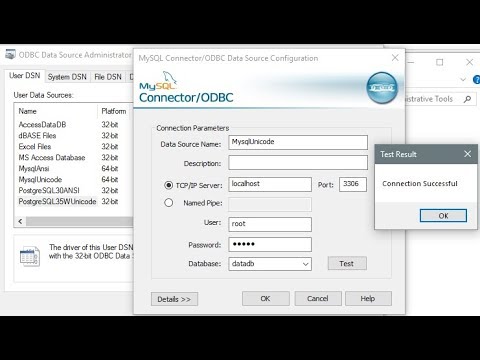

MySQL - Set up a connection to MySQL database using ODBC Data Source Administrator

MYSQL Tutorial: Efficiently Importing Large CSV Files into MySQL Database with LOAD DATA INFILE

Connect Java with Mysql Database | Java JDBC | Java Database connectivity | JDBC MySql | ArjunCodes

MySQL - How to import Database into MySQL Workbench (8.0.22)

MySQL Source Data for SSIS
