Kafka Consumer Offsets Explained

This video looks at Kafka consumer offsets and explains how Apache Kafka manages the offsets of different partitions.

The following topics will be discussed:

- What are consumer offsets?

- How are they maintained?

- Message reprocessing and missed-message scenarios.

- Types of offset commits.

The following points will be covered:

- What consumer offsets are.

- Consumer offsets are stored in a special topic, __consumer_offsets.

- It is the consumer's responsibility to produce offset messages to this topic.

- After a rebalance, the new consumer picks up the current offset from this topic.

- Depending on when the offset is committed, messages can be reprocessed or missed.
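The reprocess and miss scenarios from the points above can be sketched with a small in-memory simulation. This is a minimal illustration, not the Kafka client API: `OffsetStore` is a hypothetical stand-in for the __consumer_offsets topic, and `consume`, `run_with_restart`, the group name `demo-group`, and the message values are all made up for the example. The one Kafka convention it keeps is that the committed offset is the offset of the next message to read.

```python
from typing import Dict, List, Optional, Tuple


class OffsetStore:
    """Hypothetical stand-in for __consumer_offsets: one committed offset per (group, partition)."""

    def __init__(self) -> None:
        self._committed: Dict[Tuple[str, int], int] = {}

    def commit(self, group: str, partition: int, next_offset: int) -> None:
        # The committed value is the offset of the NEXT message to read.
        self._committed[(group, partition)] = next_offset

    def fetch(self, group: str, partition: int) -> int:
        # A group with no committed offset starts from 0 in this sketch.
        return self._committed.get((group, partition), 0)


def consume(log: List[str], store: OffsetStore, group: str, partition: int,
            commit_first: bool, crash_at: Optional[int] = None) -> List[str]:
    """Read the log from the committed offset; optionally crash between the two steps."""
    processed: List[str] = []
    offset = store.fetch(group, partition)
    while offset < len(log):
        steps = ["commit", "process"] if commit_first else ["process", "commit"]
        for i, step in enumerate(steps):
            if step == "commit":
                store.commit(group, partition, offset + 1)
            else:
                processed.append(log[offset])
            # Simulated crash after the first step, before the second one runs.
            if i == 0 and offset == crash_at:
                return processed
        offset += 1
    return processed


def run_with_restart(log: List[str], commit_first: bool, crash_at: int) -> List[str]:
    """One consumer crashes; a replacement resumes from the stored offset."""
    store = OffsetStore()
    first = consume(log, store, "demo-group", 0, commit_first, crash_at)
    second = consume(log, store, "demo-group", 0, commit_first)
    return first + second


LOG = ["m0", "m1", "m2", "m3"]
missed = run_with_restart(LOG, commit_first=True, crash_at=1)
reprocessed = run_with_restart(LOG, commit_first=False, crash_at=1)
print(missed)
print(reprocessed)
```

Committing before processing loses the message that was in flight when the consumer crashed, while committing after processing causes that message to be handled twice by the replacement consumer.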

The video also covers the types of commits:

- Auto commit

- Manual commit

- Sync commit

- Async commit
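As a rough sketch of how the choice between auto and manual commit shows up in consumer configuration, the helper below builds a settings dictionary. The function `consumer_config`, the group names, and the broker address `localhost:9092` are assumptions made for illustration; the keys `bootstrap.servers`, `group.id`, `enable.auto.commit`, and `auto.commit.interval.ms` are standard Kafka consumer settings. The sync and async variants are not configuration: with manual commit, the application calls them itself (in the Java client, `commitSync()` and `commitAsync()`).

```python
from typing import Dict, Union

ConfigValue = Union[str, int, bool]


def consumer_config(group_id: str, auto_commit: bool,
                    bootstrap_servers: str = "localhost:9092",
                    auto_commit_interval_ms: int = 5000) -> Dict[str, ConfigValue]:
    """Build a consumer settings dict for either commit mode (hypothetical helper)."""
    config: Dict[str, ConfigValue] = {
        "bootstrap.servers": bootstrap_servers,  # assumed local broker address
        "group.id": group_id,
        # True: the client commits offsets periodically in the background.
        # False: the application must commit offsets explicitly.
        "enable.auto.commit": auto_commit,
    }
    if auto_commit:
        # The interval only matters when auto commit is enabled.
        config["auto.commit.interval.ms"] = auto_commit_interval_ms
    return config


auto_cfg = consumer_config("orders-auto", auto_commit=True)
manual_cfg = consumer_config("orders-manual", auto_commit=False)

# With manual_cfg, the application picks the commit style per call:
#   sync commit:  blocks until the broker acknowledges the commit
#   async commit: returns immediately; failures surface in a callback, if one is given
print(auto_cfg)
print(manual_cfg)
```

A sync commit trades throughput for certainty that the offset was stored, while an async commit keeps the poll loop moving at the cost of possibly losing a commit without retry.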

If you like the video, please like, share, and subscribe.

Kafka Topics, Partitions and Offsets Explained

How do Kafka Consumer Groups and Consumer Offsets work in Apache Kafka?

Kafka Consumer Offsets Explained

Topics, Partitions and Offsets: Apache Kafka Tutorial #2

Apache Kafka 101: Consumers (2023)

Kafka Consumer and Consumer Groups Explained

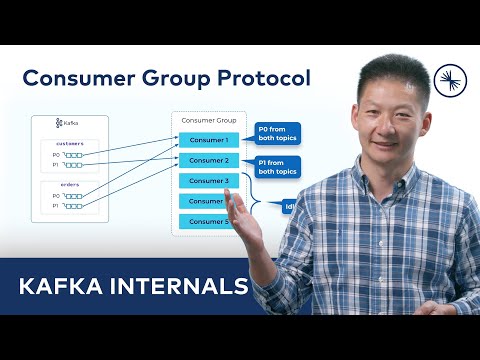

Apache Kafka® Consumers and Consumer Group Protocol

Kafka Offsets Committed vs Current vs Earliest vs Latest Differences | Kafka Interview Questions

What is a Kafka Consumer and How does it work?

Apache Kafka 101: Partitioning (2023)

Lecture 8 - Consumer Offsets - [ Kafka for Beginners ]

Kafka Tutorial Offset Management

Apache Kafka in 6 minutes

08 Kafka Elasticsearch Consumer and Advanced Configurations 014 Consumer offsets reset behavior

Kafka Consumer Deep Dive - Apache Kafka Tutorial For Beginners

Understanding Consumer Offset, Consumer Groups, and Message Consumption in Apache Kafka

02 Kafka Theory 007 Consumer offsets and delivery semantics

Debug Kafka Consumers: Partitions & Offsets

08 Kafka Elasticsearch Consumer and Advanced Configurations 011 Consumer offset commit strategies

Read Kafka Messages from a Specific Offset & Partition in the Console Consumer | Kafka Tutorials

2.APACHE KAFKA : What is Offset in Apache Kafka ||Kafka Tutorial || APACHE KAFKA||TOPIC || OFFSET

How to manage Offset in Kafka

5.3 Complete Kafka Training - What are Kafka Offsets [ Explained ]

Kafka Consumer Offset Commit Interview Questions | Kafka Interview Questions
