Deploy a custom model to Vertex AI

Steps to import a Keras model trained in Colab into Vertex AI, deploy the model to an endpoint, and validate the deployment.

Thanks to Codence for the music.
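The validation step mentioned in the description can be illustrated with a minimal sketch that only builds an online prediction request for a deployed endpoint and sends nothing over the network. The project ID, region, endpoint ID, and input values below are hypothetical placeholders, and the helper name `build_predict_request` is an assumption for illustration. The shape of each instance depends on the input signature of the Keras model, which the description does not specify. Authentication (an OAuth bearer token in the `Authorization` header) is also left out.

```python
import json


def build_predict_request(project, region, endpoint_id, instances):
    """Return (url, body) for a Vertex AI online prediction REST call.

    Nothing is sent; the caller is expected to POST `body` to `url`
    with a valid bearer token, which this sketch does not obtain.
    """
    url = (
        f"https://{region}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{region}/"
        f"endpoints/{endpoint_id}:predict"
    )
    # The predict method expects a JSON object with an "instances" list.
    body = json.dumps({"instances": instances})
    return url, body


# Hypothetical values: replace with your own project, region, and endpoint.
url, body = build_predict_request(
    project="my-project",
    region="us-central1",
    endpoint_id="1234567890",
    instances=[[0.1, 0.2, 0.3, 0.4]],  # assumed input shape
)
print(url)
print(body)
```

A successful response to such a request would carry a `predictions` list with one entry per instance, which is a simple way to confirm that the endpoint is serving the imported model.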

Deploy a custom Machine Learning model to mobile

Deploy a custom model to Vertex AI

The Best Way to Deploy AI Models (Inference Endpoints)

Deploy ML model in 10 minutes. Explained

Compile and deploy a custom deep learning model

How to Build and Deploy a Custom MCP Server in 10 Minutes

Build a custom ML model with Vertex AI

Katonic Deploy - How to Deploy a Custom Model | Katonic AI

How to Deploy Machine Learning Models (ft. Runway)

Deploy a custom model to vertex ai

Deploy YOLOv8 via Hosted Inference API

Deploy DeepSeek-R1 models with Amazon Bedrock Custom Model Import | Amazon Web Services

How I deploy serverless containers for free

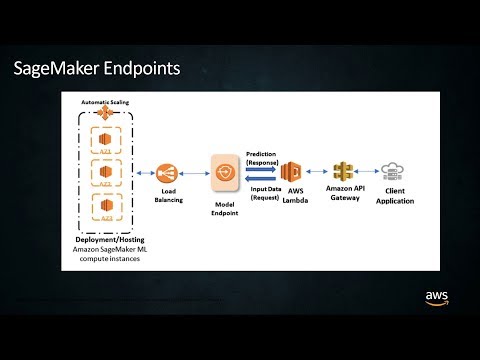

Deploy Your ML Models to Production at Scale with Amazon SageMaker

On-device object detection: Train and deploy a custom TensorFlow Lite model

Build and Deploy a Machine Learning App in 2 Minutes

Let's deploy a custom AI model container as an autoscaling API on your private cloud in 10 minu...

How to Deploy a Custom Model to your OpenCV AI Kit (OAK) | OpenCV + Roboflow Course 6/6

Build and deploy custom object detection model with TensorFlow Lite | Workshop

Portable Prediction Server | Deploy a Custom Model in an External Prediction Environment with PPS

deploy a custom machine learning model to mobile

Train a custom object detection model using your data

How to deploy your custom tensorflow model to the web (Part 1)

The EASIEST Way to Deploy AI Models from Hugging Face (No Code)
