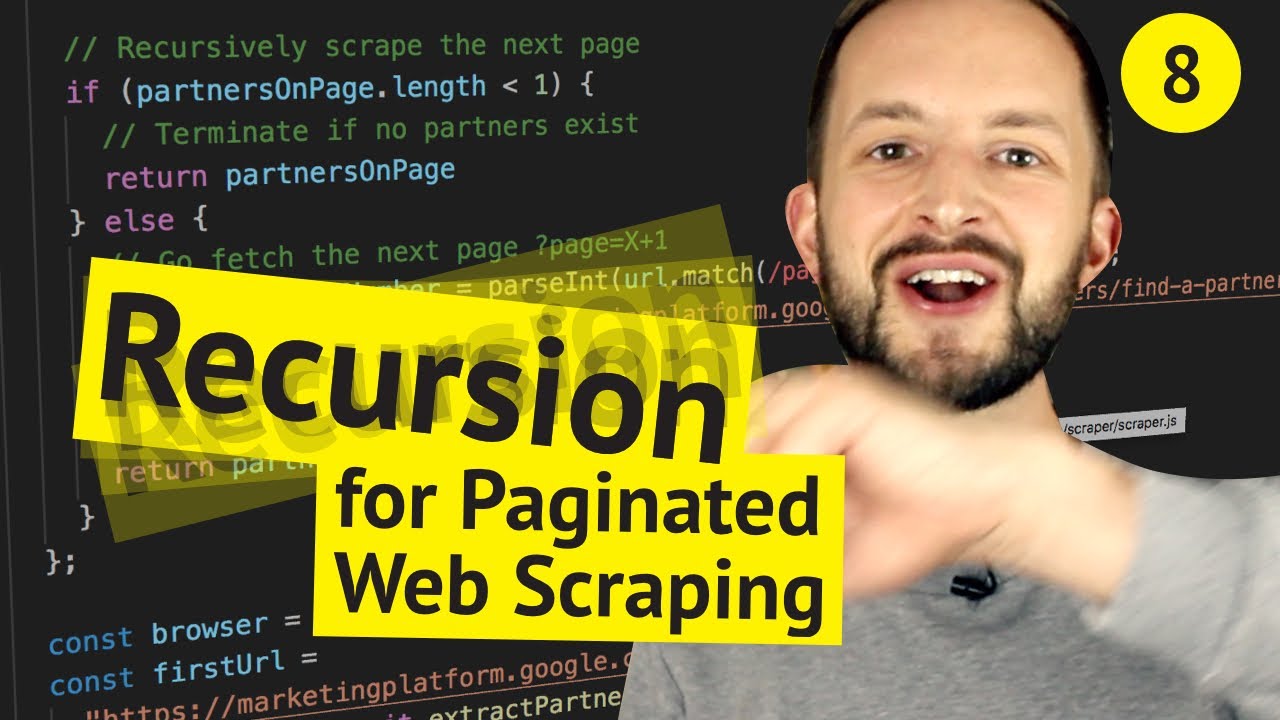

Recursion ➰ for Paginated Web Scraping

We figure out how to handle the paginated search results in our web scraper. RECURSION is our tool, and it's not as difficult as you might think!
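For a rough idea of how recursion handles pagination, here is a minimal sketch. It is not the code from the video: `extractNextPageNumber`, `scrapeAllPages`, the `fetchPage` callback, and the `?page=N` URL shape are all assumptions made for illustration. In a real scraper, `fetchPage` would wrap the Puppeteer calls that load a page and read its results.

```javascript
// Hypothetical helper: pull the next page number out of a "next" link's href.
// Assumes URLs shaped like "/search?page=3"; the real site may differ.
function extractNextPageNumber(href) {
  const match = /[?&]page=(\d+)/.exec(href || "");
  return match ? Number(match[1]) : null;
}

// Recursively collect the items from every page.
// fetchPage is an assumed callback: given a page number, it resolves to
// { items: [...], nextHref: string or null }.
async function scrapeAllPages(fetchPage, pageNumber = 1) {
  const { items, nextHref } = await fetchPage(pageNumber);
  const nextPageNumber = extractNextPageNumber(nextHref);

  // Base case: no "next" link, so this is the last page.
  if (nextPageNumber === null) {
    return items;
  }

  // Recursive case: this page's items plus everything from the later pages.
  const rest = await scrapeAllPages(fetchPage, nextPageNumber);
  return items.concat(rest);
}

// Usage with made-up in-memory pages standing in for a real site.
const fakePages = {
  1: { items: ["a", "b"], nextHref: "/search?page=2" },
  2: { items: ["c", "d"], nextHref: "/search?page=3" },
  3: { items: ["e"], nextHref: null },
};

scrapeAllPages(async (n) => fakePages[n]).then((all) => {
  console.log(all); // the items from all three pages, in page order
});
```

Passing the page loader in as a callback keeps the recursion itself free of browser details, so the same function can be tried out against fake data before pointing it at a live site.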

🗿 MILESTONES

⏯ 00:12 Fika 🍪

⏯ 13:10 Extracting the next page number with regex

⏯ 16:50 Encounter with prettier... 🌋

⏯ 18:39 ➰ Recap

⏯ 20:15 TIME FOR RECURSION 😎

⏯ 29:00 Quick Google rant 🌋

⏯ 29:23 ➰➰ Rerecap by Commenting the Code

See the previous episode, where we explain Puppeteer and how to find the data to scrape

The code used in this video is on GitHub

Puppeteer - Headless Chrome browser for scraping (instead of PhantomJS)

The editor is called Visual Studio Code and is free. Look for the Live Share extension to share your environment with friends.

DevTips is a weekly show for YOU who want to be inspired 👍 and learn 🖖 about programming. Hosted by David and MPJ - two notorious bug generators 💖 and teachers 🤗. Exploring code together and learning programming along the way - yay!

DevTips has a sister channel called Fun Fun Function, check it out!

#recursion #webscraping #nodejs
