How to Build a Delta Live Table Pipeline in Python

Delta Live Tables (DLT) is a new and exciting way to develop ETL pipelines. In this video, I'll show you how to build a Delta Live Tables pipeline in Python and explain the gotchas you need to know about.

Join the Patreon Community and watch this video without ads!

Useful Links:

What is Delta Live Tables?

Tutorial on Developing a DLT Pipeline with Python

Python DLT Notebook

DLT Costs

Python Delta Live Table Language Reference

See my Pre Data Lakehouse training series at:

Building with a Delta-v Budget - KSP Beginner's Tutorial

How to Build a Delta Live Table Pipeline in Python

You'll fall in love with the Dyke Delta

How To Increase Delta-V Performance [Kerbal Space Program]

THE DELTA LOOP / MULTI BAND DELTA LOOP / SINGLE BAND DELTA LOOP

Delta Force Multiplayer Beta/Demo - Top 5 Weapons (+ATTACHMENTS)

Delta Force | *BEST* Settings for FPS + Performance

Delta Live Tables Demo: Modern software engineering for ETL processing

HOW MUCH WE BUILT THIS 4 BEDROOMS BUNGALOW IN DELTA STATE NIGERIA FOR OUR CLIENT

How To Build an OPEN DELTA Bank #lineman #journeyman #linelife #linejunk #linemanlife #hilineacademy

How to build Delta IV Heavy Rocket In Space Agency

Delta Force Best Settings Guide for High FPS, Performance, Visibility & More

How To Build Delta IV Heavy | In Spaceflight Simulator | Delta IV |

How to Build a Foamboard Delta Wing from Scratch (Timelapse)

Delta Tables 101: What is a delta table? And how to build one?

CB Base Antenna, Full wave Delta Loop

How To Build A Delta-7 Star Fighter (With Optional Hyperdrive): Starfield Ship Build Guide

Delta Wing Build Along Instructions #2

Delta Live Tables A to Z: Best Practices for Modern Data Pipelines

Top 5 META Weapons in Delta Force

[BETTER VERSION] In the making: Delta Airlines' first A350

Amazing Cheap Multiband Delta-Loop Horizontal Antenna

Building Delta - II & Mars Rover in Spaceflight Simulator

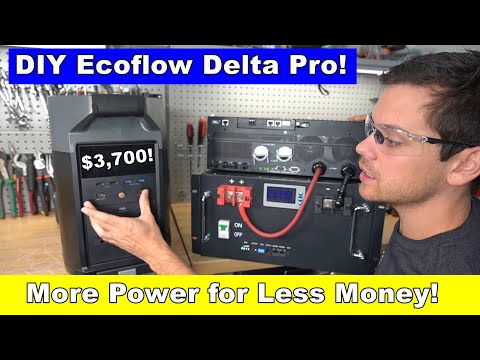

Budget DIY Ecoflow Delta Pro! More power for less money
