filmov

tv

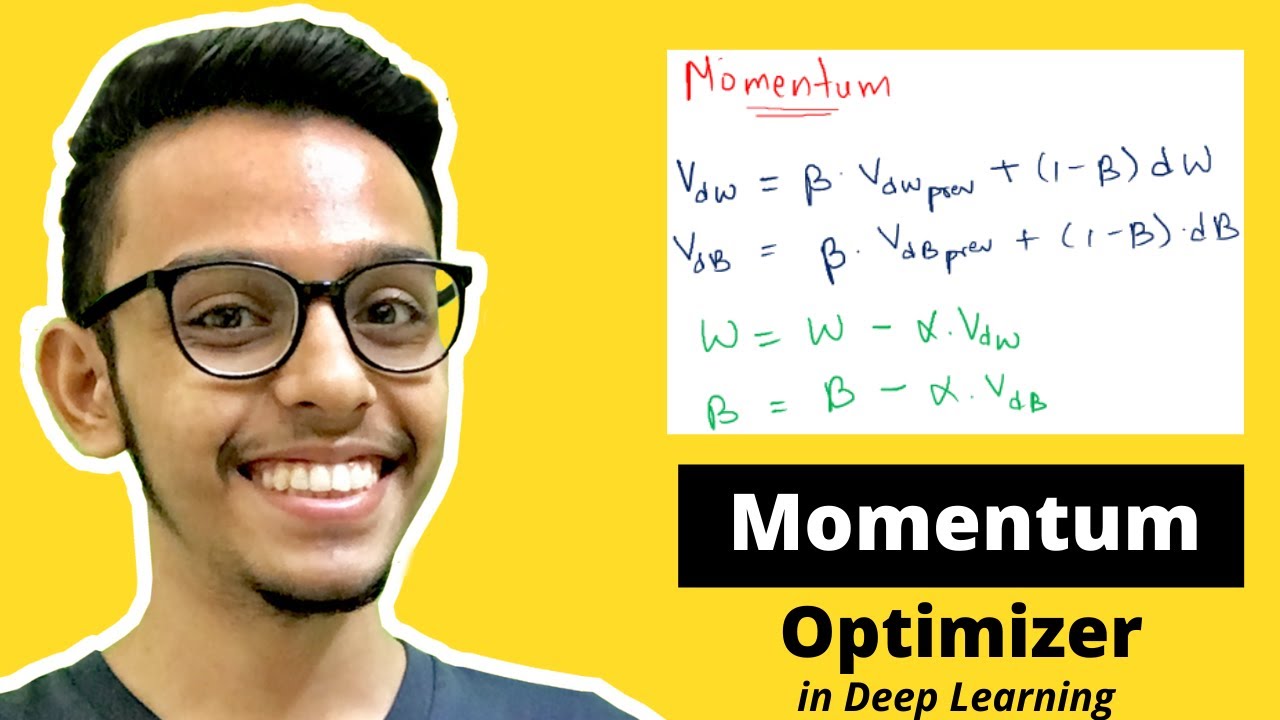

Momentum Optimizer in Deep Learning | Explained in Detail


In this video, we will understand in detail what the Momentum Optimizer in Deep Learning is.

Momentum Optimizer in Deep Learning is a technique that reduces the time taken to train a model.

In mini-batch gradient descent, the path that learning follows is zig-zag rather than straight, so some time is wasted moving back and forth. The Momentum Optimizer smooths out the zig-zag path and makes it much straighter, thus reducing the time taken to train the model.

The Momentum Optimizer uses an Exponentially Weighted Moving Average of the gradients, which averages out the vertical movement so that the net movement is mostly in the horizontal direction. Thus, the zig-zag path becomes straighter.
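The smoothing effect can be checked on a toy problem. The sketch below is not taken from the video: the loss f(x, y) = 0.5 * (x² + 25 * y²), the learning rate 0.07, β = 0.9, and the helper names `grad`, `run`, and `vertical_flips` are all assumptions chosen for illustration. It counts how often the step along the steep (vertical) axis reverses direction, with and without the moving average.

```python
import numpy as np

def grad(w):
    # Gradient of the assumed toy loss f(x, y) = 0.5 * (x**2 + 25 * y**2).
    # x is the shallow "horizontal" axis, y the steep "vertical" axis.
    return np.array([w[0], 25.0 * w[1]])

def run(beta, lr=0.07, steps=50):
    # beta = 0.0 reduces to plain gradient descent;
    # beta > 0 steps along an exponentially weighted average of gradients.
    w = np.array([10.0, 1.0])   # arbitrary starting point
    v = np.zeros(2)             # running average of gradients
    path = [w.copy()]
    for _ in range(steps):
        g = grad(w)
        v = beta * v + (1.0 - beta) * g
        w = w - lr * v
        path.append(w.copy())
    return np.array(path)

def vertical_flips(path):
    # Number of times the vertical step changes sign (one zig or zag each).
    dy = np.diff(path[:, 1])
    return int(np.sum(dy[1:] * dy[:-1] < 0))

plain_flips = vertical_flips(run(beta=0.0))
momentum_flips = vertical_flips(run(beta=0.9))
print("vertical reversals, plain GD:", plain_flips)
print("vertical reversals, momentum:", momentum_flips)
```

This only illustrates the straightening of the path. Whether training actually finishes sooner depends on how the learning rate and β are chosen, which the sketch does not explore.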

In this video, we will also understand what an Exponentially Weighted Moving Average is, so the video is a full, in-depth explanation of the Momentum Optimizer in Deep Learning.
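For reference, an exponentially weighted moving average can be sketched in a few lines. This is a minimal illustration, not the video's own code: the function name `ewma`, the default β = 0.9, and the synthetic noisy signal are assumptions.

```python
import numpy as np

def ewma(values, beta=0.9):
    # avg_t = beta * avg_{t-1} + (1 - beta) * x_t, starting from avg_0 = 0.
    # Older values are down-weighted by a factor of beta at every step.
    avg = 0.0
    out = []
    for x in values:
        avg = beta * avg + (1.0 - beta) * x
        out.append(avg)
    return np.array(out)

# Hypothetical noisy signal fluctuating around 1.0.
rng = np.random.default_rng(0)
noisy = 1.0 + rng.normal(0.0, 1.0, size=200)
smooth = ewma(noisy)

print("std of raw signal:     ", np.std(noisy[50:]))
print("std of smoothed signal:", np.std(smooth[50:]))
```

Because the average starts at zero, the first few outputs are biased toward zero; this sketch applies no bias correction. A larger β averages over more past values and gives a smoother but slower-reacting curve.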

➖➖➖➖➖➖➖➖➖➖➖➖➖➖➖


Timestamp:

0:00 Agenda

1:00 Why do we need Momentum?

2:53 Exponentially Weighted Moving Average

8:29 Momentum in Mini Batch Gradient Descent

9:50 Why does Momentum work?

➖➖➖➖➖➖➖➖➖➖➖➖➖➖➖

