filmov

tv

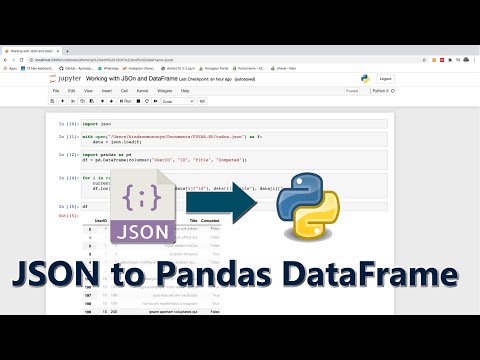

Transforming JSON files into DataFrames: A Guide to Handling Multiple Entities in Python


Learn how to effectively convert multiple JSON entities into pandas DataFrames, overcoming common errors for large-scale data processing.

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Multipe entities in JSON to Dataframe

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---


Working with JSON data in Python can lead to unexpected challenges when a single file holds multiple entities. A common example is converting JSON files into pandas DataFrames when each file contains several different top-level entities.

In this guide, we convert such JSON files into DataFrames with pandas, using a per-file approach that can be repeated across very large collections of files.

The Problem at Hand

[[See Video to Reveal this Text or Code Snippet]]

This issue typically has two causes. First, pandas' JSON reader parses numbers into fixed-width 64-bit integer types, so an integer outside that range cannot be represented and parsing fails. Second, the file mixes dictionaries and lists at the top level, and such a structure has no single unambiguous mapping onto one table, so pandas cannot decide how to shape the DataFrame.
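Both causes can be illustrated with a small sketch. The document below is hypothetical (only the top-level keys block, transactions, and logs come from this guide), and it does not reproduce the exact error from the original question; it shows that the standard json module keeps an oversized integer exact, and that handing the whole mixed dict to the DataFrame constructor is rejected with a ValueError.

```python
import json

import pandas as pd

# Hypothetical document: a dict-valued key next to two list-valued keys,
# plus an integer larger than the unsigned 64-bit maximum (2**64 - 1).
raw = """
{
  "block": {"number": 1, "hash": "0xabc"},
  "transactions": [
    {"hash": "0x01", "value": 123456789012345678901234567890},
    {"hash": "0x02", "value": 5}
  ],
  "logs": [
    {"tx_hash": "0x01", "index": 0},
    {"tx_hash": "0x01", "index": 1}
  ]
}
"""

data = json.loads(raw)

# The standard library parses JSON integers into Python ints, which have
# arbitrary precision, so the large value survives unchanged.
big_value = data["transactions"][0]["value"]
print(big_value > 2**64)  # True

# Passing the whole dict asks pandas to make each top-level key a column;
# a dict mixed with lists has no unambiguous row layout, so pandas refuses.
try:
    pd.DataFrame(data)
    constructor_error = None
except ValueError as exc:
    constructor_error = exc
print(type(constructor_error).__name__)  # ValueError
```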

A Step-by-Step Solution

1. Read the JSON File as a Python Dictionary

To gain tighter control over the transformation, first read the JSON file into a Python dictionary with the standard json module. The json module parses JSON integers into Python's arbitrary-precision int, so large values load without overflow, and you can then extract exactly the parts each DataFrame needs.

Here's how you can do it:

[[See Video to Reveal this Text or Code Snippet]]
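A minimal sketch of this step using only the standard library. The helper name `load_json_file`, the file name `block_1.json`, and the sample contents are hypothetical; a temporary directory is used so the example runs on its own.

```python
import json
import tempfile
from pathlib import Path


def load_json_file(path):
    # json.load turns JSON objects into dicts, arrays into lists, and
    # integers into arbitrary-precision Python ints (no 64-bit overflow).
    with Path(path).open("r", encoding="utf-8") as fh:
        return json.load(fh)


# Write a small hypothetical file, then read it back as a dict.
sample = (
    '{"block": {"number": 1}, '
    '"transactions": [{"value": 123456789012345678901234567890}], '
    '"logs": []}'
)
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "block_1.json"
    path.write_text(sample, encoding="utf-8")
    data = load_json_file(path)

print(sorted(data))  # ['block', 'logs', 'transactions']
print(data["transactions"][0]["value"])  # 123456789012345678901234567890
```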

2. Create DataFrames from Individual Entities

With your JSON data successfully loaded into a dictionary, the next step is to craft separate DataFrames for each top-level key (block, transactions, logs, etc.). This is where you can specify exactly what data you want in each DataFrame.

Here's an example of how to do this:

[[See Video to Reveal this Text or Code Snippet]]
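One way to build a DataFrame per top-level key is sketched below. The field names inside each entity are hypothetical, as is adding a `block_number` column to link child rows back to their block; `pd.json_normalize` is used for the list-valued keys because it also flattens any nested dicts into dotted column names.

```python
import pandas as pd

# Hypothetical parsed document (in practice, the dict returned by json.load).
data = {
    "block": {"number": 1, "hash": "0xabc", "miner": "0xdef"},
    "transactions": [
        {"hash": "0x01", "from": "0xa", "value": 1000},
        {"hash": "0x02", "from": "0xb", "value": 5},
    ],
    "logs": [
        {"tx_hash": "0x01", "index": 0, "topics": ["t0", "t1"]},
        {"tx_hash": "0x01", "index": 1, "topics": ["t2"]},
    ],
}

# A single dict becomes a one-row DataFrame.
block_df = pd.DataFrame([data["block"]])

# A list of dicts becomes one row per element; list values such as
# "topics" are kept as-is in an object column.
transactions_df = pd.json_normalize(data["transactions"])
logs_df = pd.json_normalize(data["logs"])

# Tag child rows with the parent block number so the tables can be joined.
block_number = data["block"]["number"]
transactions_df["block_number"] = block_number
logs_df["block_number"] = block_number

print(block_df.shape)  # (1, 3)
print(transactions_df.shape)  # (2, 4)
```

Keeping one DataFrame per entity, rather than forcing everything into a single table, avoids the ambiguous mixed structure entirely.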

3. Handle Special Cases and Data Types

Large numbers and other special values may need explicit handling once they reach a DataFrame; for instance, you may need to convert certain columns to a different type depending on your analysis. Here are some useful tips:

Handling Large Numbers: If some integers exceed the range of pandas' 64-bit integer dtypes, consider casting them to strings before building the DataFrame.

Dealing with NaN values: Fields missing from some records show up as NaN. Use pandas methods such as fillna() or dropna() to fill or remove them.

DataFrame Confirmation: After creating your DataFrames, it's a good practice to view their shapes and data types:

[[See Video to Reveal this Text or Code Snippet]]
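The three tips can be sketched together as follows. The records, the field names (`hash`, `value`, `gas`), the `BIG_NUMBER_FIELDS` set, and the helper `stringify_fields` are all hypothetical. The sketch casts a known big-number field to strings before the DataFrame is built, fills a missing field with 0 (one possible policy, not the only one), and then prints the shape and dtypes.

```python
import pandas as pd

# Hypothetical records: "value" holds an integer beyond the int64 range
# (2**63 - 1), and the second record has no "gas" field.
transactions = [
    {"hash": "0x01", "value": 123456789012345678901234567890, "gas": 21000},
    {"hash": "0x02", "value": 5},
]

BIG_NUMBER_FIELDS = {"value"}  # hypothetical: fields known to hold huge ints


def stringify_fields(record, fields):
    """Return a copy of record with the given fields converted to str."""
    return {
        key: str(val) if key in fields and val is not None else val
        for key, val in record.items()
    }


df = pd.DataFrame([stringify_fields(r, BIG_NUMBER_FIELDS) for r in transactions])

# The missing "gas" becomes NaN (making the column float64); fill it,
# then restore an integer dtype.
df["gas"] = df["gas"].fillna(0).astype("int64")

# Confirm what was built before moving on.
print(df.shape)  # (2, 3)
print(df.dtypes)

# Exact arithmetic is still possible by converting back to Python ints.
total_value = sum(int(v) for v in df["value"])
```

Storing the big values as strings keeps them exact; converting back with `int()` only where arithmetic is needed avoids silent rounding through float64.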

Conclusion

By following this structured approach to transforming JSON data into pandas DataFrames, you can manage multiple entities while avoiding common pitfalls such as integer size limits and structural ambiguity. Because each file is processed independently, the same steps can be repeated across large collections of files.
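Applying the per-file steps to a whole directory could look like the sketch below. The directory layout, the `*.json` naming, the sample documents, and the helpers `rows_from_document` and `load_directory` are hypothetical. Rows are gathered in plain lists and each DataFrame is built once at the end, which is cheaper than concatenating inside the loop; for truly large collections you would more likely write results out in batches or process files in parallel.

```python
import json
import tempfile
from pathlib import Path

import pandas as pd


def rows_from_document(data):
    """Split one parsed document into per-entity lists of row dicts."""
    number = data["block"]["number"]
    return {
        "block": [data["block"]],
        "transactions": [dict(tx, block_number=number) for tx in data["transactions"]],
        "logs": [dict(log, block_number=number) for log in data["logs"]],
    }


def load_directory(directory):
    """Parse every *.json file once and build one DataFrame per entity."""
    rows = {"block": [], "transactions": [], "logs": []}
    for path in sorted(Path(directory).glob("*.json")):
        with path.open(encoding="utf-8") as fh:
            parts = rows_from_document(json.load(fh))
        for key, records in parts.items():
            rows[key].extend(records)
    return {key: pd.DataFrame(records) for key, records in rows.items()}


# Usage with two small hypothetical files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    for n in (1, 2):
        doc = {
            "block": {"number": n, "hash": f"0x{n:02x}"},
            "transactions": [{"hash": f"0xt{n}", "value": n * 10}],
            "logs": [],
        }
        Path(tmp, f"block_{n}.json").write_text(json.dumps(doc), encoding="utf-8")
    tables = load_directory(tmp)

print(tables["transactions"])
```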

With these tools at your disposal, you can process large numbers of JSON files and focus on what really matters: the insights hidden within your data. Happy coding!
