filmov

tv

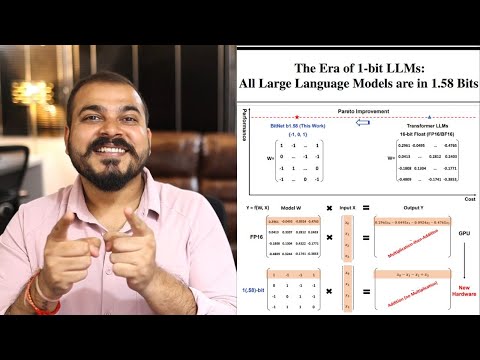

The Era of 1-bit LLMs by Microsoft | AI Paper Explained

Показать описание

In this video we dive into a recent research paper by Microsoft: "The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits".

This paper introduce an interesting and exciting architecture for large language models, called BitNet b1.58, which significantly reduces LLMs memory consumption, and speeds-up LLMs inference latency. All of that, while showing promising results, that do not fall from a comparable LLaMA model!

Large language models quantization is already tackling the same problem, and we'll explain the benefits of BitNet b1.58 comparing to common quantization techniques.

BitNet b1.58 is an improvement for the BitNet model presented few months ago.

-----------------------------------------------------------------------------------------------

👍 Please like & subscribe if you enjoy this content

-----------------------------------------------------------------------------------------------

Chapters:

0:00 Paper Introduction

0:55 Quantization

1:31 Introducing BitNet b1.58

2:55 BitNet b1.58 Benefits

4:01 BitNet b1.58 Architecture

4:46 Results

This paper introduce an interesting and exciting architecture for large language models, called BitNet b1.58, which significantly reduces LLMs memory consumption, and speeds-up LLMs inference latency. All of that, while showing promising results, that do not fall from a comparable LLaMA model!

Large language models quantization is already tackling the same problem, and we'll explain the benefits of BitNet b1.58 comparing to common quantization techniques.

BitNet b1.58 is an improvement for the BitNet model presented few months ago.

-----------------------------------------------------------------------------------------------

👍 Please like & subscribe if you enjoy this content

-----------------------------------------------------------------------------------------------

Chapters:

0:00 Paper Introduction

0:55 Quantization

1:31 Introducing BitNet b1.58

2:55 BitNet b1.58 Benefits

4:01 BitNet b1.58 Architecture

4:46 Results

Комментарии

0:17:06

0:17:06

0:06:10

0:06:10

0:13:59

0:13:59

0:07:18

0:07:18

0:08:51

0:08:51

0:06:08

0:06:08

0:20:08

0:20:08

0:46:25

0:46:25

0:17:26

0:17:26

0:07:31

0:07:31

0:11:04

0:11:04

0:43:18

0:43:18

0:46:50

0:46:50

0:57:45

0:57:45

0:22:23

0:22:23

0:00:59

0:00:59

0:00:37

0:00:37

0:12:48

0:12:48

0:28:43

0:28:43

0:16:10

0:16:10

0:04:09

0:04:09

0:04:40

0:04:40

0:37:41

0:37:41

0:13:45

0:13:45