
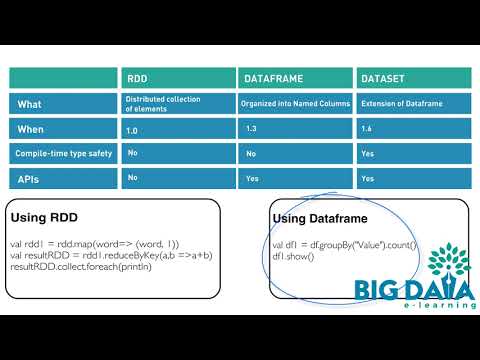

RDD vs DataFrame vs Datasets | Spark Tutorial Interview Questions #spark #sparktuning


As part of our Spark interview question series, we want to help you prepare for your Spark interviews. We will discuss various Spark topics, such as lineage, reduceByKey vs groupByKey, and YARN client mode vs YARN cluster mode. In this video, we cover the differences between RDDs, DataFrames, and Datasets.

Please subscribe to our channel.

Here is a link to other Spark interview questions:

Here is a link to other Hadoop interview questions:


RDD vs Dataframe vs Dataset

rdd dataframe and dataset difference || rdd vs dataframe vs dataset in spark || Pyspark video - 8

RDD vs Dataframe vs Dataset | Interview Question | Spark Tutorial |


Spark APIs | Spark programming for beginners | RDD vs Dataframe vs Dataset

02. Databricks | PySpark: RDD, Dataframe and Dataset

RDD vs DataFrames vs Datasets

RDD vs DataFrame vs Dataset | big data interview questions and answers #10 | Spark | TeKnowledGeek

RDD vs DataFrame vs Dataset

A Tale of Three Apache Spark APIs: RDDs, DataFrames, and Datasets - Jules Damji

4.6 RDD vs DataFrame | Spark Interview Questions

Spark Data Sets Vs Spark Data Frames | Difference in Spark Data frame and Data set

RDD vs DataFrame Vs DataSet in Spark | Spark Interview questions | Bigdata FAQ

Apache Spark DataFrame vs Dataset vs RDD | Project Tungsten, Catalyst Optimizer | PySpark Tutorial

RDD vs Dataframe vs Dataset | With sample code | Spark Interview Questions

SPARK SQL - RDD vs dataframe vs dataset differences

SPARK SQL - RDD vs dataframe vs dataset features

RDD vs DataFrame in Spark ?

RDD vs Dataframe vs Dataset | Spark Interview Question Series | Spark tutorial | Dataframe | Dataset

RDD vs Dataframe vs Dataset | Spark APIs | Spark programming for beginners | Pyspark tutorial

Pyspark Tutorial 10,Differences between RDD, Dataframe and Dataset,#PysparkTutorial,#RDDAndDataframe

Rdd Vs Dataframe Easily Explained| Apache Spark Interview Questions

Spark DataFrames & Datasets

🐬 [APACHE SPARK] 🔍 RDD vs DATA FRAME vs DATA SET 🚀 Differences between RDD, Data Frame and Data Set 😎...
