What is Neural Search? Nils Reimers - Sentence Transformers and Embedding Evaluation

Sentence Transformers and Embedding Evaluation - Talking Language AI Ep#3 Full episode:

About The Speaker:

Nils is the creator of Sentence-BERT and the popular Sentence Transformers library, and has authored several well-known research papers. He has also worked as a Research Scientist at HuggingFace, (co-)founded several web companies, and worked as an AI consultant in investment banking, media, and IoT.

===

In our conversation, Nils introduces the Sentence-BERT package and the large language models provided in it. He also shares lessons from his experience developing such a popular open-source package. Finally, Nils touches on his research collaborations on evaluating embeddings, including MTEB (Massive Text Embedding Benchmark) and BEIR.

To go deeper into these tools, and other concepts around embeddings, watch the video and join the conversation on Discord. Stay tuned for more episodes in our Talking Language AI series!

===

Discussion thread for this episode (feel free to ask questions):

===

Resources:

What is Neural Search? Nils Reimers - Sentence Transformers and Embedding Evaluation

Nils Reimers: Advances in Neural Search

Nils Reimers @ECIR 2023 — Multilingual Neural Search, Retrieve and Generate w/ Large Language Models...

Introduction - Recent Developments in Neural Search

Next Generation of Semantic Search - Nils Reimers | Munich NLP Hands-on 004

Training State-of-the-Art Text Embedding & Neural Search Models

Neural Search for Low Resource Scenarios

Nils Reimers: Sentence Transformers, Search, Future of NLP | Learning from Machine Learning #3

Sentence Transformers and Embedding Evaluation - Nils Reimers - Talking Language AI Ep#3

Nils Reimers — Extending Neural Retrieval Models to New Domains and Languages

Neural Search in the Low-Resource Scenario - Nils Reimers (Hugging Face) @ Cardamom Seminar

What is Neural Search?

Intro to Neural Search

Haystack LIVE! - Unsupervised Domain Adaptation for Neural Search - Nils Reimers of HuggingFace

Domain Adaptation for Dense Information Retrieval

Neural Search Engine Functions

Nils Reimers on Cohere Search AI - Weaviate Podcast #63!

Multilingual and cross lingual embeddings - Nils Reimers

Nils Reimers — Transformers at Work

#31 - Deep Learning, Research, NLP, Career and Vector Databases with Nils Reimers

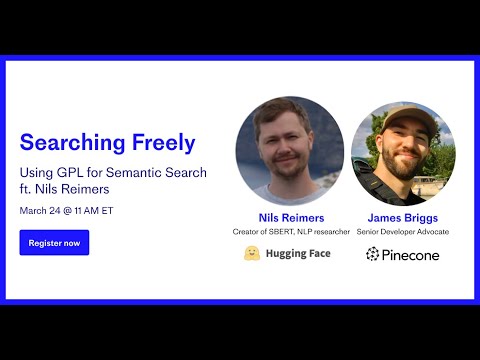

Searching Freely: Using GPL for Semantic Search ft. Nils Reimers

Nurturing Effective Search Skills for Engineers in PyTorch

AI in Action E477: Nils Reimers, Director of Machine Learning at Cohere

Recent Developments in Neural Search
