LLM Evaluation using DeepEval

DeepEval is a popular Python framework for evaluating LLM applications and building test cases for them. This video explains how to use DeepEval and walks through its main features. #ai #ml #llm #evaluation #generativeai
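The basic idea behind framework-driven LLM evaluation is to pair a test case with a metric and a pass threshold. A test case holds an input, the application's actual output, and optionally a reference answer. The sketch below illustrates that pattern in plain, dependency-free Python. It is not DeepEval's API. `SimpleTestCase`, `KeywordRecallMetric`, and `run_tests` are hypothetical names made up for illustration. DeepEval's own classes, such as `LLMTestCase` and metrics like `AnswerRelevancyMetric`, typically use an LLM as the judge and need model credentials. Check the DeepEval documentation for their exact parameters.

```python
from dataclasses import dataclass
import re


# Hypothetical stand-in for an LLM test case; NOT DeepEval's LLMTestCase class.
@dataclass
class SimpleTestCase:
    input: str            # prompt sent to the LLM application
    actual_output: str    # what the application returned
    expected_output: str  # reference answer written by the tester


def _tokens(text: str) -> set[str]:
    """Lowercase word tokens with punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class KeywordRecallMetric:
    """Hypothetical metric: fraction of reference-answer words found in the output.

    Deterministic on purpose so it runs without an API key; real LLM-judge
    metrics score semantics rather than word overlap.
    """
    threshold: float = 0.5

    def measure(self, case: SimpleTestCase) -> float:
        expected = _tokens(case.expected_output)
        if not expected:
            return 1.0  # nothing to recall, treat as a perfect score
        return len(expected & _tokens(case.actual_output)) / len(expected)

    def is_successful(self, case: SimpleTestCase) -> bool:
        # A test case passes when its score reaches the threshold.
        return self.measure(case) >= self.threshold


def run_tests(cases, metric):
    """Score every case and return (passed, failed) counts."""
    results = [metric.is_successful(c) for c in cases]
    return results.count(True), results.count(False)


cases = [
    SimpleTestCase(
        input="What is the capital of France?",
        actual_output="The capital of France is Paris.",
        expected_output="Paris is the capital of France.",
    ),
    SimpleTestCase(
        input="What is the capital of France?",
        actual_output="I am not sure.",
        expected_output="Paris is the capital of France.",
    ),
]
metric = KeywordRecallMetric(threshold=0.6)
for case in cases:
    print(f"{metric.measure(case):.2f}", metric.is_successful(case))
print(run_tests(cases, metric))
```

Running it prints `1.00 True`, `0.00 False`, then `(1, 1)`. The first answer covers every word of the reference and passes. The second shares none and fails. Keeping the metric separate from the test case lets the same cases be re-scored with a different metric or threshold. This mirrors, loosely, how evaluation frameworks let you attach several metrics to one dataset.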

How to Setup DeepEval for Fast, Easy, and Powerful LLM Evaluations

🔥🔥 #deepeval - #LLM Evaluation Framework | Theory & Code

LangSmith Tutorial - LLM Evaluation for Beginners

Session 7: RAG Evaluation with RAGAS and How to Improve Retrieval

Deepeval LLM evaluation intro | #hacking_ai on #Twitch

LLM Evaluation Basics: Datasets & Metrics

Testing Framework Giskard for LLM and RAG Evaluation (Bias, Hallucination, and More)

LIVE Recording | Testing an LLM | Exploring Tools for Testing LLMs | Part 2 - DeepEval

Evaluating the Output of Your LLM (Large Language Models): Insights from Microsoft & LangChain

deepeval llm evaluation framework theory code

LLM Evaluation With MLFLOW And Dagshub For Generative AI Application

How to evaluate an LLM-powered RAG application automatically.

Evaluate LLMs - RAG

RAGAS: How to Evaluate a RAG Application Like a Pro for Beginners

Learn to Evaluate LLMs and RAG Approaches

Testing LLMs or AI chatbots using Deepeval

Top 3 Open-Source Tools for Evaluating Your LLM: Automate AI Quality Checks!

LLM Evaluation with Mistral 7B for Evaluating your Finetuned models

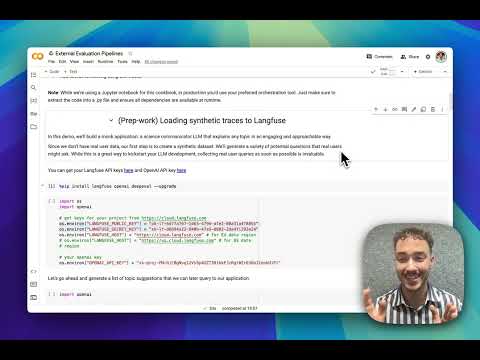

Evaluating LLM Applications with External Evaluation Pipelines in Langfuse

How to Build, Evaluate, and Iterate on LLM Agents

LLM Evaluation Intro

AI Agent Evaluation with RAGAS

RAGAs- A Framework for Evaluating RAG Applications
