Creating External Table with Spark

In this video lecture, we learn how to create an external table in Hive using Apache Spark 2 and how to identify the Hive table's location. We use the Impala shell to check the Hive table, which also involves Impala's INVALIDATE METADATA and COMPUTE STATS commands.
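The description above can be sketched as the SQL statements involved at each step. This is a minimal illustration, not necessarily the approach taken in the video: the table name `demo.orders`, its columns, the path `/tmp/demo/orders`, and the helper function names are all hypothetical. The helpers only build statement strings; the comments note where each one would be run.

```python
# Sketch only: builds the statements as strings so it runs without a cluster.
# All table names, columns, and paths below are hypothetical placeholders.


def external_table_ddl(table, columns, location, file_format="PARQUET"):
    """Return a HiveQL CREATE EXTERNAL TABLE statement.

    columns is a list of (name, type) pairs; location is the directory
    that holds the data files. Dropping an external table removes only
    the metadata, not the files at this location.
    """
    cols = ", ".join(f"{name} {dtype}" for name, dtype in columns)
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {table} ({cols}) "
        f"STORED AS {file_format} LOCATION '{location}'"
    )


def location_query(table):
    """Return a statement whose output includes the table's Location row."""
    return f"DESCRIBE FORMATTED {table}"


def impala_statements(table):
    """Return the Impala statements to pick up and profile the new table."""
    return [
        f"INVALIDATE METADATA {table}",  # make Impala reload the table metadata
        f"COMPUTE STATS {table}",        # gather table and column statistics
    ]


if __name__ == "__main__":
    table = "demo.orders"  # hypothetical database.table name
    ddl = external_table_ddl(
        table,
        [("id", "INT"), ("amount", "DOUBLE")],
        "/tmp/demo/orders",  # hypothetical HDFS directory
    )
    # In Spark, assuming a session built with
    # SparkSession.builder.enableHiveSupport().getOrCreate():
    #   spark.sql(ddl)
    #   spark.sql(location_query(table)).show(truncate=False)
    print(ddl)
    print(location_query(table))
    # In impala-shell, run each of these statements:
    for statement in impala_statements(table):
        print(statement + ";")
```

Keeping the statements as plain strings makes it easy to review them before running anything against a real metastore.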

Books I Follow:

Apache Spark Books:

Scala Programming:

Hadoop Books:

Hive:

HBase:

Python Books:

What is Managed and External table in PySpark | Databricks Tutorial |

Internal and external tables in Apache Spark

Spark Data Engineering Patterns – Shortcuts and external tables

Spark Interview question : Managed tables vs External tables

Azure Databricks Tutorial # 24:-What are Managed table and external table in pyspark databricks

19. Create and query external tables from a file in ADLS in Azure Synapse Analytics

Java IllegalArgumentException When Create External Table In Spark

Tecno Spark 30 Pro Review —Better than you think!

Spark SQL - Basic DDL and DML - Creating External Tables

5. Managed and External Tables(Un-Managed) tables in Spark Databricks

Hive Internal Vs External Table

Spark SQL for Data Engineering 6 : Difference between Managed table and External table #sparksql

Advancing Spark - External Tables with Unity Catalog

10.6. Hive | External Tables - Hands On

Data Engineering Spark SQL - Managing Tables - DDL & DML - Creating External Tables

Using Spark SQL DataFrameWriter to Create External Hive Table

Spark SQL Tutorial 3 | Create External Table In Spark SQL | Spark Tutorial | Data Engineering

How to Create External Table in Hive

Creating External Tables in Hive

Mastering Hive Tutorial | Internal VS External Tables | Interview Question

Spark 2 Catalog API - How to create a Hive Table

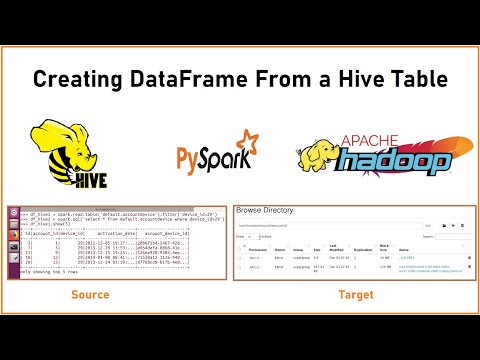

PySpark | Tutorial-11 | Creating DataFrame from a Hive table | Writing results to HDFS | Bigdata FAQ

Exploring The Power of Single Node Cluster:Creating Managed & External Table with Spark DDL Comm...
