filmov.tv

What is adversarial machine learning?

In this episode, we discuss the basics of adversarial machine learning, that is, how machine learning models can be "hacked".
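
To make "hacking" a model concrete, here is a minimal sketch of one well-known evasion attack, the fast gradient sign method (FGSM), applied to a toy logistic-regression classifier. FGSM is not necessarily the technique covered in the episode, and the weights `w`, bias `b`, input `x`, and step size `eps` below are hypothetical values chosen only for illustration. The attack nudges every input feature by at most `eps` in the direction that increases the model's loss, which in this toy case is enough to flip the prediction.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)


def sign(v: float) -> int:
    # Returns 1, -1, or 0.
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method: move each feature by eps in the
    direction that increases the log-loss for the true label y (0 or 1)."""
    p = predict_proba(w, b, x)
    # For logistic regression, d(log-loss)/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical toy model and input, for illustration only.
w = [2.0, -1.0, 0.5]
b = -0.5
x = [0.6, 0.2, 0.4]
y = 1
eps = 0.3

x_adv = fgsm(w, b, x, y, eps)

# Predicted class before and after the attack.
print(int(predict_proba(w, b, x) > 0.5), int(predict_proba(w, b, x_adv) > 0.5))  # prints "1 0"
```

Because the input gradient of the log-loss is `(p - y) * w`, the perturbation for a true label of 1 reduces to `-eps * sign(w)`, which lowers the logit by `eps` times the L1 norm of `w` (here 0.3 × 3.5 = 1.05, taking the logit from 0.7 to -0.35).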

Overview of Adversarial Machine Learning

Adversarial Machine Learning explained! | With examples.

What are GANs (Generative Adversarial Networks)?

Adversarial Machine Learning: What? So What? Now What?

What is adversarial machine learning?

Adversarial Artificial Intelligence - SY0-601 CompTIA Security+ : 1.2

What is Adversarial Machine Learning

A Friendly Introduction to Adversarial Machine Learning

MedAI #131: Analyzing and Exposing Vulnerabilities in Language Models | Yibo Wang

Adversarial Attack Demo

Adversarial Machine Learning

Nicholas Carlini – Some Lessons from Adversarial Machine Learning

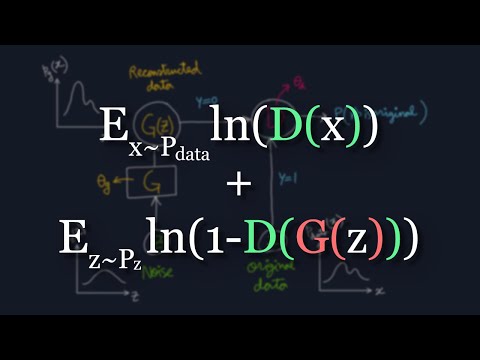

The Math Behind Generative Adversarial Networks Clearly Explained!

Adversarial Attacks on Neural Networks - Bug or Feature?

Adversarial Robustness

Generative Adversarial Networks (GANs) - Computerphile

What are generative adversarial networks ? GANs #artificialintelligence #ai #datascience

Lecture 16 | Adversarial Examples and Adversarial Training

Five examples of adversarial machine learning attacks

A Beginner's Guide to Adversarial Machine Learning

Adversarial Attacks + Re-training Machine Learning Models EXPLAINED + TUTORIAL

Exploring the World of Adversarial Machine Learning

A Friendly Introduction to Generative Adversarial Networks (GANs)

CrikeyCon 2021 - Edward Prior - Introduction to Adversarial Machine Learning and other AI attacks
