
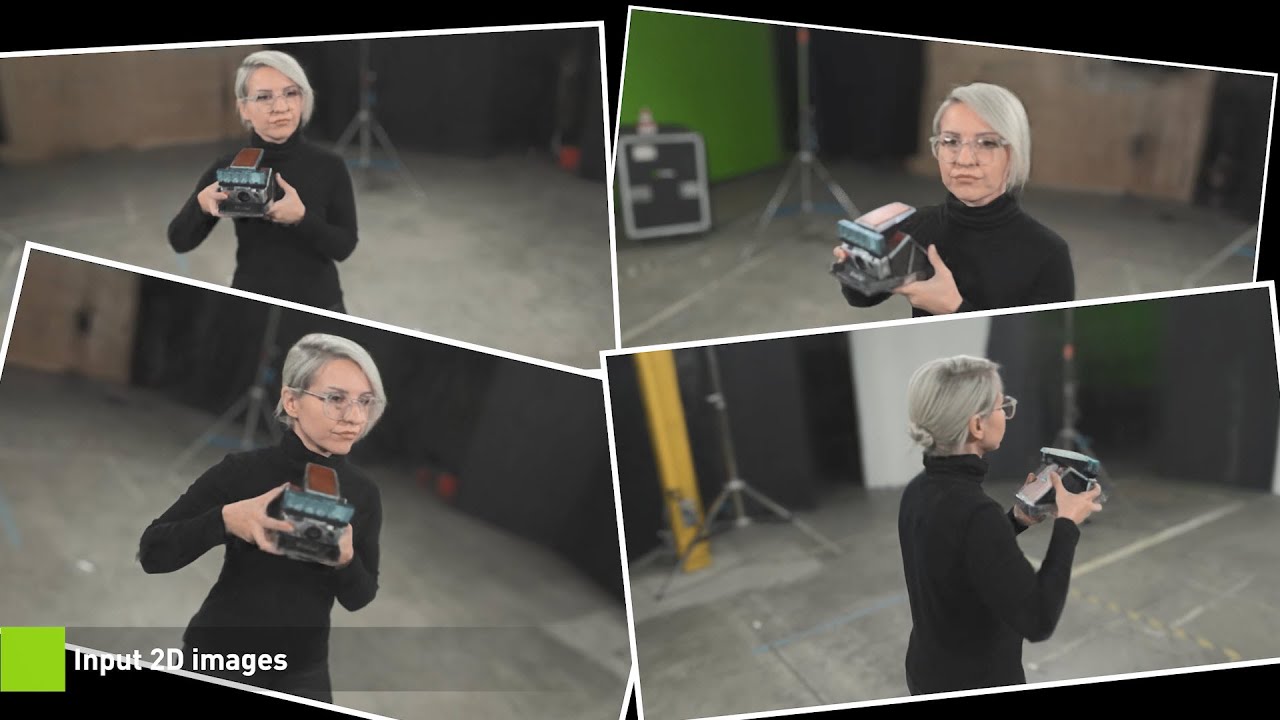

NVIDIA Instant NeRF: NVIDIA Research Turns 2D Photos Into 3D Scenes in the Blink of an AI


When the first instant photo was taken 75 years ago with a Polaroid camera, capturing the 3D world in a realistic 2D image so quickly was groundbreaking. Today, AI researchers are working on the opposite: using neural radiance fields (NeRFs) to turn a collection of still images into a digital 3D scene in a matter of seconds.

#GTC22 #AI #neuralradiance #neuralgraphics #rendering #deeplearning

NeRF, Deep Learning, Neural Radiance Fields, Neural Graphics, Rendering



Instant NeRF - NVIDIA Research Turns 2D Photos Into 3D Scenes in the Blink of an AI

NVIDIA’s New AI: Wow, Instant Neural Graphics! 🤖

Instant NeRF Sweepstakes

NVIDIA Just Made AI Photogrammetry 1,000x Faster [Instant-NGP]

NVIDIA Introduces No Code Instant NeRF!

Turn Photos into 3D Scenes in Milliseconds ! Instant NeRF Explained

NVIDIA Instant NERF 3D Scene Reconstruction with your Phone

NVIDIA Instant NeRF

NVidia Instant NeRF test

Instant NERF Sweepstakes Winners

Hands on With Nvidia Instant NeRFs

Introducing new AI technique - NeRF! #shorts

BMPCC 4k + Instant NGP Nvidia Nerf | Cinematic test

NVIDIA Instant NeRFs

Create an Immersive Experience for VR with Instant NeRF

Nvidia Instant-ngp: Create your own NeRF scene from a video or images (Hands-on Tutorial)

NVIDIA’s New AI Is 20x Faster…But How?

NVIDIA NeRF Test #1: Using AI to create a 3D scene from still images

Export 3D Object from Nvidia (instant-ngp) NeRF and load it into Blender and MeshLab

Useful tips to install Nvidia Instant Nerf

Experiment on NVidia Instant-ngp

How To Make Datasets for Instant NGP (NeRF)

NVIDIA recently introduced the Instant NeRF neural network,
