filmov

tv

The Principles of Data Modeling for MongoDB

Показать описание

Creating a schema for a relational database is a straightforward process. Designing a schema for a MongoDB application may seem a little more challenging. However, it doesn't have to be if you follow the main principles that MongoDB has identified for its users. This talk will go over these data modeling principles. We'll also reveal modeling tips to address the constant changes in the data technology world like new features in MongoDB, hardware evolution, data lakes, and the growing impact of analytics.

Speaker: Jay Runkel, Distinguished Solutions Architect

Company: MongoDB

Level: Intermediate

#MongoDBlocalToronto22

Speaker: Jay Runkel, Distinguished Solutions Architect

Company: MongoDB

Level: Intermediate

#MongoDBlocalToronto22

The Principles of Data Modeling for MongoDB

The Principles of Data Modeling for MongoDB

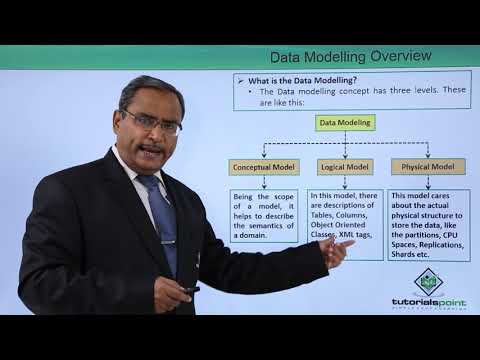

Data Modelling Overview

The Principles of Data Modeling for MongoDB

What is Data Modelling? Beginner's Guide to Data Models and Data Modelling

What is Data Modeling? | IDERA Data University

The Principles of Data Modeling for MongoDB (MongoDB World 2022)

Developer Jumpstart: The Principles of Data Modeling for MongoDB

18. Classification Model using Decision Trees

Concepts and Principles of Conceptual Data Modeling

Methodology and Principles of Data Modeling for MongoDB

Data Modeling vs. Data Architecture

Conceptual vs Logical Data Models - What are the key differences?

What is STAR schema | Star vs Snowflake Schema | Fact vs Dimension Table

The World is Flat: Design Principles for Salesforce Data Modeling

Data Modeling Tutorial: Star Schema (aka Kimball Approach)

Conceptual, Logical & Physical Data Models

Data Modeling Tutorial

Data Governance Explained in 5 Minutes

Database vs Data Warehouse vs Data Lake | What is the Difference?

7 Database Design Mistakes to Avoid (With Solutions)

Data Ed Online: Data Modeling Fundamentals

Advanced Data Modeling

[Training] Data Modeling

Комментарии

0:27:45

0:27:45

0:38:32

0:38:32

0:03:41

0:03:41

0:28:35

0:28:35

0:18:59

0:18:59

0:01:22

0:01:22

0:30:07

0:30:07

0:29:57

0:29:57

0:11:27

0:11:27

1:04:56

1:04:56

0:46:27

0:46:27

0:02:54

0:02:54

0:05:00

0:05:00

0:06:59

0:06:59

0:29:36

0:29:36

0:16:34

0:16:34

0:13:45

0:13:45

0:40:27

0:40:27

0:05:22

0:05:22

0:05:22

0:05:22

0:11:29

0:11:29

1:30:31

1:30:31

0:32:06

0:32:06

![[Training] Data Modeling](https://i.ytimg.com/vi/roVfcHBvD3w/hqdefault.jpg) 0:29:09

0:29:09