The MOST IMPORTANT topic in Machine Learning right now

Today we're discussing the most important topic in Machine Learning right now: model explainability. It is one of the hottest discussion points in the data community because, ultimately, if we cannot understand how models arrive at their predictions, they become useless in many practical applications.

As I mentioned in the video, I'm including all the relevant links:

-------------------------------------------

If you'd like to make my day, and help me keep going (with a better mic, lol), feel free to get me my beloved coffee :)

Instagram: @karo_sowinska


Hindi Grammar Class 12 Bihar Board | Hindi Vyakaran | Hindi grammar Most important Topic

The Most Important Topic in Life!

What is the most important influence on child development | Tom Weisner | TEDxUCLA

CA foundation accountancy most important topics | Jan 2025 | CA Vipul Dhall | iWision

Glenn: THIS is the most important topic of my LIFETIME

Best Topics for Presentation / Topic for speech / Interesting Topics

Top 10 Topics for 80+ Marks - CA Intermediate Advanced Accounting 2025 exams

5 MOST IMPORTANT MATHS CHAPTERS | CLASS 12TH | SCORE 100% IN MATHS CLASS 12 CBSE | ONE SHOT OVERVIEW

CA Inter Audit Most Important Topics To Not Skip #caexamstrategy

Most IMPORTANT Topics of History For Class 12th Board Exam🔥#Shorts #Cbse2025 #Class12BoardPrep

Most Important Question for Business Studies Class 12|Business studies Important Topic 2023#class12

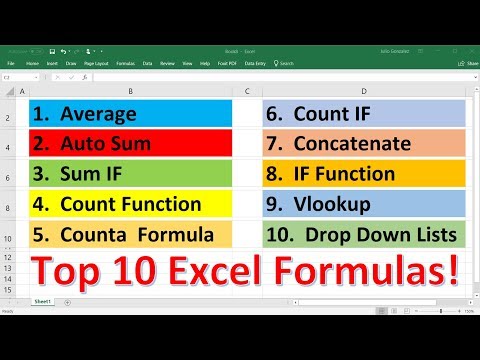

Top 10 Most Important Excel Formulas - Made Easy!

GS CGL 2023 (POLITY IMPORTANT TOPIC )

DON'T Study SST 🤯 Social Science Important Questions Class 10 SST Important Topics

GEOGRAPHY SSC CGL 2023 IMPORTANT TOPIC

How to BOOST your MOCK SCORES? | Most Important Topics in CET | Topic-wise weightage

Most Important Topics of Accounts For Class 12 Board Exam | CBSE 2025 Syllabus Overview & Weight...

SSC CGL tier-1 General Intelligence and Reasoning topic wise weightage SSC CGL 2023 #ssccgl

Don't Leave this Topic from REAL NUMBERS 😨 Most Important ⚠️#maths #byju #class10boards #class1...

CA Foundation Business Laws Most Expected Topics | CA Foundation Jan 2025 | Must Watch

Class 12th Biology | Top 10 Most Important Question | Biology 10 Vvi Question | 12th Board Exam 2025

Useful IDIOMS for Any Topic in IELTS Speaking

The most important skills of data scientists | Jose Miguel Cansado | TEDxIEMadrid
