filmov

tv

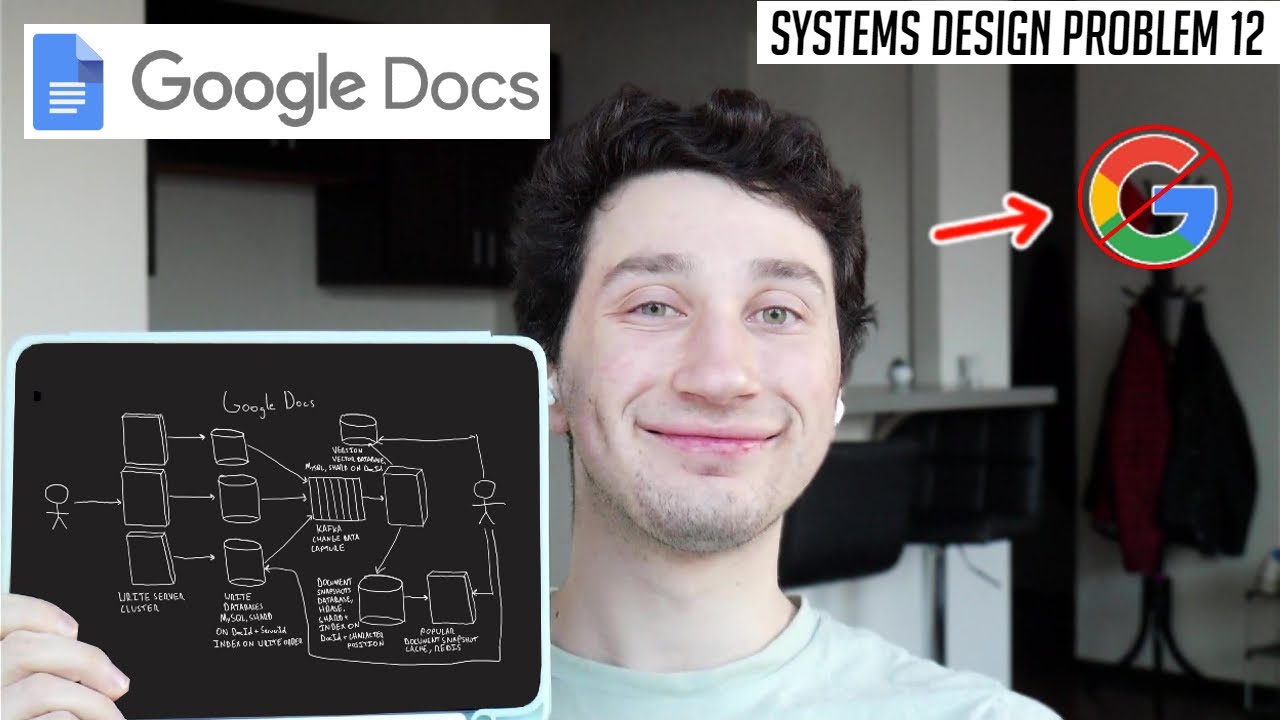

12: Design Google Docs/Real Time Text Editor | Systems Design Interview Questions With Ex-Google SWE

Показать описание

I swear Kate Upton and Megan Fox wrote I was handsome and sexy, you guys just didn't use two phase commit for your document snapshots and version vectors so you never received those writes on your local copy (since your version vector was more up to date than the document snapshot)!

12: Design Google Docs/Real Time Text Editor | Systems Design Interview Questions With Ex-Google SWE

System Design of Google Docs: Real-Time Collaboration & Scalability Explained

Google Docs Design Deep Dive with Google SWE! | Systems Design Interview Question 13

COMPLETE SYSTEM DESIGN OF GOOGLE DOCS [2022 LATEST] - Concurrent editing, Version history & More...

How Google Docs Resolves Conflicts in Real-Time Collaboration at Scale

How to make a real-time collaborative text editor in 5 easy steps! // Rudi Chen

Notion is... overrated?

Google Docs System Design | Collaborative Editor System Design | Operational Transformation

Top Apps I Use Daily as an Architect

A 12-year-old app developer | Thomas Suarez | TED

How To Write A Love Letter | Ross Smith

Changing Font in Entire Document in Word 2010 (Windows)

AI Tool That Creates Dashboards in Minutes for Free

How to Create a Dashboard in Google Sheets in 5 Minutes - 2024 Edition 📈

'This is how i organize my thoughts and my knowledge' - Jordan Peterson

Today’s Date and Time in Sheets? 🔥 [SHEETS TIPS! 💻] #shortsfeed

Software Architect Interview: Designing Google Docs | System Design Mock Interview

POV - Windows User Tries MacOS 😂

How to Crack Any System Design Interview

📄 How to Convert PDF to Google Docs 2024 [Easy Conversion]

Build and Deploy a Full Stack Google Drive Clone with Next.js 15

Can you Solve this Google Interview Question? | Puzzle for Software Developers

NEW! Make $225 Per Day Using Google Docs Hack | Make Money Online With Google

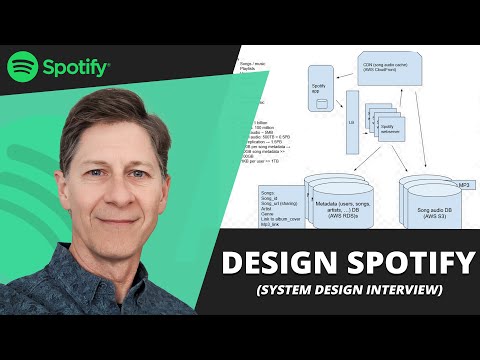

Google system design interview: Design Spotify (with ex-Google EM)

Комментарии

0:47:10

0:47:10

0:12:48

0:12:48

0:27:12

0:27:12

0:50:48

0:50:48

0:06:12

0:06:12

0:13:03

0:13:03

0:00:40

0:00:40

0:37:29

0:37:29

0:12:58

0:12:58

0:04:41

0:04:41

0:00:16

0:00:16

0:00:17

0:00:17

0:09:44

0:09:44

0:05:36

0:05:36

0:00:55

0:00:55

0:00:15

0:00:15

0:42:33

0:42:33

0:00:37

0:00:37

0:08:19

0:08:19

0:01:31

0:01:31

5:19:07

5:19:07

0:00:57

0:00:57

0:24:36

0:24:36

0:42:13

0:42:13