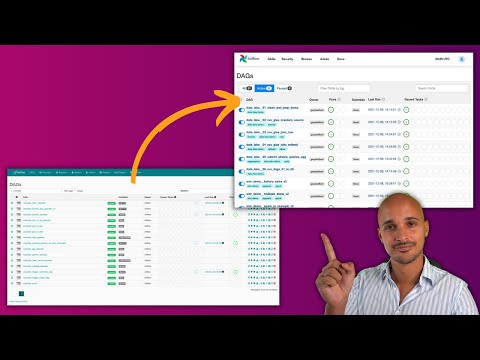

Don't Use Apache Airflow

Apache Airflow is touted as the answer to all your data movement and transformation problems, but is it? In this video, I explain what Airflow is, why it is not the answer for most data movement and transformation needs, and provide some better options.

Join my Patreon Community and Watch this Video without Ads!

Slides

Follow me on Twitter

@BryanCafferky

Follow Me on LinkedIn

Don't Use Apache Airflow (0:16:21)

What They Don't Tell You About Apache Airflow (0:11:10)

The Realities Of Airflow - The Mistakes New Data Engineers Make Using Apache Airflow (0:12:26)

Why Airflow? The Top 5 Reasons To Use It! (0:10:01)

Apache Airflow in under 60 seconds (0:00:53)

Learn Apache Airflow in 10 Minutes | High-Paying Skills for Data Engineers (0:12:38)

Airflow explained in 3 mins (0:04:17)

Data Engineer Tip for Airflow Alternative (0:00:57)

How to Run Airflow Locally without Docker! (0:05:14)

What is Apache Airflow? For beginners (0:11:50)

How to Automate Your API Requests Using Airflow! (0:00:38)

Airflow and Analytics Engineering - Dos and don'ts (0:11:10)

What's new in Apache Airflow 2.10? (0:15:50)

How to use timezones in Apache Airflow (0:03:57)

Building (Better) Data Pipelines with Apache Airflow (0:11:41)

Apache Airflow vs. Dagster (0:06:57)

Apache Airflow One Shot- Building End To End ETL Pipeline Using AirFlow And Astro (0:51:35)

🌈Apache Airflow for beginners (0:29:06)

Open-Source Spotlight - Apache Airflow - Marc Lamberti (0:27:48)

Master Apache Airflow: 5 Real-World Projects to Get You Started (0:13:18)

Running Apache Airflow Reliably with Kubernetes | Astronomer (0:37:30)

How to Deploy Airflow From Dev to Prod Like A BOSS (0:27:35)

Luther Hill: Use Apache-Airflow to Build Data Workflows | PyData Indy 2019 (0:38:03)

Implementing Event Based DAGs with Airflow (0:20:59)