GopherCon 2018: Kavya Joshi - The Scheduler Saga

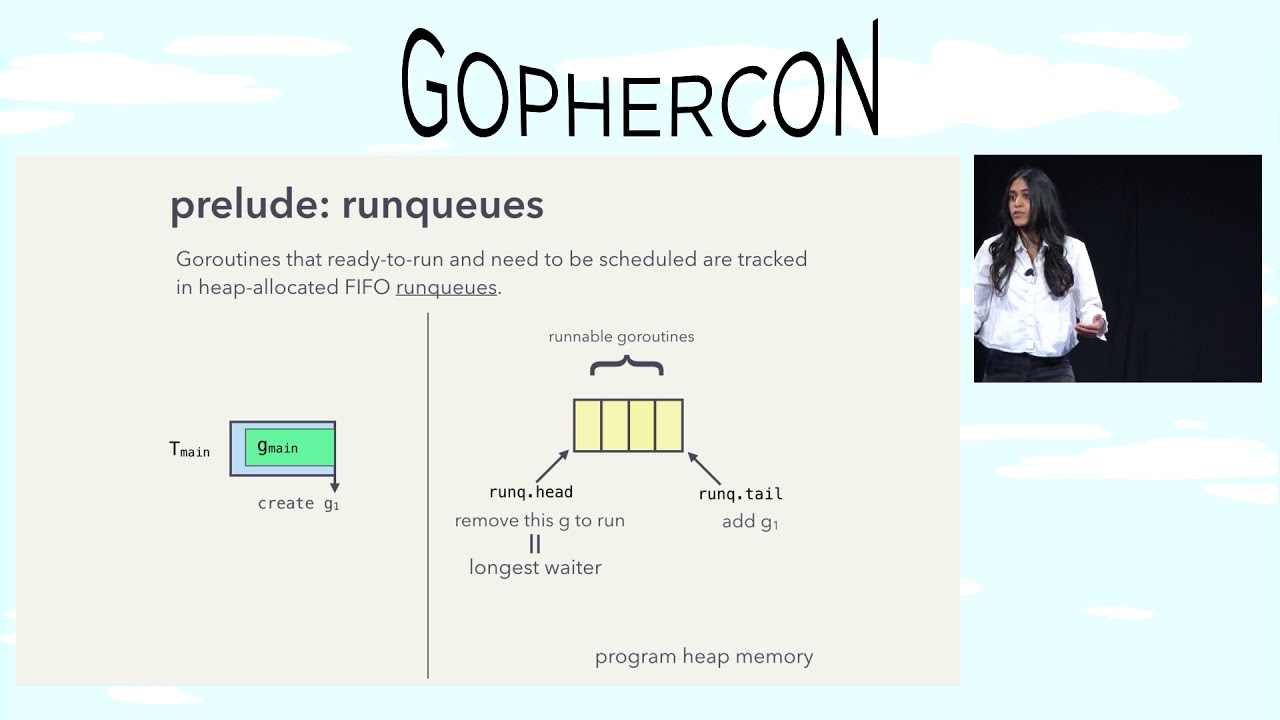

The Go scheduler is the behind-the-scenes magical machine that powers Go programs. It efficiently runs goroutines and also coordinates network I/O and memory management.

Kavya’s talk will explore the inner workings of the scheduler machinery. She will delve into the M:N multiplexing of goroutines on system threads, and the mechanisms to schedule, unschedule, and rebalance goroutines. Kavya will also touch upon how the scheduler supports the netpoller and the memory management systems for goroutine stack resizing and heap garbage collection. Finally, she will evaluate the effectiveness and performance of the scheduler.
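One way the scheduler rebalances goroutines is work stealing. In the Go runtime, each logical processor (a "P") keeps a local run queue, and a P that runs out of work steals roughly half of another P's queue. The toy model below illustrates that idea in a single thread, under those assumptions. It is not the runtime's code. `P`, `steal`, and `next` are hypothetical names, and the real scheduler also checks a global run queue and the netpoller, and it visits steal victims in random order.

```go
package main

import "fmt"

// P is a hypothetical, simplified stand-in for a runtime processor:
// it owns a FIFO queue of runnable goroutine IDs.
type P struct {
	queue []int
}

// steal moves the oldest half (rounded up) of victim's queue onto the end
// of thief's queue and reports how many goroutines were moved.
func steal(thief, victim *P) int {
	n := len(victim.queue)
	take := n - n/2
	if take == 0 {
		return 0
	}
	thief.queue = append(thief.queue, victim.queue[:take]...) // copies values
	victim.queue = victim.queue[take:]
	return take
}

// next returns the goroutine p should run next. It prefers p's own queue.
// If that queue is empty, it steals from the first non-empty peer.
func next(p *P, all []*P) (int, bool) {
	if len(p.queue) == 0 {
		for _, victim := range all {
			if victim != p && steal(p, victim) > 0 {
				break
			}
		}
	}
	if len(p.queue) == 0 {
		return 0, false // nothing runnable anywhere
	}
	g := p.queue[0]
	p.queue = p.queue[1:]
	return g, true
}

func main() {
	busy := &P{queue: []int{1, 2, 3, 4, 5, 6}}
	idle := &P{}
	g, ok := next(idle, []*P{busy, idle})
	fmt.Println(g, ok, busy.queue, idle.queue) // 1 true [4 5 6] [2 3]
}
```

Stealing half of the queue, rather than a single goroutine, lets an idle processor pick up a batch of work in one steal. That keeps steals infrequent while still evening out load.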

Related videos:

GopherCon 2017: Kavya Joshi - Understanding Channels

GopherCon 2018: Kat Zien - How Do You Structure Your Go Apps

'"go test -race" Under the Hood' by Kavya Joshi

GopherCon 2018: Nyah Check - 5 Mistakes C/C++ Devs Make While Writing Go

'A Practical Look at Performance Theory' by Kavya Joshi

GopherCon 2018: Tess Rinearson - An Over Engineering Disaster with Macaroons

Practical performance theory - Kavya Joshi (Samsara)

GopherCon 2021: Madhav Jivrajani - Queues, Fairness, and The Go Scheduler

GopherCon 2018: Sean T. Allen - Adventures in Cgo Performance

GopherCon 2018 Lightning Talk: Neil Primmer - Decentralizing CI CD Pipelines Using Go

GopherCon 2018: Ron Evans - Computer Vision Using Go and OpenCV 3

GopherCon UK 2018: Kat Zien - How do you structure your Go apps?

GopherCon 2018: Jon Bodner - Go Says WAT

Kavya Joshi

A Race Detector Unfurled -- Kavya Joshi

GopherCon 2018: Michael Stapelberg - Go in Debian

GopherCon 2018: Natalie Pistunovich - The Importance of Beginners

GopherCon 2018: Kevin Burke - Becoming a Go Contributor

Opening keynote: Go with Versions - GopherConSG 2018

GopherCon 2018: Sugu Sougoumarane - How to Write a Parser in Go

GopherCon 2018: Julia Ferraioli - Writing Accessible Go

GopherCon 2018: Hunter Loftis - Painting with Light

GopherCon 2021 - Day 2
