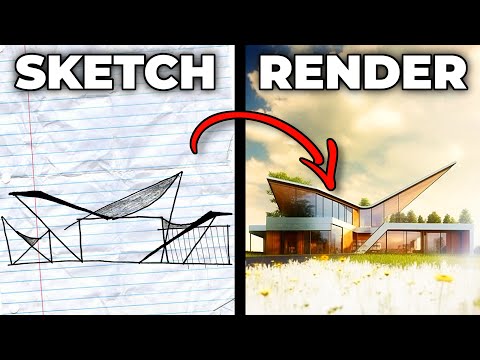

Transform Your Sketches into Masterpieces with Stable Diffusion ControlNet AI - How To Use Tutorial


Playlist of #StableDiffusion Tutorials, Automatic1111 and Google Colab Guides, DreamBooth, Textual Inversion / Embedding, LoRA, AI Upscaling, Pix2Pix, Img2Img:

Paper Adding #Conditional Control to Text-to-Image Diffusion Models :

0:00 What is the revolutionary new Stable Diffusion AI technology ControlNet

0:36 What is ControlNet with Canny Edge

0:49 What is ControlNet with M-LSD Lines

1:17 What is ControlNet with HED Boundary

1:41 What is ControlNet with User Scribbles

1:58 What is ControlNet Interactive Interface

2:08 What is ControlNet with Fake Scribbles

2:28 What is ControlNet with Human Pose

2:45 What is ControlNet with Semantic Segmentation

3:02 What is ControlNet with Depth

3:15 What is ControlNet with Normal Map

3:35 How to download and install Anaconda

4:33 How to download / git clone ControlNet

5:25 How to download ControlNet models from Hugging Face repo

6:37 Which folder to put the ControlNet models in

7:03 How to install ControlNet and generate a virtual environment with the correct dependencies

8:53 How to start and run the first app, Canny Edge

9:59 Correct local URL of the app

10:11 Testing the first test image (bird) with Canny Edge

11:42 How to start M-LSD lines ControlNet app

12:10 How to set low VRAM option in configuration

13:20 Start again M-LSD lines ControlNet app

13:37 Running Hough Line Maps app example

14:28 Example of Control Stable Diffusion with HED Maps

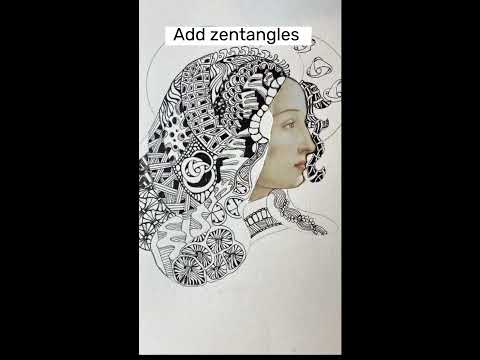

14:45 Testing ControlNet with User Scribbles

Used lineart source :

From official paper of Adding Conditional Control to Text-to-Image Diffusion Models

We present a neural network structure, ControlNet, to control pretrained large diffusion models to support additional input conditions. The ControlNet learns task-specific conditions in an end-to-end way, and the learning is robust even when the training dataset is small (< 50k). Moreover, training a ControlNet is as fast as fine-tuning a diffusion model, and the model can be trained on a personal device. Alternatively, if powerful computation clusters are available, the model can scale to large amounts (millions to billions) of data. We report that large diffusion models like Stable Diffusion can be augmented with ControlNets to enable conditional inputs like edge maps, segmentation maps, keypoints, etc. This may enrich the methods to control large diffusion models and further facilitate related applications.

1 Introduction

With the presence of large text-to-image models, generating a visually appealing image may require only a short descriptive prompt entered by users. After typing some text and getting the images, we may naturally come up with several questions: does this prompt-based control satisfy our needs? For example, in image processing, considering many long-standing tasks with clear problem formulations, can these large models be applied to facilitate these specific tasks? What kind of framework should we build to handle the wide range of problem conditions and user controls? In specific tasks, can large models preserve the advantages and capabilities obtained from billions of images? To answer these questions, we investigate various image processing applications and have three findings. First, the available data scale in a task-specific domain is not always as large as that in the general image-text domain. The largest dataset size of many specific problems (e.g., object shape/normal, pose understanding, etc.) is often under 100k, i.e., 5 × 10^4 times smaller than LAION-5B. This would require a robust neural network training method to avoid overfitting and to preserve generalization ability when the large models are trained for specific problems. Second, when image processing tasks are handled with data-driven solutions, large computation clusters are not always available. This makes fast training methods important for optimizing large models to specific tasks within an acceptable amount of time and memory space (e.g., on personal devices).
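The scale gap mentioned in the introduction (task-specific datasets of under 100k samples versus LAION-5B) can be checked with a few lines of arithmetic. This is only a rough sketch: it assumes LAION-5B holds a nominal 5 billion image-text pairs, as its name suggests, and takes 100k as the upper bound for a task dataset. The variable names are illustrative.

```python
# Nominal sizes (assumptions for illustration, not exact dataset counts).
LAION_5B_SIZE = 5_000_000_000   # assumed nominal size implied by the name "5B"
TASK_DATASET_SIZE = 100_000     # "often under 100k" per the introduction

# How many times smaller the task-specific dataset is.
ratio = LAION_5B_SIZE // TASK_DATASET_SIZE

# Print the ratio in scientific notation for comparison with the paper's figure.
print(f"Task dataset is about {ratio:.0e} times smaller than LAION-5B")
```

Under these assumptions the ratio comes out at fifty thousand, which matches the order of magnitude the paper quotes.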
