
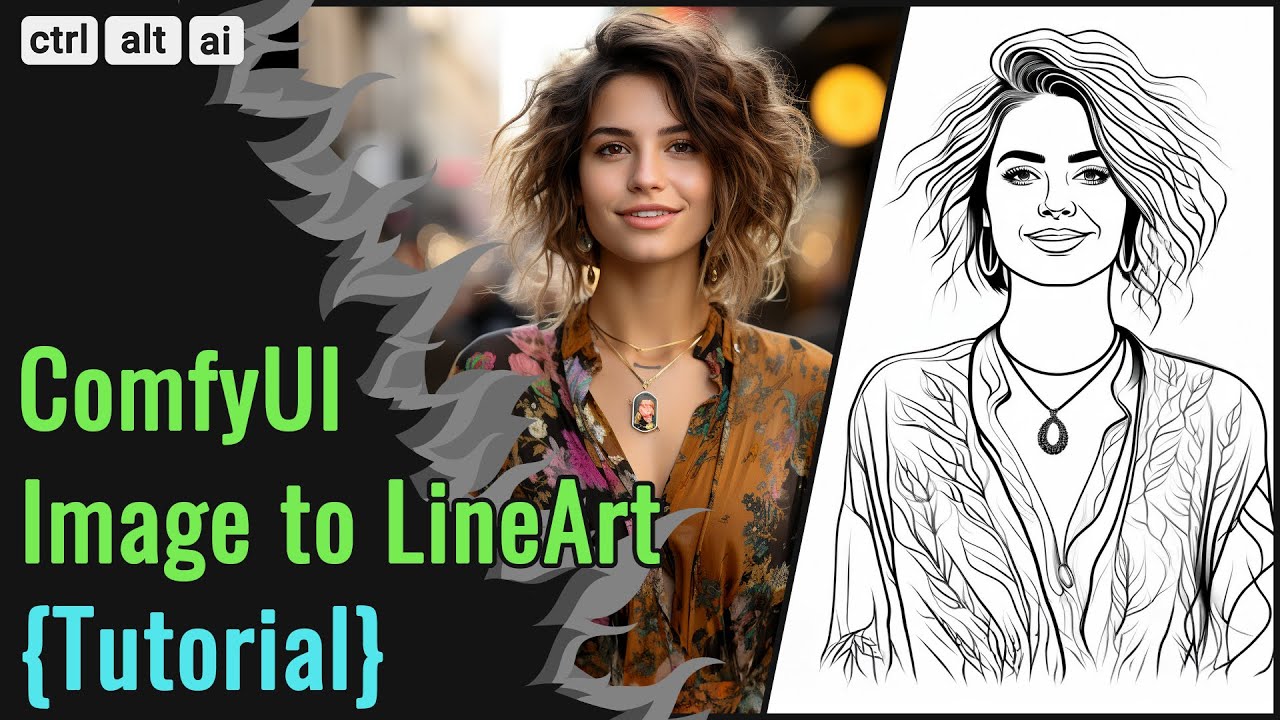

ComfyUI: Image to Line Art Workflow Tutorial


This is a comprehensive workflow tutorial on setting up ComfyUI to convert an image of any style into line art, for concept design or further processing. The workflow combines several techniques, including a ControlNet LoRA, IPAdapter, BLIP captioning, and combined prompting. It supports batch processing, background removal, upscaling, and post-processing effects such as color removal, gray shading removal, line thickness adjustment, and edge enhancement.
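The post-processing effects named above (color removal, gray shading removal, line thickness) are generic image operations. The following is a rough sketch, not the ComfyUI nodes used in the video, showing what each step does in plain Python on a tiny image stored as nested lists. The function names, the default threshold of 128, and the BT.601 luminance weights are assumptions chosen for illustration.

```python
# Hypothetical post-processing helpers for illustration only; these are NOT ComfyUI nodes.
# An image is a list of rows; each RGB pixel is an (r, g, b) tuple with 0-255 channels.


def remove_color(img):
    """Color removal: collapse each RGB pixel to one luminance value (ITU-R BT.601 weights)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row] for row in img]


def remove_gray_shading(gray, threshold=128):
    """Gray shading removal: map each pixel to pure black (0) or pure white (255)."""
    return [[0 if v < threshold else 255 for v in row] for row in gray]


def thicken_lines(binary, radius=1):
    """Line thickness: grow dark lines by `radius` pixels with a min filter.

    A pixel becomes the darkest value found in its (2*radius+1) x (2*radius+1)
    neighbourhood, so black strokes spread outward into the white background.
    """
    h, w = len(binary), len(binary[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            darkest = 255
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        darkest = min(darkest, binary[ny][nx])
            row.append(darkest)
        out.append(row)
    return out


def to_line_art(img, threshold=128, radius=1):
    """Chain the three steps: desaturate, binarize, then thicken the strokes."""
    return thicken_lines(remove_gray_shading(remove_color(img), threshold), radius)


if __name__ == "__main__":
    W, K, G = (255, 255, 255), (0, 0, 0), (200, 200, 200)  # white, black, light gray
    sample = [
        [W, W, W, W, W],
        [W, W, K, W, W],
        [W, G, W, W, W],
    ]
    for row in to_line_art(sample):
        print(row)  # each row prints [255, 0, 0, 0, 255]
```

In this toy input the light gray pixel falls above the threshold and is dropped as shading, while the single black pixel grows into a 3x3 stroke. In a real pipeline these steps would normally run on full images with an image library rather than nested Python lists.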

------------------------

Relevant Links:

------------------------

TimeStamps:

0:00 Intro.

1:02 Requirements.

5:45 Nodes Setup Part 1.

12:41 Nodes Setup Part 2.

16:17 Connecting the Nodes.

29:30 Editing Blip, Upscaling.

35:29 Removing Color & Grayscale.

38:56 Randomize Batch Process.

39:57 Fine-Tuning Example.

41:22 Background Removal.


