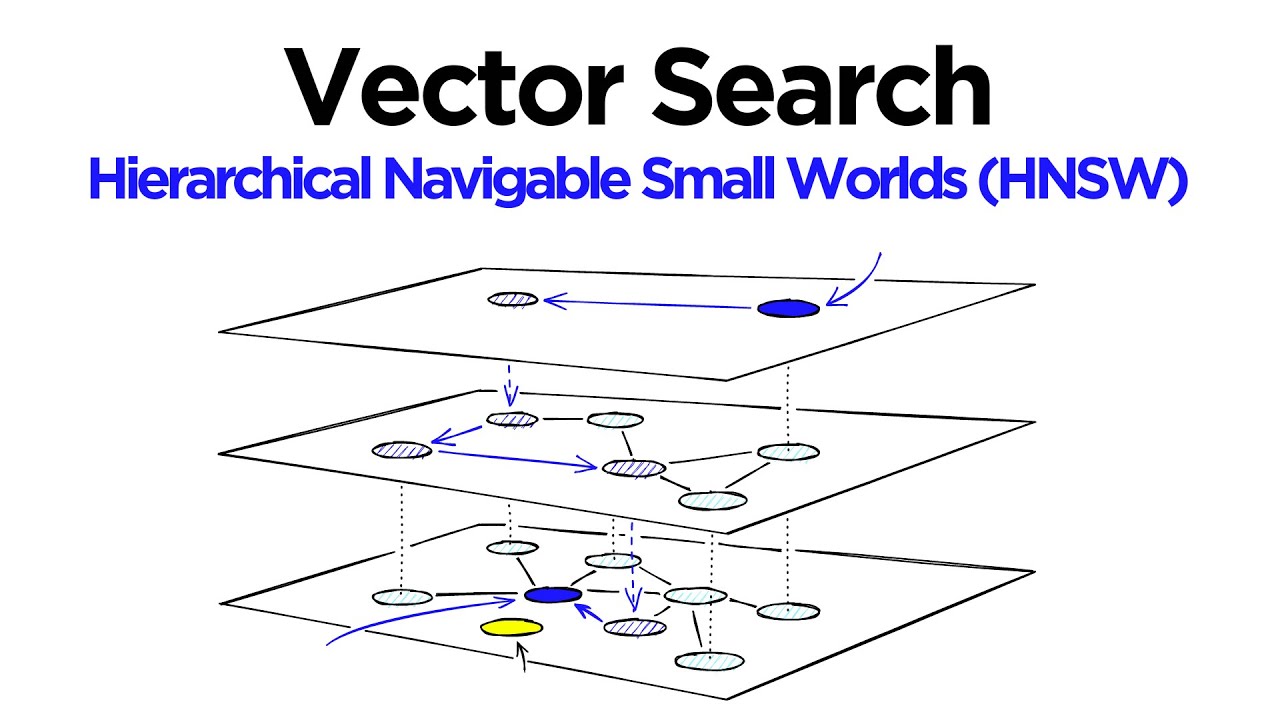

HNSW for Vector Search Explained and Implemented with Faiss (Python)

Hierarchical Navigable Small World (HNSW) graphs are among the top-performing indexes for vector similarity search. HNSW is a hugely popular technology that time and time again delivers state-of-the-art performance, with super-fast search speeds and excellent recall. HNSW is not to be missed.

Despite being a popular and robust algorithm for approximate nearest neighbors (ANN) searches, understanding how it works is far from easy.
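To make the core idea a little more concrete, here is a toy, pure-Python sketch of an HNSW-style index. It is not Faiss and not a faithful reproduction of the original paper. Each vector gets a random top layer. It is linked to its nearest neighbors on every layer it belongs to. A query descends greedily from the sparse top layer and then runs a best-first search on the dense bottom layer. Assumptions: squared Euclidean distance, a simple "keep the M closest" neighbor rule instead of the paper's selection heuristic, and the hypothetical names `TinyHNSW`, `dist`, `ef_construction`.

```python
import heapq
import math
import random


def dist(a, b):
    # Squared Euclidean distance: ranks neighbors exactly like L2
    return sum((x - y) ** 2 for x, y in zip(a, b))


class TinyHNSW:
    """Toy HNSW. Assumption: neighbors = the M closest candidates (no diversity heuristic)."""

    def __init__(self, M=8, ef_construction=40, seed=0):
        self.M, self.M0 = M, 2 * M          # layer 0 keeps up to 2*M links
        self.ef_construction = ef_construction
        self.mL = 1 / math.log(M)           # level multiplier
        self.rng = random.Random(seed)
        self.vectors, self.links = [], []   # links[node][layer] -> neighbor ids
        self.entry, self.max_level = None, -1

    def _search_layer(self, q, entry_points, ef, layer):
        # Best-first search on one layer; returns up to ef (distance, id) pairs, closest first
        visited = set(entry_points)
        candidates = [(dist(q, self.vectors[e]), e) for e in entry_points]
        heapq.heapify(candidates)                    # min-heap: nearest unexpanded node on top
        results = [(-d, e) for d, e in candidates]   # max-heap: farthest kept result on top
        heapq.heapify(results)
        while len(results) > ef:
            heapq.heappop(results)
        while candidates:
            d, c = heapq.heappop(candidates)
            if d > -results[0][0]:
                break                                # no remaining candidate can improve results
            for n in self.links[c][layer]:
                if n in visited:
                    continue
                visited.add(n)
                dn = dist(q, self.vectors[n])
                if len(results) < ef or dn < -results[0][0]:
                    heapq.heappush(candidates, (dn, n))
                    heapq.heappush(results, (-dn, n))
                    if len(results) > ef:
                        heapq.heappop(results)
        return sorted((-nd, e) for nd, e in results)

    def add(self, vec):
        node = len(self.vectors)
        # Random top layer: P(level >= l) = exp(-l / mL), so upper layers stay sparse
        level = int(-math.log(1.0 - self.rng.random()) * self.mL)
        self.vectors.append(vec)
        self.links.append([[] for _ in range(level + 1)])
        if self.entry is None:
            self.entry, self.max_level = node, level
            return
        ep = [self.entry]
        for layer in range(self.max_level, level, -1):   # greedy descent (ef = 1)
            ep = [self._search_layer(vec, ep, 1, layer)[0][1]]
        for layer in range(min(level, self.max_level), -1, -1):
            found = self._search_layer(vec, ep, self.ef_construction, layer)
            cap = self.M0 if layer == 0 else self.M
            self.links[node][layer] = [e for _, e in found[: self.M]]
            for n in self.links[node][layer]:            # reverse links, then shrink to cap
                nbrs = self.links[n][layer]
                nbrs.append(node)
                if len(nbrs) > cap:
                    base = self.vectors[n]
                    nbrs.sort(key=lambda m: dist(base, self.vectors[m]))
                    del nbrs[cap:]
            ep = [e for _, e in found]
        if level > self.max_level:
            self.entry, self.max_level = node, level

    def search(self, q, k=5, ef=40):
        if self.entry is None:
            return []
        ep = [self.entry]
        for layer in range(self.max_level, 0, -1):      # greedy descent to layer 1
            ep = [self._search_layer(q, ep, 1, layer)[0][1]]
        found = self._search_layer(q, ep, max(ef, k), 0)
        return [e for _, e in found[:k]]


rng = random.Random(42)
data = [[rng.random() for _ in range(8)] for _ in range(300)]
index = TinyHNSW(M=8, ef_construction=40)
for v in data:
    index.add(v)
print(index.search(data[7], k=5))   # ids of the 5 approximate nearest neighbors
```

The results are approximate by design. A larger `ef` at query time explores more of layer 0 and usually raises recall, at the cost of speed.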

This video helps demystify HNSW and explains this intelligent algorithm in an easy-to-understand way. Towards the end of the video, we'll look at how to implement HNSW using Faiss and which parameter settings give us the performance we need.

🌲 Pinecone article:

🤖 70% Discount on the NLP With Transformers in Python course:

🎉 Sign-up For New Articles Every Week on Medium!

👾 Discord:

00:00 Intro

00:41 Foundations of HNSW

08:41 How HNSW Works

16:38 The Basics of HNSW in Faiss

21:40 How Faiss Builds an HNSW Graph

26:49 Building the Best HNSW Index

33:33 Fine-tuning HNSW

34:30 Outro
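Related to the "How Faiss Builds an HNSW Graph" chapter, here is a sketch of how, to our understanding, Faiss decides which layers a new vector is inserted into. It uses the level multiplier mL = 1/ln(M), and each level l gets probability exp(-l/mL) * (1 - exp(-1/mL)). Levels whose probability falls below 1e-9 are dropped. Layer 0 allows 2*M links, and the upper layers allow M. This mirrors our reading of Faiss's `HNSW::set_default_probas` and `random_level`. The Python function names below are hypothetical.

```python
import math
import random


def default_level_probas(M, threshold=1e-9):
    """Per-level assignment probabilities and max links, as we read Faiss's defaults."""
    mL = 1.0 / math.log(M)                        # level multiplier
    probas, max_links = [], []
    level = 0
    while True:
        p = math.exp(-level / mL) * (1 - math.exp(-1 / mL))
        if p < threshold:                         # drop negligibly rare levels
            break
        probas.append(p)
        max_links.append(2 * M if level == 0 else M)  # layer 0 is denser
        level += 1
    return probas, max_links


def sample_level(probas, rng):
    # Inverse-CDF sampling: subtract each level's probability until u falls inside one
    u = rng.random()
    for level, p in enumerate(probas):
        if u < p:
            return level
        u -= p
    return len(probas) - 1                        # leftover mass goes to the top level


probas, max_links = default_level_probas(32)
for level, (p, n) in enumerate(zip(probas, max_links)):
    print(f"level {level}: P = {p:.3g}, max links = {n}")

rng = random.Random(0)
levels = [sample_level(probas, rng) for _ in range(10_000)]
print("share of vectors on layer 0 only:", levels.count(0) / len(levels))
```

With M = 32, the level-0 probability is 1 - 1/32, so roughly 97% of vectors live only on the bottom layer. This keeps the upper layers sparse enough for a fast greedy descent.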
