'Eigenvalues and Eigenvectors Simplified: Unraveling Their Significance with a Real-world Example'



#By Sir NolieBoy Rama Bantanos

The Author

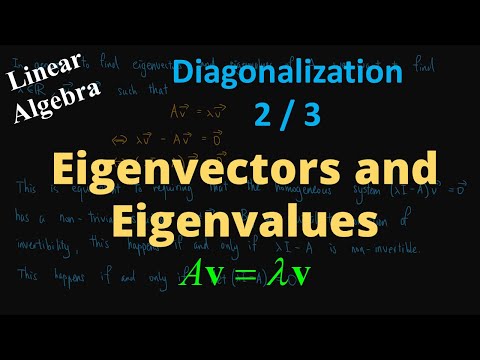

Eigenvalues and eigenvectors are core concepts in linear algebra with applications across many fields. To make them easier to understand, let's break them down into their two defining formulas.

Eigenvalues: An eigenvalue is a scalar associated with a square matrix. It is the factor by which an eigenvector is stretched or compressed when the matrix is applied to it; a negative eigenvalue also reverses the eigenvector. In essence, eigenvalues describe how strongly a matrix scales vectors along its eigenvector directions.

The formula for eigenvalues can be summarized as follows:

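For a square matrix A and a non-zero eigenvector v, the eigenvalue λ satisfies the equation: A * v = λ * v. In practice, the eigenvalues are found as the roots of the characteristic equation det(A - λI) = 0, where I is the identity matrix.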
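As a quick numerical check of A * v = λ * v, here is a minimal sketch using NumPy. The 2x2 matrix is a hypothetical example chosen for illustration (it does not come from the original text), and `satisfies_eigen_equation` is a helper name introduced here.

```python
import numpy as np

# Hypothetical example matrix (an assumption for illustration only).
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

# np.linalg.eig returns the eigenvalues and a matrix whose COLUMNS are
# the corresponding (unit-length) eigenvectors.
eigenvalues, eigenvectors = np.linalg.eig(A)

def satisfies_eigen_equation(A, lam, v, tol=1e-9):
    """Return True if A @ v equals lam * v up to a small tolerance."""
    return np.allclose(A @ v, lam * v, atol=tol)

# Check the defining equation for every eigenpair.
checks = [
    satisfies_eigen_equation(A, eigenvalues[i], eigenvectors[:, i])
    for i in range(len(eigenvalues))
]
print(eigenvalues)
print(checks)
```

For this matrix the characteristic equation is λ² - 7λ + 10 = 0, so the eigenvalues are 2 and 5; the order in which NumPy returns them is not guaranteed.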

Eigenvectors: Eigenvectors are the non-zero vectors that stay on the same line through the origin when a square matrix is applied to them; they are only scaled, and reversed if the eigenvalue is negative. They represent the fundamental directions of the transformation associated with the matrix. In PCA, for example, the eigenvectors of the covariance matrix define the principal components.

Here's a simplified formula for calculating eigenvectors:

For a square matrix A and an eigenvalue λ, you can find an eigenvector v by solving the equation (A - λI) * v = 0 for a non-zero v, where I is the identity matrix. Any non-zero scalar multiple of a solution is also an eigenvector.
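One way to solve (A - λI) * v = 0 numerically is to take the null space of A - λI. The sketch below does this with a singular value decomposition, which is a technique swapped in here for illustration rather than a method named in the text. It assumes λ is already known and has a one-dimensional eigenspace; the matrix and the function name `eigenvector_for` are hypothetical.

```python
import numpy as np

def eigenvector_for(A, lam):
    """Return a unit vector v with (A - lam*I) @ v approximately zero.

    Assumes lam is an eigenvalue of A with a one-dimensional eigenspace.
    """
    n = A.shape[0]
    M = A - lam * np.eye(n)
    # Singular values come back in descending order, so the last row of vh
    # is the right singular vector for the smallest singular value. When
    # lam is an eigenvalue, that singular value is ~0 and the vector spans
    # the null space of M.
    _, _, vh = np.linalg.svd(M)
    return vh[-1]

# Hypothetical example: this matrix has eigenvalues 2 and 5.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])
v = eigenvector_for(A, 5.0)
print(v)             # proportional to (1, 1), up to sign
print(A @ v, 5.0 * v)
```

By hand, A - 5I = [[-1, 1], [2, -2]], so any vector with equal components, such as (1, 1), solves the equation.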

Applied Example: Let's consider a practical example in data science: Principal Component Analysis (PCA). PCA is a technique used for dimensionality reduction and feature extraction. It relies on eigenvalues and eigenvectors to find the principal components of a dataset.

Suppose we have a dataset with multiple features, and we want to reduce it to its most informative components. We can compute the covariance matrix of the mean-centered data and then calculate its eigenvalues and eigenvectors. Each eigenvalue tells us how much variance the corresponding principal component explains, and each eigenvector gives the direction of that component.
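The covariance step can be sketched as follows. The dataset is synthetic and generated only for illustration (it is an assumption, not data from the original text), and `np.linalg.eigh` is used because a covariance matrix is symmetric.

```python
import numpy as np

# Synthetic dataset (assumption): 200 samples, 3 features, where the first
# two features share one underlying factor and the third is mostly noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 1))
signal = np.hstack([3.0 * latent, 1.5 * latent, np.zeros((200, 1))])
X = signal + rng.normal(scale=0.3, size=(200, 3))

# Center each feature, then compute the feature-by-feature covariance.
X_centered = X - X.mean(axis=0)
cov = np.cov(X_centered, rowvar=False)      # shape (3, 3)

# eigh returns eigenvalues in ascending order; sort them descending so the
# first component is the one that explains the most variance.
eigenvalues, eigenvectors = np.linalg.eigh(cov)
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# Share of total variance explained by each principal component.
explained_ratio = eigenvalues / eigenvalues.sum()
print(explained_ratio)
```

Because the first two features are driven by the same factor, the first component should account for most of the variance in this synthetic data.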

By keeping the eigenvectors with the largest eigenvalues and projecting the data onto them, we can reduce the dataset's dimensionality while preserving most of its variance, which simplifies the analysis and visualization of the data.
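The selection and projection step might look like the sketch below. The function name `pca_reduce`, its return values, and the synthetic data are all assumptions introduced for illustration; this is one straightforward way to do it, not a reference implementation.

```python
import numpy as np

def pca_reduce(X, k):
    """Project X onto its top-k principal components.

    Returns the reduced data, the component matrix (n_features x k),
    the feature means, and the fraction of variance retained.
    """
    mean = X.mean(axis=0)
    Xc = X - mean
    cov = np.cov(Xc, rowvar=False)
    vals, vecs = np.linalg.eigh(cov)           # ascending eigenvalues
    top = np.argsort(vals)[::-1][:k]           # indices of the k largest
    components = vecs[:, top]
    Z = Xc @ components                        # reduced representation
    retained = vals[top].sum() / vals.sum()
    return Z, components, mean, retained

# Synthetic data (assumption): three features driven by one factor.
rng = np.random.default_rng(1)
t = rng.normal(size=(100, 1))
X = np.hstack([t, 2.0 * t, -t]) + rng.normal(scale=0.05, size=(100, 3))

Z, components, mean, retained = pca_reduce(X, k=1)

# Map the reduced data back to the original feature space.
X_approx = Z @ components.T + mean
print(Z.shape, retained)
```

In this example three features are compressed into a single column, and `retained` reports how much of the total variance that one component keeps.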

In summary, eigenvalues and eigenvectors show how a matrix scales vectors along particular directions, and they have practical applications in fields such as data science, engineering, and physics. Understanding them through the two formulas above, A * v = λ * v and (A - λI) * v = 0, is a practical starting point for tackling real-world problems.

#By Sir NolieBoy Rama Bantanos

(The Circle 11.11 Series)

#nolieism

