Lecture 19: Generative Models I


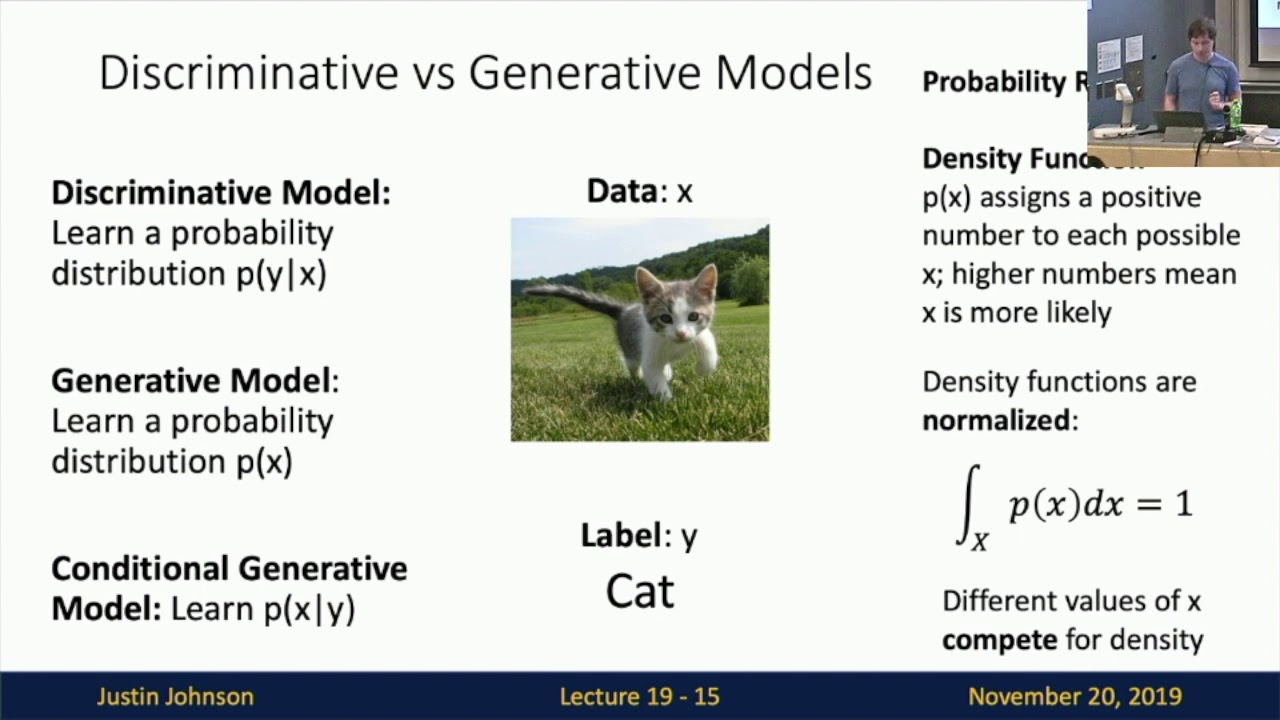

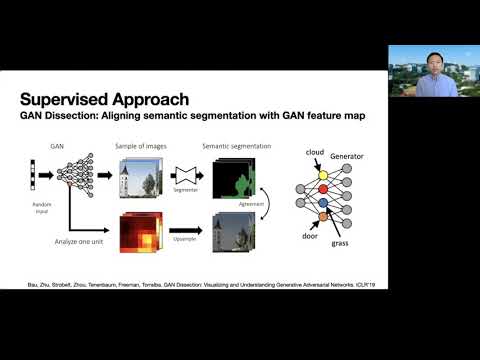

Lecture 19 is the first of two lectures about generative models. We compare supervised and unsupervised learning, and contrast discriminative and generative models. We discuss autoregressive generative models that explicitly model densities, including PixelRNN and PixelCNN. We discuss autoencoders as a method for unsupervised feature learning, and generalize them to variational autoencoders, a type of generative model that uses variational inference to maximize a lower bound on the data likelihood.
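
For orientation, the two objectives mentioned above can be written in their standard textbook form. This is a sketch rather than the lecture's own notation: the symbols $x$ (an image with pixels $x_1, \dots, x_n$), $z$ (a latent code), $p_\theta$ (the model), $q_\phi$ (the approximate posterior) and $p(z)$ (the prior) are assumptions introduced here.

```latex
% Autoregressive models (e.g. PixelRNN, PixelCNN) factor the density
% with the chain rule, one pixel at a time:
p_\theta(x) = \prod_{i=1}^{n} p_\theta\left(x_i \mid x_1, \dots, x_{i-1}\right)

% Variational autoencoders maximize a lower bound on the log-likelihood:
\log p_\theta(x) \;\ge\;
  \mathbb{E}_{z \sim q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right]
  - D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right)
```

The first expression is an exact likelihood that can be evaluated directly, while the second is only a bound, which is why the description speaks of maximizing a lower bound on the data likelihood.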

_________________________________________________________________________________________________

Computer vision has become ubiquitous in our society, with applications in search, image understanding, apps, mapping, medicine, drones, and self-driving cars. Core to many of these applications are visual recognition tasks such as image classification and object detection. Recent developments in neural network approaches have greatly advanced the performance of these state-of-the-art visual recognition systems. This course is a deep dive into the details of neural-network-based deep learning methods for computer vision. During this course, students will learn to implement, train, and debug their own neural networks and gain a detailed understanding of cutting-edge research in computer vision. We will cover learning algorithms, neural network architectures, and practical engineering tricks for training and fine-tuning networks for visual recognition tasks.

