How a Transformer works at inference vs training time

I made this video to illustrate the difference between how a Transformer is used at inference time (i.e. when generating text) and how it is trained.
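
The inference side of this contrast can be sketched in plain Python. This is a minimal, hypothetical illustration, not the code from the video: `next_token` stands in for one forward pass of a trained encoder-decoder model, greedy decoding is assumed for simplicity (beam search and sampling are common alternatives), and the token ids are made up. The encoder input stays fixed while the decoder input grows by one predicted token per forward pass. At training time, by contrast, the whole target sequence is fed to the decoder in a single pass.

```python
from typing import Callable, List

# Hypothetical special-token ids, chosen for illustration only.
DECODER_START_ID = 0
EOS_ID = 1


def greedy_generate(
    next_token: Callable[[List[int], List[int]], int],
    input_ids: List[int],
    max_new_tokens: int = 10,
) -> List[int]:
    """Greedy autoregressive decoding with an encoder-decoder model.

    `next_token(input_ids, decoder_input_ids)` is a hypothetical stand-in for
    one forward pass that returns the most likely next token id.
    """
    # The decoder starts from a single start token.
    decoder_input_ids = [DECODER_START_ID]
    for _ in range(max_new_tokens):
        # One forward pass per generated token: the encoder input is fixed,
        # and the decoder only sees the tokens produced so far.
        token = next_token(input_ids, decoder_input_ids)
        decoder_input_ids.append(token)
        if token == EOS_ID:
            break
    return decoder_input_ids


def toy_copy_model(input_ids: List[int], decoder_input_ids: List[int]) -> int:
    # Hypothetical "model" that copies the source token at the current
    # decoding position and emits EOS once the source is exhausted.
    position = len(decoder_input_ids) - 1
    return input_ids[position] if position < len(input_ids) else EOS_ID


print(greedy_generate(toy_copy_model, [5, 6, 7]))  # [0, 5, 6, 7, 1]
```

Because each step depends on the previous prediction, generation needs as many forward passes as output tokens, which is why inference cannot be parallelized over the output sequence the way training can.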

The video explains in detail the difference between input_ids, decoder_input_ids and labels:

- the input_ids are the inputs to the encoder

- the decoder_input_ids are the inputs to the decoder

- the labels are the targets for the decoder.
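
How labels and decoder_input_ids relate at training time can be sketched as follows, assuming the shift-right convention that encoder-decoder models such as T5 and BART in Hugging Face Transformers use to derive decoder_input_ids when only labels are passed. This is a minimal, hypothetical sketch in plain Python: `shift_right` and all token ids are illustrative, not a library API, and the input_ids would simply go to the encoder unchanged. The decoder input is the label sequence shifted one position to the right behind a start token, so at each position the decoder is trained to predict the next label (teacher forcing). T5, for example, uses its pad token as the decoder start token.

```python
from typing import List

# Label value ignored by the loss: PyTorch's CrossEntropyLoss uses
# ignore_index=-100 by default, and Hugging Face models follow that convention.
IGNORE_INDEX = -100


def shift_right(
    labels: List[int], decoder_start_token_id: int, pad_token_id: int
) -> List[int]:
    """Build decoder_input_ids from labels for teacher forcing."""
    # Prepend the start token and drop the last label, so position i of the
    # decoder input holds the token that precedes labels[i].
    shifted = [decoder_start_token_id] + labels[:-1]
    # -100 is not a valid token id, so masked positions become padding.
    return [pad_token_id if t == IGNORE_INDEX else t for t in shifted]


# Hypothetical target ids: 1 = EOS, trailing -100 = padded positions.
labels = [5, 6, 1, IGNORE_INDEX, IGNORE_INDEX]
decoder_input_ids = shift_right(labels, decoder_start_token_id=0, pad_token_id=0)
print(decoder_input_ids)  # [0, 5, 6, 1, 0]

# With a causal mask, the decoder at position i sees decoder_input_ids[: i + 1]
# and is trained to predict labels[i]; -100 positions contribute no loss.
for i, target in enumerate(labels):
    if target != IGNORE_INDEX:
        print(decoder_input_ids[: i + 1], "->", target)
```

The loop prints `[0] -> 5`, `[0, 5] -> 6` and `[0, 5, 6] -> 1`: all three predictions come out of one forward pass during training, whereas at inference time the same prefixes are built up one generated token at a time.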

Resources:

How does a Transformer work ?

How does a Transformer work - Working Principle electrical engineering

How a Transformer Works 3D

How a Transformer Works

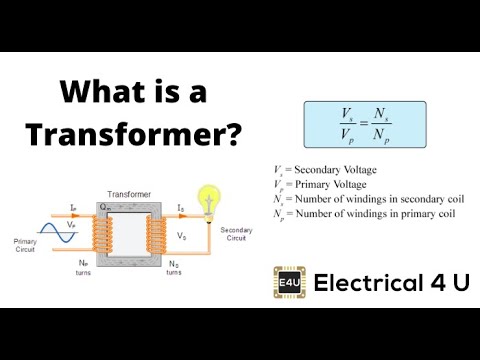

How a Transformer Works ⚡ What is a Transformer

What are Transformers (Machine Learning Model)?

How Does a Transformer Works? - Electrical Transformer explained

Is it easy to create your own Transformer? Everything you need to know about Transformers! || EB#42

What is Resistor || How resistor work || Understanding Resistors in Electronics

02 - What is a Transformer & How Does it Work? (Step-Up & Step-Down Transformer Circuits)

What's inside a Transformer?

How does a Transformer Work ANIMATION

What is a Transformer And How Do They Work? | Transformer Working Principle | Electrical4U

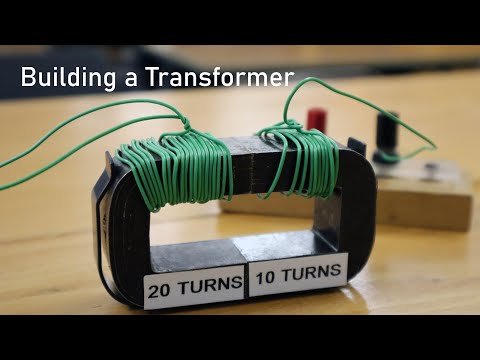

Building a Transformer - Physics Experiment

How a Transformer works at inference vs training time

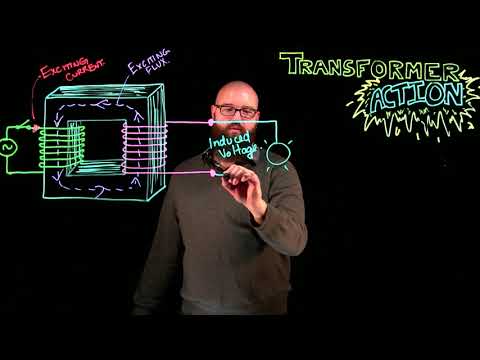

Transformer Action

Transformers explained

Transformer Design and Construction: How it's made? #vigyanrecharge #transformers

How does a Transformer work ? | working principle of transformer | Transformer working animation HD

How Electrical Power Transformer are made in Factory Amazing Process 😲☝

How a Toroidal Transformer Works ⚡ What is a Toroidal Transformer

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

Transformer Parts and Functions

How does a Transformer Work?
