Add One Smoothing

What do we do with words that are in our vocabulary (they are not unknown words) but appear in a test set in an unseen context (for example, they appear after a word they never appeared after in training)? To keep a language model from assigning zero probability to these unseen events, we'll have to shave off a bit of probability mass from some more frequent events and give it to the events we've never seen. This modification is called smoothing or discounting. In this section and the following ones we'll introduce a variety of ways to do smoothing: Laplace (add-one) smoothing, add-k smoothing, stupid backoff, and Kneser-Ney smoothing.
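As a rough illustration of the first technique listed, here is a minimal sketch of Laplace (add-one) smoothing for a bigram model. It uses the standard estimate P(w | prev) = (C(prev w) + 1) / (C(prev) + V), where V is the vocabulary size. The toy corpus, the `<s>` and `</s>` boundary markers, and the function names are assumptions made for this example only, not something taken from the video.

```python
from collections import Counter

def train_bigram_counts(sentences):
    """Count contexts and bigrams over tokenized sentences (hypothetical helper)."""
    context_counts = Counter()
    bigram_counts = Counter()
    vocab = set()
    for tokens in sentences:
        # Assumed convention: pad each sentence with boundary markers.
        padded = ["<s>"] + tokens + ["</s>"]
        vocab.update(padded)
        for prev, word in zip(padded, padded[1:]):
            context_counts[prev] += 1
            bigram_counts[(prev, word)] += 1
    return context_counts, bigram_counts, vocab

def laplace_bigram_prob(prev, word, context_counts, bigram_counts, vocab_size):
    """Add-one estimate: (C(prev, word) + 1) / (C(prev) + V)."""
    return (bigram_counts[(prev, word)] + 1) / (context_counts[prev] + vocab_size)

# Toy corpus, invented for illustration.
corpus = [
    ["i", "like", "tea"],
    ["i", "like", "coffee"],
    ["you", "like", "tea"],
]
context_counts, bigram_counts, vocab = train_bigram_counts(corpus)
V = len(vocab)

# Seen bigram: "like tea" occurs twice, "like" occurs three times as a context.
p_seen = laplace_bigram_prob("like", "tea", context_counts, bigram_counts, V)

# Unseen bigram: "like you" never occurs, yet its probability is not zero.
p_unseen = laplace_bigram_prob("like", "you", context_counts, bigram_counts, V)

# The smoothed distribution over the whole vocabulary still sums to 1.
total = sum(
    laplace_bigram_prob("like", w, context_counts, bigram_counts, V) for w in vocab
)
print(p_seen, p_unseen, total)
```

With no smoothing, "tea" after "like" would get 2/3; here it drops to 3/10, and the difference is the probability mass shaved off and spread over the bigrams never seen in training. For simplicity this sketch counts `<s>` as part of the vocabulary, even though it can never follow another word.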

Add One Smoothing

N Grams Models Laplace Smoothing

Laplace smoothing | Laplace Correction | Zero Probability in Naive Bayes Classifier by Mahesh Huddar

N-Gram Smoothing Techniques

Data Mining and Machine Learning Laplace Smoothing Add One Smoothing

N-gram, Language Model, Laplace smoothing, Zero probability, Perplexity, Bigram, Trigram, Fourgram

CORELDRAW TRW TRAINING W IRIS

Smoothing Method | Language Model Log Add, Laplace Smoothing

Lecture - 22 - Language Modeling Smoothing: Add-one(Laplace) Smoothing

Additive Smoothing

Laplace Smoothing in Naive Bayes || Lesson 50 || Machine Learning || Learning Monkey ||

n gram Numerical | Predict the probability of the sentence | natural Language Processing

Exponential Smoothing in Excel (Find α) | Use Solver to find smoothing constant alpha

INFINITIPRO BY CONAIR The Knot Dr. All-in-One Smoothing Dryer Brush, Hair Dryer & Hot Air Brush

Skin Softening with Beautiful Texture | 1-Minute Photoshop (Ep. 4)

[Naïve Bayes Classification] add-one Smoothing, BernoulliNB, MultinomialNB, GaussianNB

Lecture - 23 - Smoothing Techniques : (1) Add-one (Laplace’s law) (2) Add-delta ((Lidstone’s law)...

Exponential Smoothing Forecasting Using Microsoft Excel

3 tips for smoothing frizzy hair #shorts #amyshairtips

Hair Hack For Smoothing Flyaways 💫 #ootd #styling #hairtutorial #hair #hairstyle #haircut #shorts

Does the Dyson Airwrap Coanda Smoothing Dryer really work?

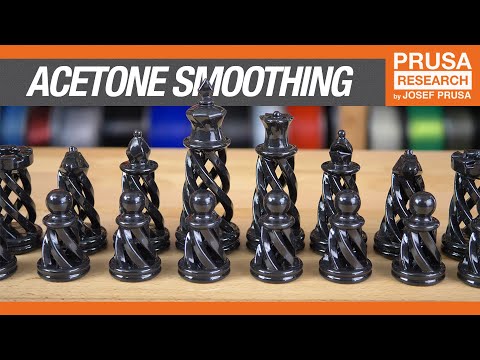

Improve your prints with acetone smoothing

Additive smoothing in language models (NLP817 3.7)

Laplace smoothing
